Yifei Zhou

@yifeizhou02

Visiting researcher @AIatMeta | PhD student @berkeley_ai, working on RL for foundation models

ID: 1547790583334285313

http://yifeizhou02.github.io 15-07-2022 03:50:20

217 Tweets

1.1K Followers

445 Following

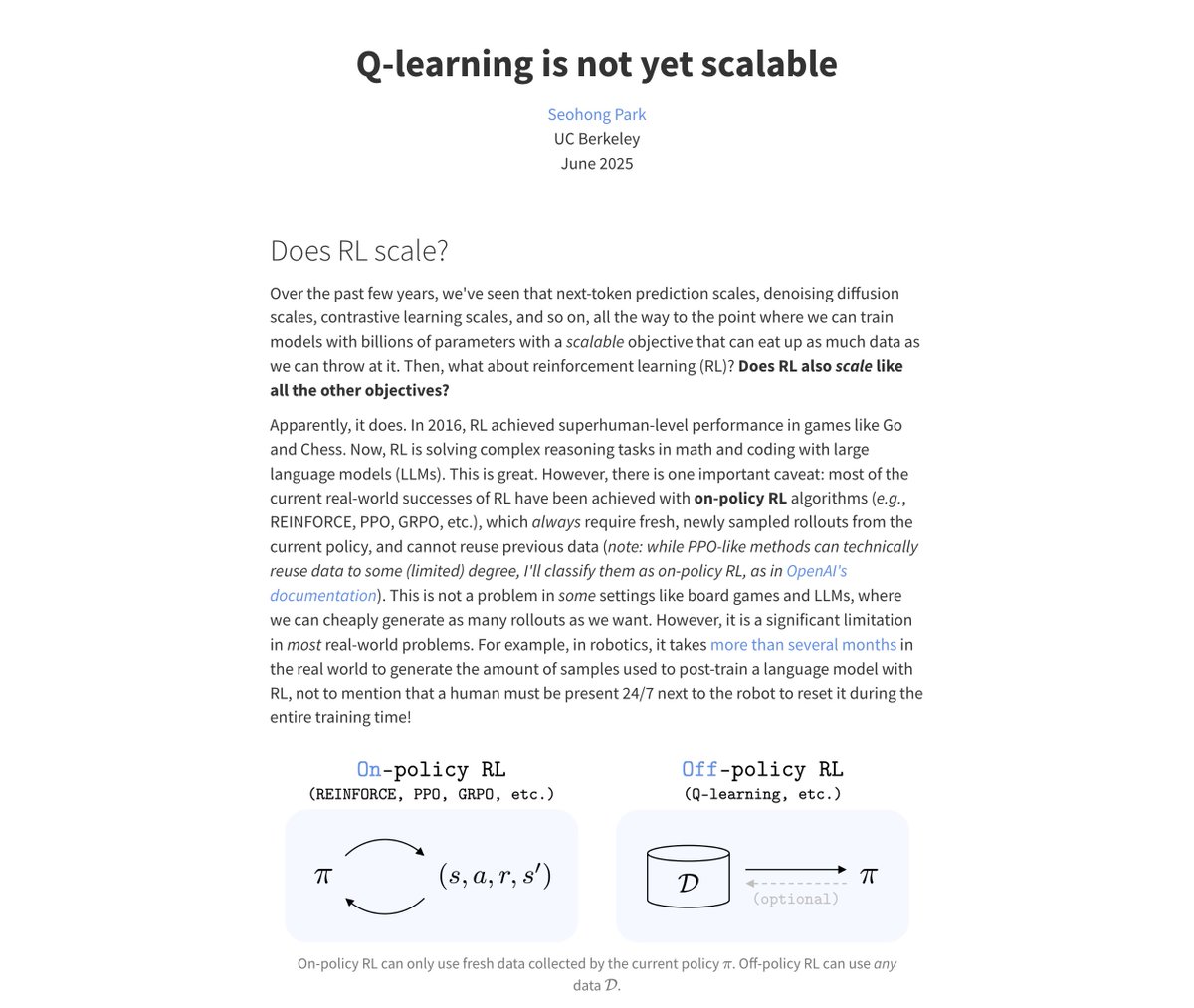

Can offline RL methods do well on any problem, as we scale compute and data? In our new paper led by Seohong Park, we show that task horizon can fundamentally hinder scaling for offline RL, and how explicitly reducing task horizon can address this. arxiv.org/abs/2506.04168 🧵⬇️

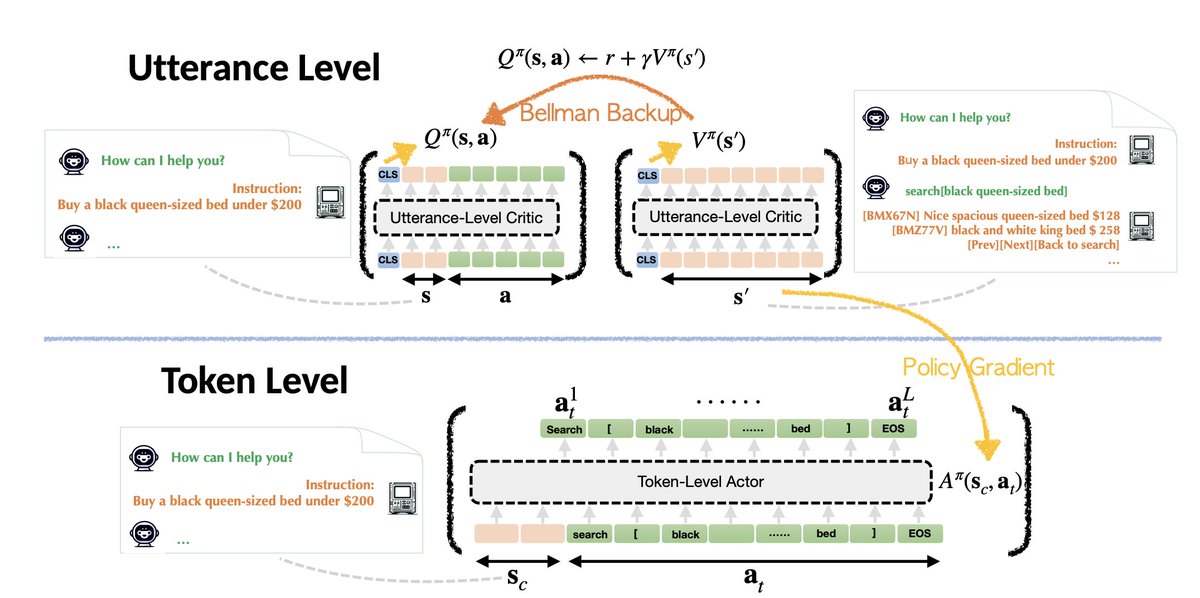

Looking back, some of the most effective methods that we've built for training LLM/VLM agents in multi-turn settings also *needed* to utilize such a hierarchical structure, e.g., ArCHer (yifeizhou02.github.io/archer.io/) by Yifei Zhou, further showing the promise behind such ideas.

I always found it puzzling how language models learn so much from next-token prediction, while video models learn so little from next-frame prediction. Maybe it's because LLMs are actually brain scanners in disguise. Idle musings in my new blog post: sergeylevine.substack.com/p/language-mod…

Our view on test-time scaling has been to train models to discover algos that enable them to solve harder problems. Amrith Setlur & Matthew Yang's new work e3 shows how RL done with this view produces best <2B LLM on math that extrapolates beyond training budget. 🧵⬇️