Yulin Chen

@yulinchen99

PhD Student at @nyuniversity @CILVRatNYU | Previously @TsinghuaNLP

ID: 1473167814131593221

https://yulinchen99.github.io/ 21-12-2021 05:46:16

16 Tweets

177 Followers

157 Following

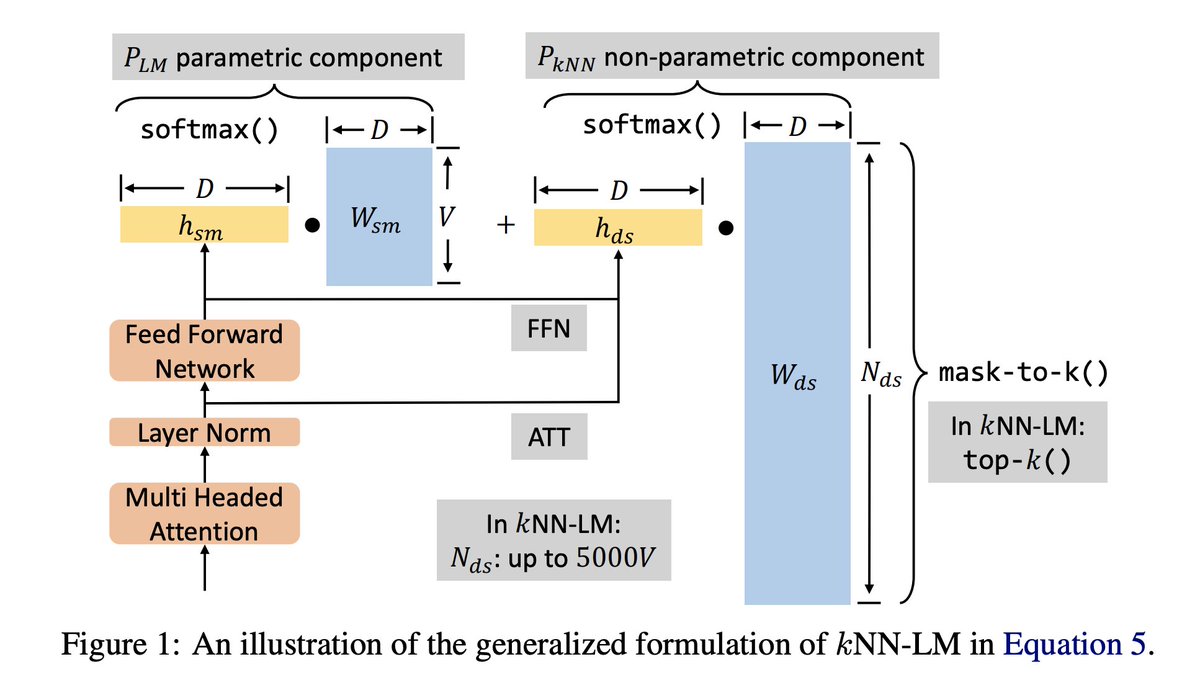

A new preprint 📢 arxiv.org/pdf/2301.02828… K-nearest neighbors language models (kNN-LMs; Urvashi Khandelwal et al., ICLR 2020) improve the perplexity of standard LMs, even when they retrieve examples from the *same training set that the base LM was trained on*. But why? (1/3)