Zhiding Yu

@zhidingyu

Working to make machines understand the world like human beings.

Words are my own.

ID: 1283364577581756418

https://chrisding.github.io/

15-07-2020 11:35:44

146 Tweets

7.7K Followers

494 Following

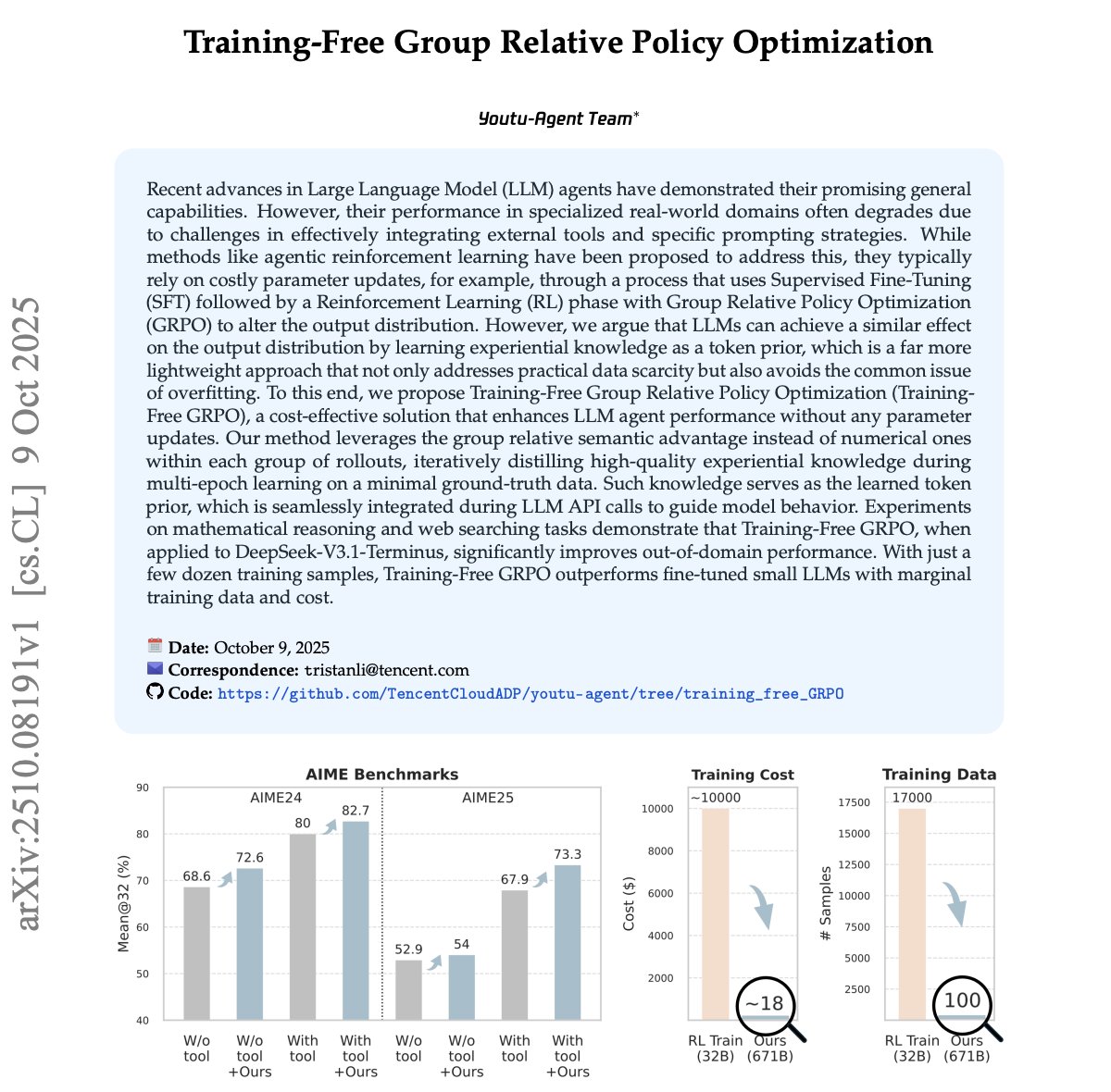

Check out this super cool work by our intern Shaokun Zhang: RL + tool use is the future of LLM agents! Before joining NVIDIA, Shaokun was a contributor to the famous multi-agent workflow framework #AutoGen. Now the age of agent learning is arriving, going beyond workflow control!

I did not notice this until just now. Thank you, Andi Marafioti, for the recommendation! I'm very glad that even though Eagle 2 is not our latest work, people still find it very useful.