Zifeng Ding

@zifengding6

Postdoc @cambridgenlp @Cambridge_Uni

Visiting Research Scientist @CamelAIOrg

ID: 1593720090981277697

https://zifengding.github.io/

18-11-2022 21:37:52

6 Tweets

11 Followers

17 Following

The AVeriTeC automated #factchecking shared task paper is now online. Congratulations again to the winners and thanks to all our amazing participants! To hear more, swing by FEVERworkshop at #EMNLP in Miami on the 15th. 📝: arxiv.org/abs/2410.23850 🌐: fever.ai/task.html

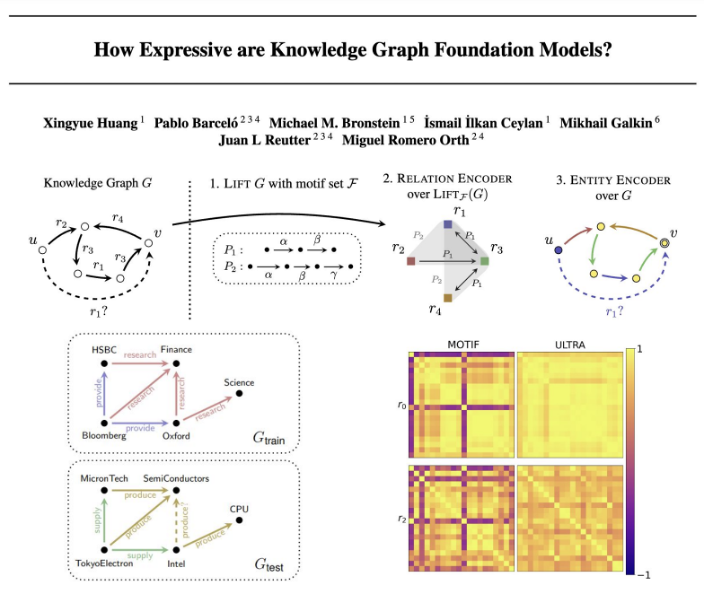

Knowledge Graph Foundation Models (KGFMs) are at the frontier of graph learning - but we didn’t have a principled understanding of what we can (or can’t) do with them. Now we do! 💡🚀 🧵 with Pablo Barcelo, İsmail İlkan Ceylan, Michael Bronstein, Michael Galkin, Juan Reutter, Miguel

Looking forward to this year's edition! With great speakers: Ryan McDonald, Yulan He, Vlad Niculae, Antonis Anastasopoulos, Raquel Fernández, Anna Rogers, Preslav Nakov, Mohit Bansal, Eunsol Choi, and Marie-Catherine de Marneffe!

[Scaling Environment for Agents]

Launch week day 2

Project Loong 🐉 is releasing!

camel-ai.org/launchweek-env…

![CAMEL-AI.org (@camelaiorg) on Twitter photo](https://pbs.twimg.com/media/Gndw7EsW4AAdcls.jpg)