Eldar Kurtic

@_eldarkurtic

Efficient inference @RedHat_AI & @ISTAustria

ID: 1017492704970801152

12-07-2018 19:35:39

207 Tweets

614 Followers

590 Following

Major props to the contributors who made this release happen 🙌 @JoelNiklaus, Lewis Tunstall, Alina Lozovskaya, Clémentine Fourrier 🍊, Alvin HERIUN, Eldar Kurtić, María Grandury, jnanliu, and Pavel Iakubovskii. Check out the release & try it out: 🔗 github.com/huggingface/li…

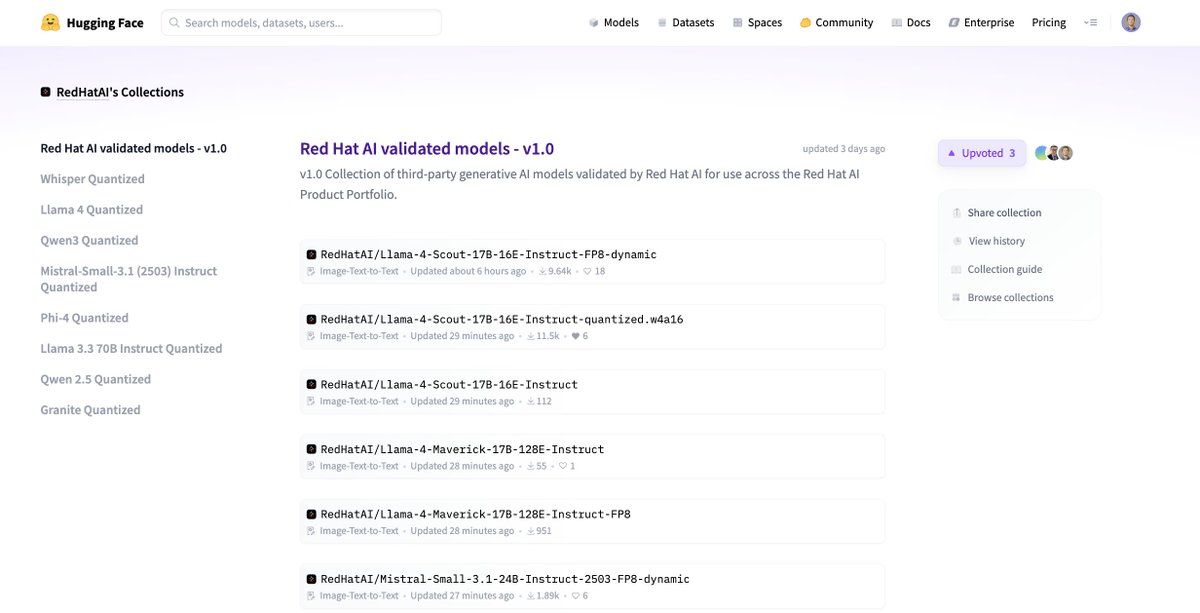

Love this approach by Red Hat AI. We need more trust & validation in AI and this can help! huggingface.co/RedHatAI

The recording of Erwan Gallen's and my PyTorch Day France 2025 and GOSIM Foundation talk, "Scaling LLM Inference with vLLM," is now available on PyTorch’s YouTube channel. youtube.com/watch?v=XYh6Xf…

Want to learn more about GuideLLM, the tool used by Charles 🎉 Frye and Modal's LLM Engine Advisor to easily benchmark an LLM inference stack? Join the next vLLM office hours with Saša, Michael Goin, Jenny Yi, and Mark Kurtz. More details in the thread below 👇

The Hugging Face folks deserve far more credit for being a pillar of open-source and still managing to push out SOTA results across the board, along with a full write-up of the entire model’s lifecycle.

vLLM office hours return next week! Alongside project updates from Michael Goin, vLLM committers and HPC experts Robert Shaw + Tyler Michael Smith will share how to scale MoE models with llm-d and lessons from real-world multi-node deployments. Register: red.ht/office-hours