Jonathan Lai

@_jlai

Post training @GoogleDeepMind, Gemini Reasoning, RL, Opinions are my own

ID: 971441900

26-11-2012 06:31:19

18 Tweets

115 Followers

138 Following

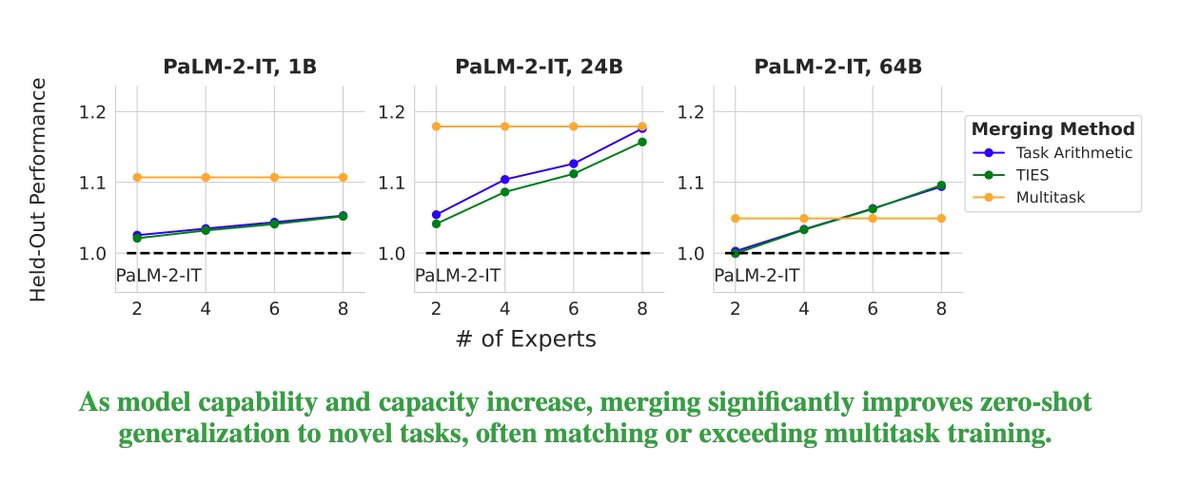

🚨✨ Thrilled to share the first study on model merging at large scales by our intern Prateek Yadav, Google AI, Google DeepMind. For larger LLMs, merging is an efficient alternative to multitask learning that can preserve the majority of in-domain performance, while significantly

Excited to share that our paper on model merging at scale has been accepted to Transactions on Machine Learning Research (TMLR). Huge congrats to my intern Prateek Yadav and our awesome co-authors Jonathan Lai, Alexandra Chronopoulou, Manaal Faruqui, Mohit Bansal, and Tsendsuren 🎉!!

Huge congrats Prateek Yadav and all!! 🎉 It was great to work with everyone here!