Jaivardhan Kapoor

@_jaivardhan_

PhD student @mackelab and affiliated with @MPI_IS, previously @IITKanpur, @MPI_IS, @AaltoPML

I develop diffusion models for medical images and neural recordings

ID: 731230526778970112

http://jkapoor.me 13-05-2016 21:11:58

421 Tweets

328 Followers

669 Following

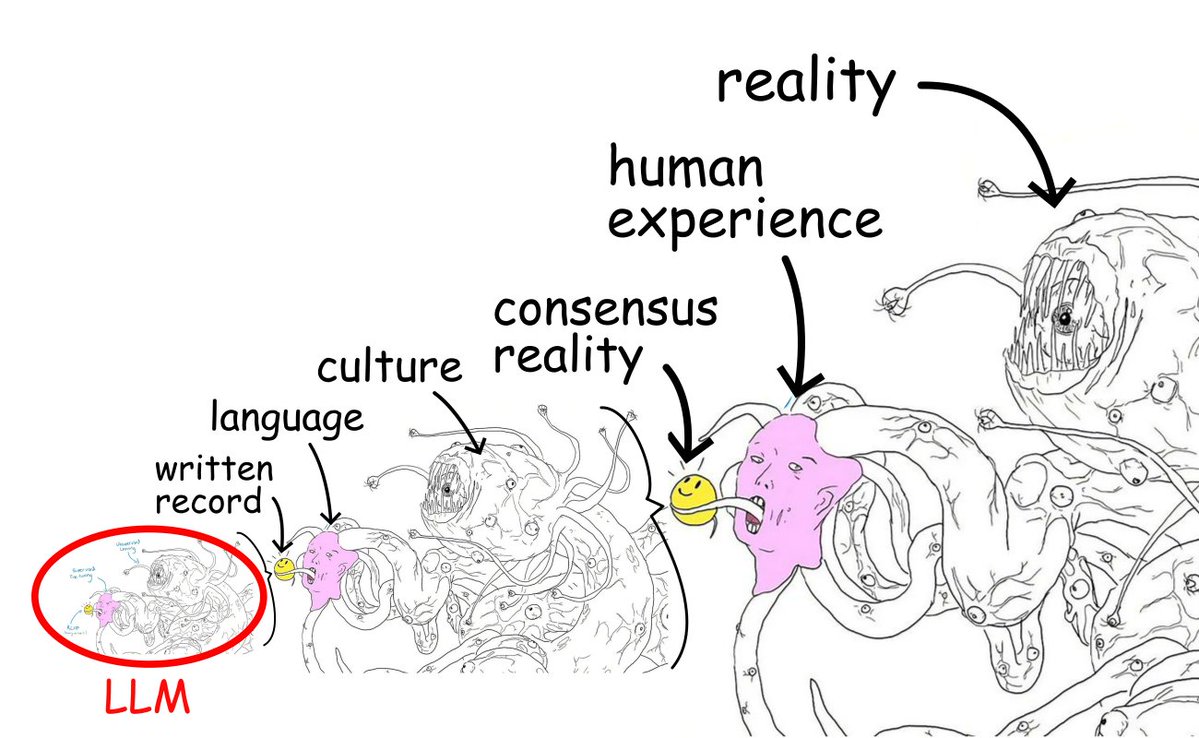

Before Lisbon, I gave a Machine Learning in Science teatime talk about surfing as a beginner, to prepare folks for the frustration. But it was actually about the PhD: in SD, I realized that surfing as a beginner is a lot like starting a PhD, when you, invariably, also suck. This was my guide for both: