Angelica Chen

@_angie_chen

She/Her | PhD student @NYUDataScience - formerly at @Princeton 🐅

angie-chen at 🦋

Interested in deep learning+NLP, pastries, and running

ID: 703274475576541189

http://angie-chen55.github.io/

26-02-2016 17:44:36

109 Tweets

1.1K Followers

437 Following

What makes some LM interpretability research “mechanistic”? In our new position paper in BlackboxNLP, Sarah Wiegreffe and I argue that the practical distinction was never technical, but a historical artifact that we should be—and are—moving past to bridge communities.

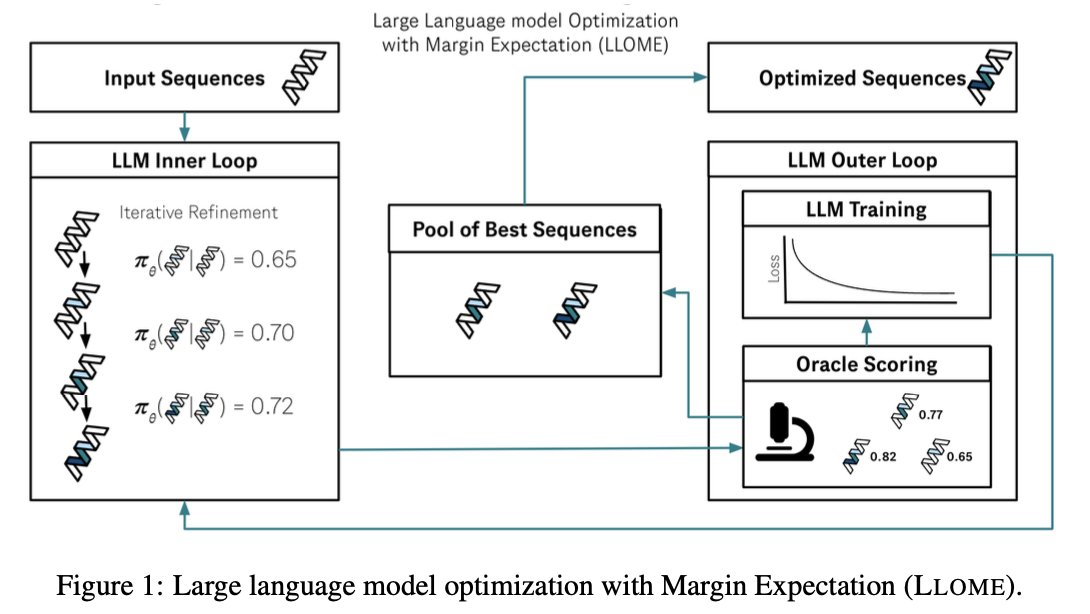

LLMs are highly constrained biological sequence optimizers. In new work led by Angelica Chen & Samuel Stanton, we show how to drive an active learning loop for protein design with an LLM. 1/

Two NeurIPS Conference workshop spotlight talks from our lab this year! Amy Lu will present on all-atom protein generation from sequence-only inputs at MLSB and Angelica Chen will present on LLMs as highly-constrained biophysical sequence optimizers at AIDrugX

CDS PhD student Angelica Chen presents LLOME, using LLMs to optimize synthetic sequences with potential applications for drug design. Co-led by Samuel Stanton & Nathan C. Frey and with insights from Kyunghyun Cho, Richard Bonneau, and others at Prescient Design. nyudatascience.medium.com/language-model…

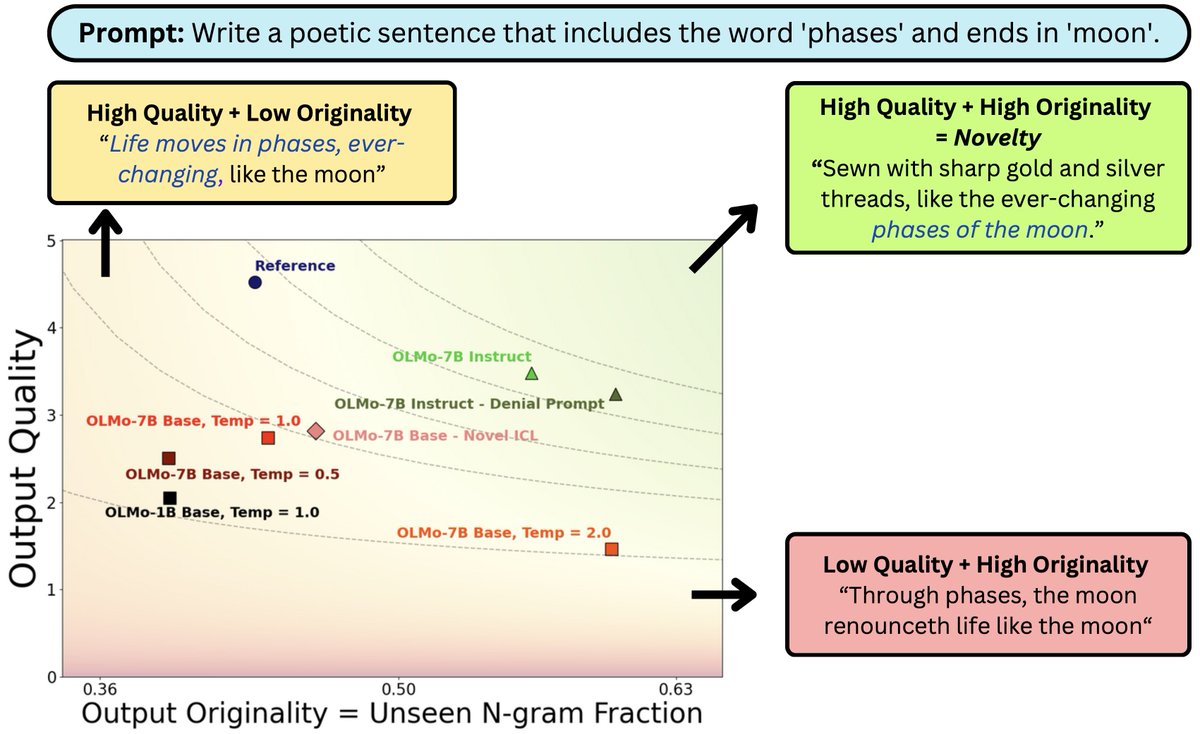

What does it mean for #LLM output to be novel? In work w/ John (Yueh-Han) Chen, Jane Pan, Valerie Chen, and He He, we argue it needs to be both original and high quality. While prompting tricks trade one for the other, better models (scaling/post-training) can shift the novelty frontier 🧵