Behrooz Ghorbani

@_ghorbani

Researcher at @OpenAI, studying large language models. Formerly @GoogleBrain and @stanford_ee. Opinions expressed are solely my own.

ID: 941178306744872970

https://web.stanford.edu/~ghorbani/ 14-12-2017 05:29:29

134 Tweets

447 Followers

491 Following

Do current LLMs perform simple tasks (e.g., grade school math) reliably? We know they don't (is 9.9 larger than 9.11?), but why? Turns out that, for one reason, benchmarks are too noisy to pinpoint such lingering failures. w/ Josh Vendrow, Eddie Vendrow, Sara Beery 1/5

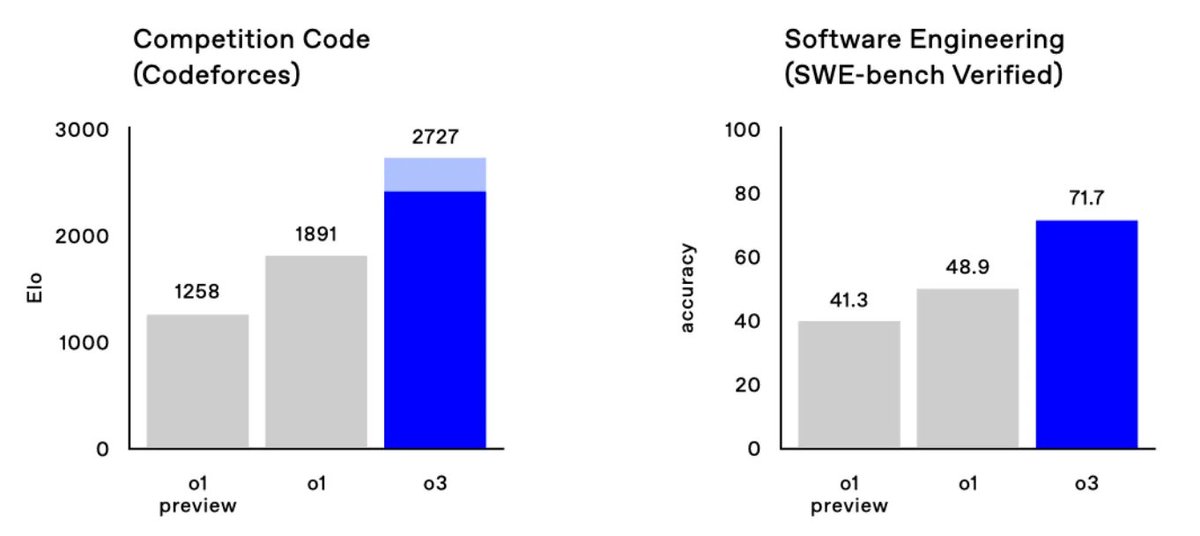

Congrats to Alexander Wei, Sheryl Hsu, Noam Brown, and the team for this truly remarkable result! It's a clear example of the rapid pace of AI progress!

Huge congratulations to AI at Meta and to Shengjia Zhao! Shengjia is one of the most brilliant and kind researchers I’ve had the privilege to work with.