Oleg Rybkin

@_oleh

🇺🇦 Postdoc @ Berkeley. Interested in RL at scale.

ID: 2306706864

http://olehrybkin.com 23-01-2014 14:37:12

282 Tweets

835 Followers

402 Following

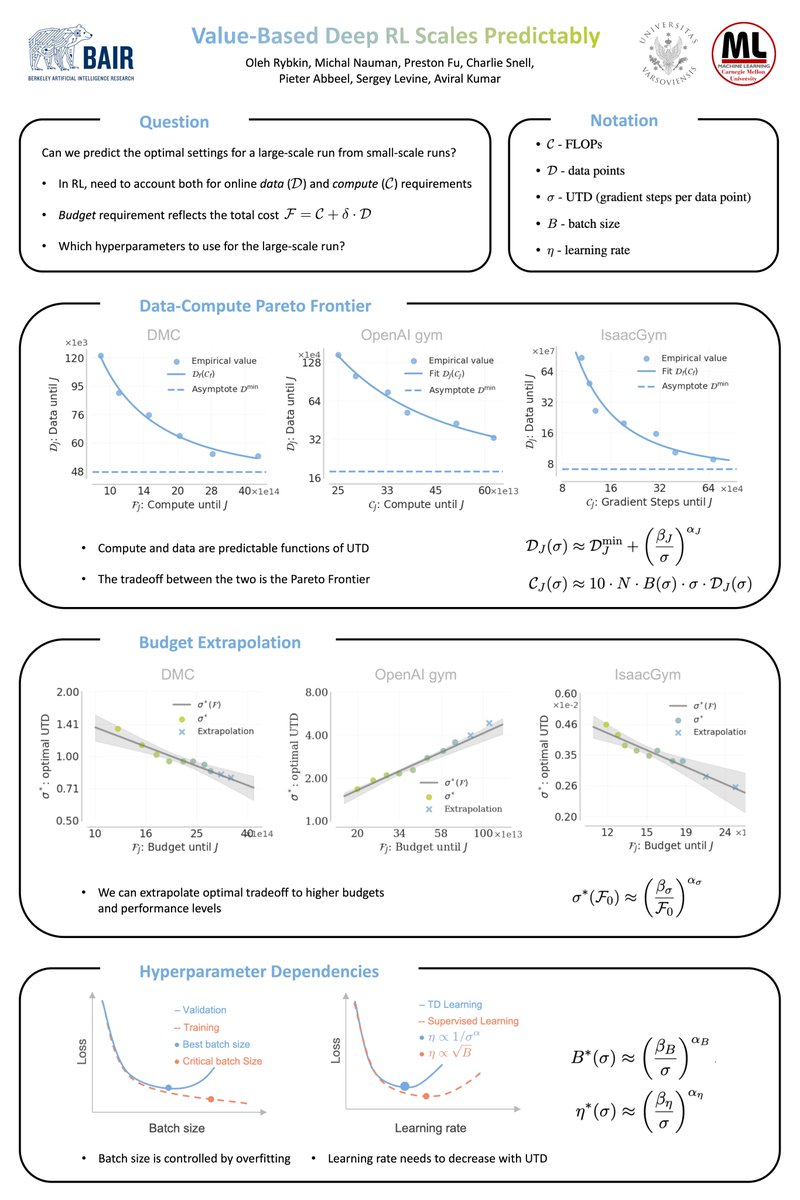

Oleg Rybkin will also present an oral talk on our recent work on building scaling laws for value-based RL. We find that value-based deep RL algorithms scale predictably. Talk at Workshop on robot learning (WRL), April 27. Charlie Snell will then present the poster!

Oleg Rybkin Dorsa Sadigh Chelsea Finn To be presented at ICML 2025 as a *spotlight poster* :)

our new system trains humanoid robots using data from cell phone videos, enabling skills such as climbing stairs and sitting on chairs in a single policy (w/ Hongsuk Benjamin Choi Junyi Zhang David McAllister)

This was fun, thanks for having me Chris Paxton Michael Cho - Rbt/Acc! See the podcast for a livestream of the robot in real time and me evaluating a policy live! Or check it out for yourself at auto-eval.github.io and eval your policy in the real world without breaking a sweat

fly51fly (@fly51fly): [LG] Value-Based Deep RL Scales Predictably

O Rybkin, M Nauman, P Fu, C Snell... [UC Berkeley] (2025)

arxiv.org/abs/2502.04327

![fly51fly (@fly51fly) on Twitter photo](https://pbs.twimg.com/media/GjOJLNaaQAAzTAN.jpg)