Andreas

@_tsamados

PhD researcher on human-machine teaming @UniofOxford

I like hacking LLMs, philosophy of science, cryptography, p2p networks, direct action

ID: 1530201115039608833

https://custodians.online/ 27-05-2022 14:55:59

78 Tweets

86 Followers

115 Following

wiki.offsecml.com has some shiny new things:
- Biagio Montaruli's adversarial phishing page generator
- workarounds for Tensorflow and Keras model execution, thanks Mary Walker & faceteep
- hidden unicode attacks for LLMs, Joseph Thacker Riley Goodside
- Vishing techniques

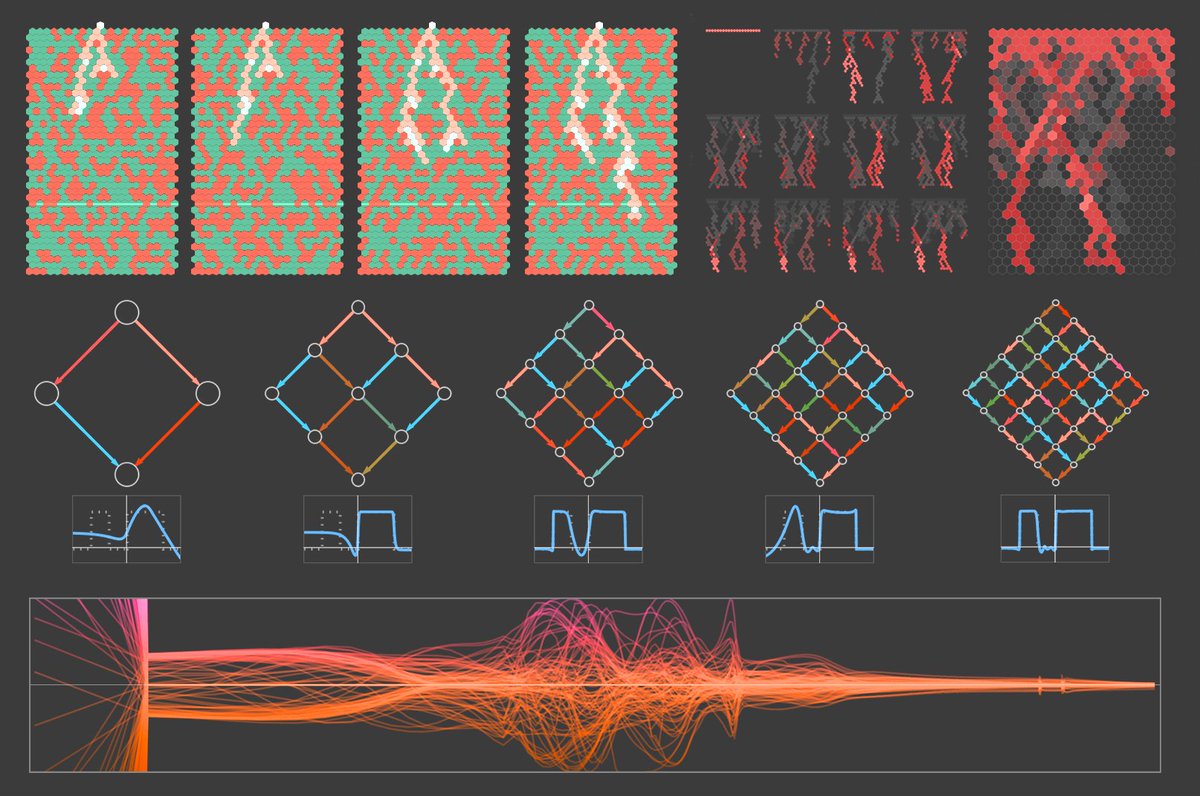

🔴 Freshly published in Science Advances: the new bible of anonymisation, its many sins and its potential for redemption! With Andrea Gadotti, Florimond Houssiau, Ana-Maria Cretu and Yves-A. de Montjoye. science.org/doi/10.1126/sc…