Vaishnavh Nagarajan

@_vaishnavh

Research scientist at Google || Prev: CS PhD at Carnegie Mellon ||

Shifting to vaishnavh@ on the blue app

foundations of AI

ID: 874847778119266304

http://vaishnavh.github.io

14-06-2017 04:35:38

480 Tweets

2.2K Followers

582 Following

Excited to share our work with my amazing collaborators, Goodeat, Xingjian Bai, Zico Kolter, and Kaiming. In a word, we show an “identity learning” approach for generative modeling, by relating the instantaneous/average velocity in an identity. The resulting model,

Yep, what Junhong Shen said. I started working on ML for PDEs during my PhD, and the first three years were just reading books and appreciating the beauty and the difficulty of the subject!

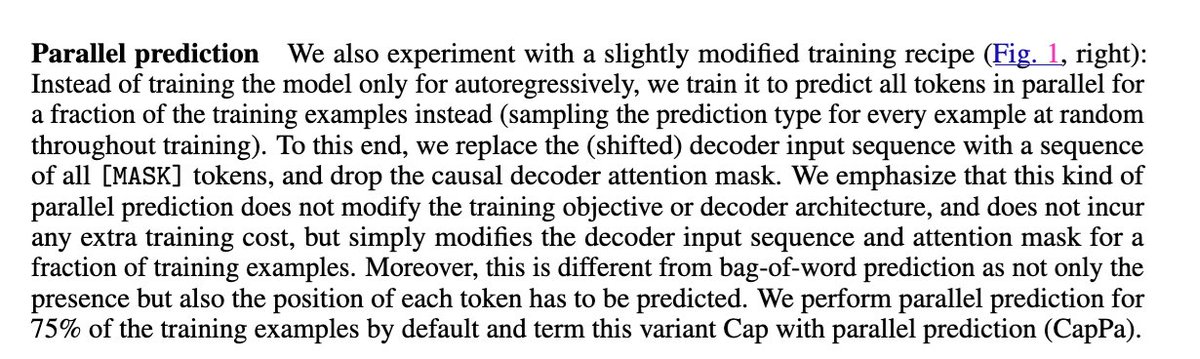

Lucas Beyer (bl16): I was about to add that the first instance of a dummy-token-based multi-token approach was in this paper, which called it "parallel prediction", until I noticed the author list! Way ahead of its time! arxiv.org/abs/2306.07915