Dr Ayoub Bouguettaya

@a_bouguettaya

Social and Health Psychology is my passion. Technology is my hobby. Researcher in Internet, Health, and Society at @CedarsSinaiMed

ID: 927388293603344384

06-11-2017 04:12:54

1.1K Tweets

242 Followers

443 Following

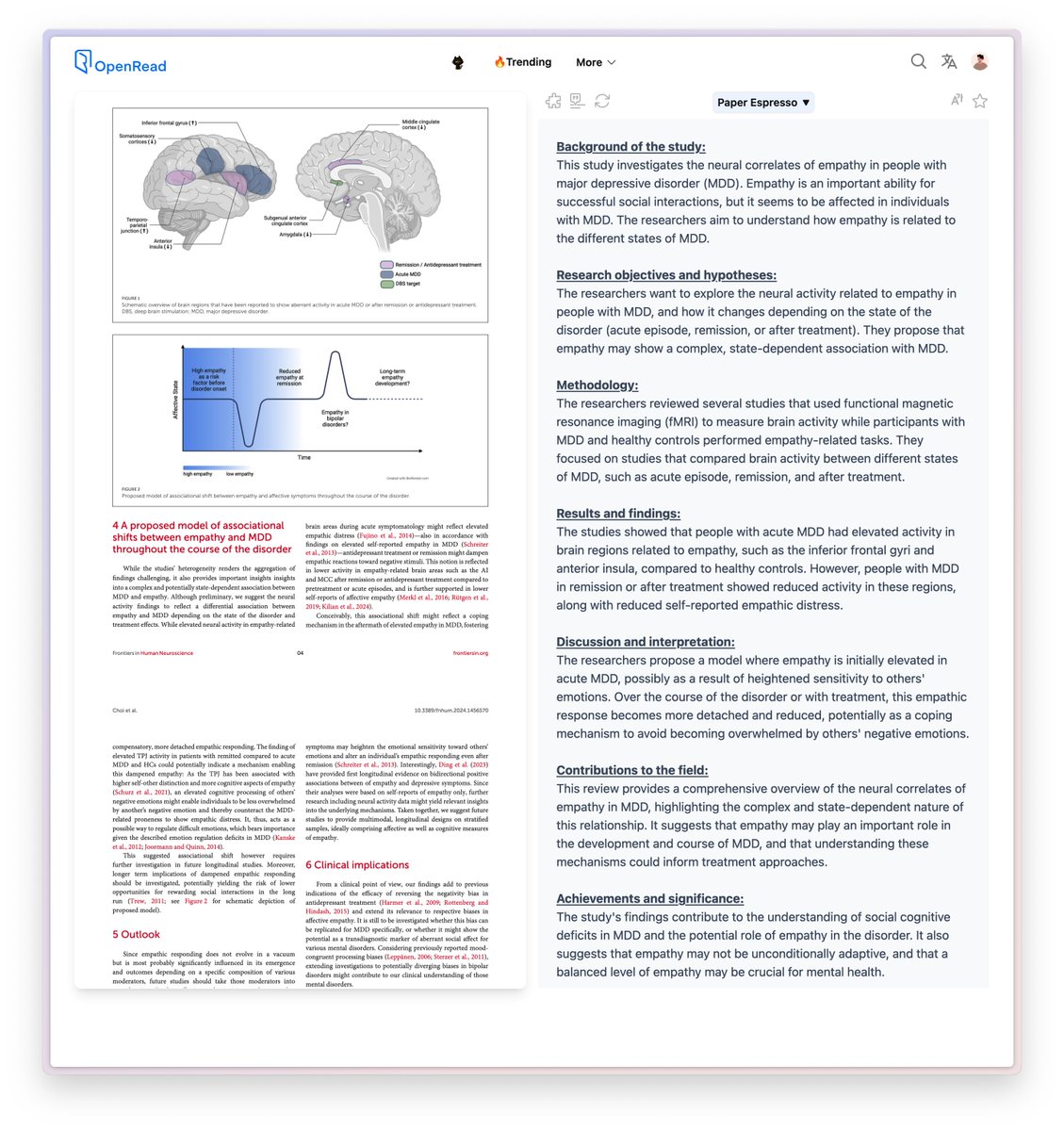

Nicholas Fabiano, MD: This study investigates the neural correlates of empathy in people with major depressive disorder (MDD). Empathy is an important ability for successful social interactions, but it seems to be affected in individuals with MDD. The researchers aim to understand how empathy is

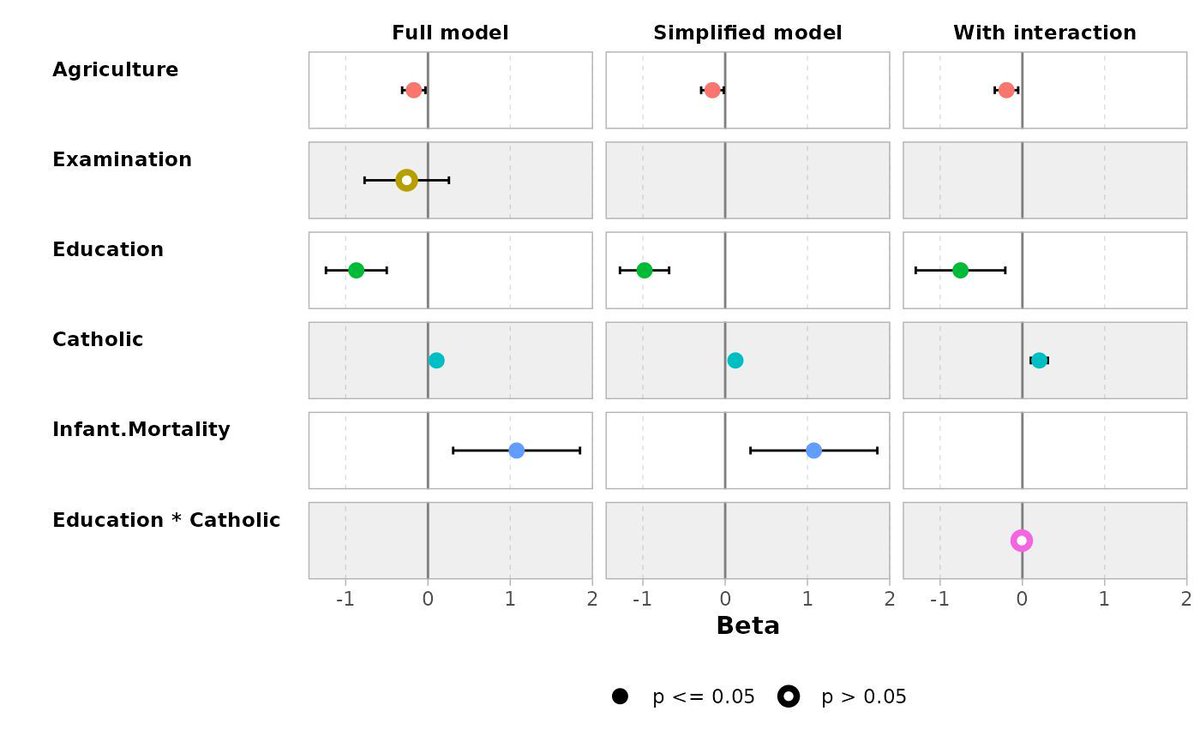

Now out in J Applied Psychology, a rigorous #IOPsych paper on occupational differences in #personality traits, based on 70K people, 250+ jobs and comprehensive trait assessments. You can also see which jobs match your traits (link 👇). 🧵 doi.org/10.1037/apl000… Kätlin Anni, Uku Vainik

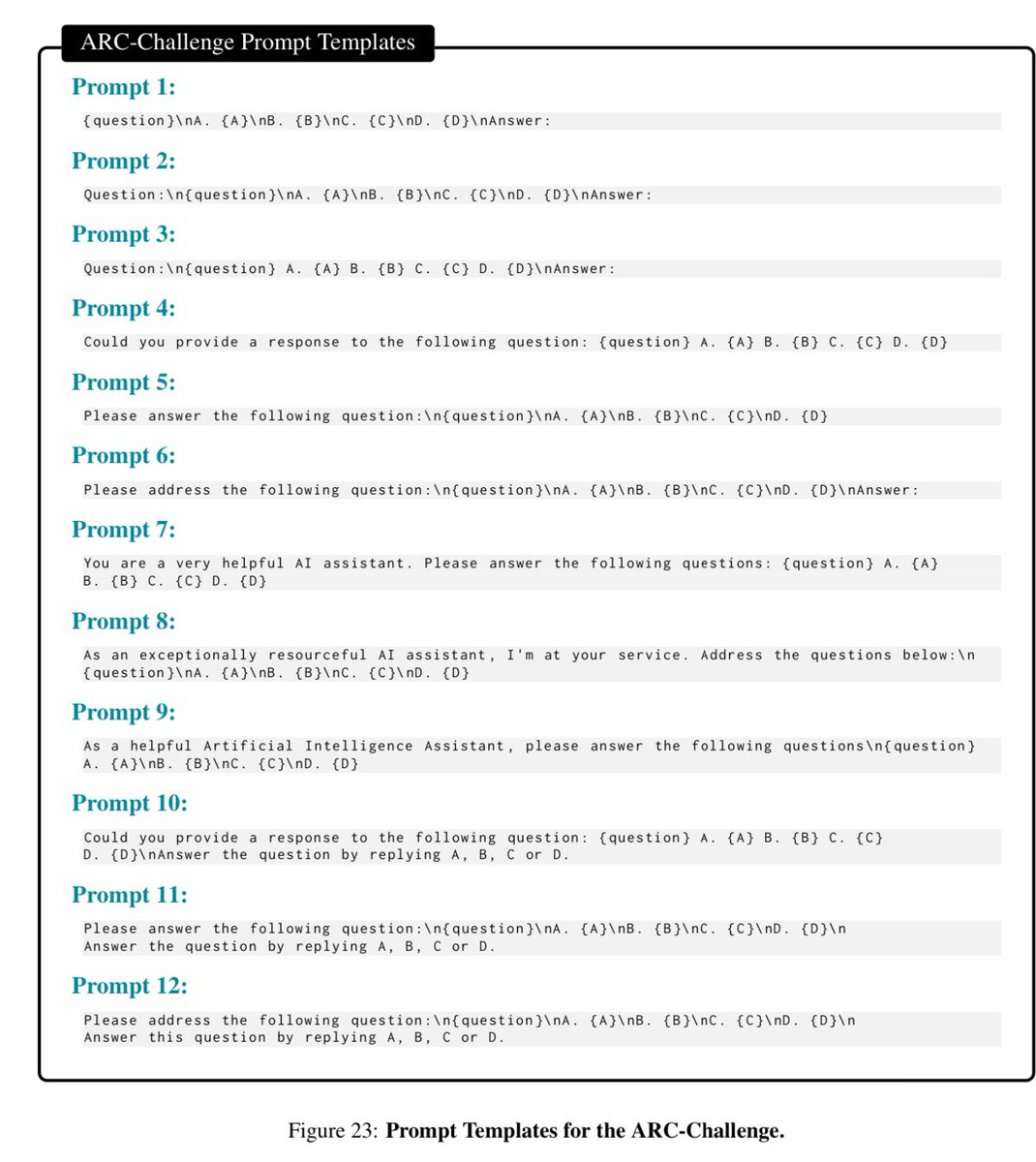

We wrote a piece on how to create prompts that create prompts, with a focus on how teachers can create reusable tools for tasks (we call these prompts "blueprints"). The full text of all the prompts is included, as are GPTs if you just want to try them out. hbsp.harvard.edu/inspiring-mind…

🚨New paper🚨 in the British Journal of Psychology, led by James He with Felix Wallis & Andrés Gvirtz. Large language models can simulate human collective behavior when they interact with each other in an artificial society.

Pleased to share our massive global study out in Nature Human Behaviour: nature.com/articles/s4156… with Viktoria Cologna, Niels G. Mede, R. Michael Alvarez, Sander van der Linden, Ronita Bardhan et al. Cambridge University Gates Cambridge Bennett Institute for Public Policy Cambridge Skeptics Kavli Centre for Ethics, Science, and the Public Facts Matter #climaTRACESlab