Aaron Lou

@aaron_lou

@OpenAI | On leave CS PhD @stanford

ID: 1672006110

http://aaronlou.com · Joined 15-08-2013 02:47:28

227 Tweets

2.2K Followers

541 Following

Excited to share our paper “Derivative-Free Guidance in Continuous and Discrete Diffusion Models with Soft Value-Based Decoding”. My amazing coauthors: Yulai Zhao, Chenyu (Monica) Wang, Gabriele Scalia, gokcen, Surag Nair, Tommaso Biancalani, Shuiwang Ji, Aviv Regev, Sergey Levine, and Masatoshi Uehara

🌟 Excited to share our latest work on making diffusion language models (DLMs) faster than autoregressive (AR) models! ⚡ It’s been great to work on this with Caglar Gulcehre 😎 Lately, DLMs are gaining traction as a promising alternative to autoregressive sequence modeling 👀 1/14 🧵

Very exciting work from Justin Deschenaux on fast discrete diffusion models! I'm especially excited since I had lost hope for such distillation techniques.

🧬Evo, the first foundation model trained at scale on DNA, is a Rosetta Stone for biology. DNA, RNA, and proteins are the fundamental molecules of life—and cracking the code of their complex language is an ongoing grand challenge. 🔬Today in Science Magazine, the labs of Arc

Replying to Tanishq Mathew Abraham, Ph.D., Dylan Patel, and SemiAnalysis: Biden admin is in the Big Autoregression's pocket

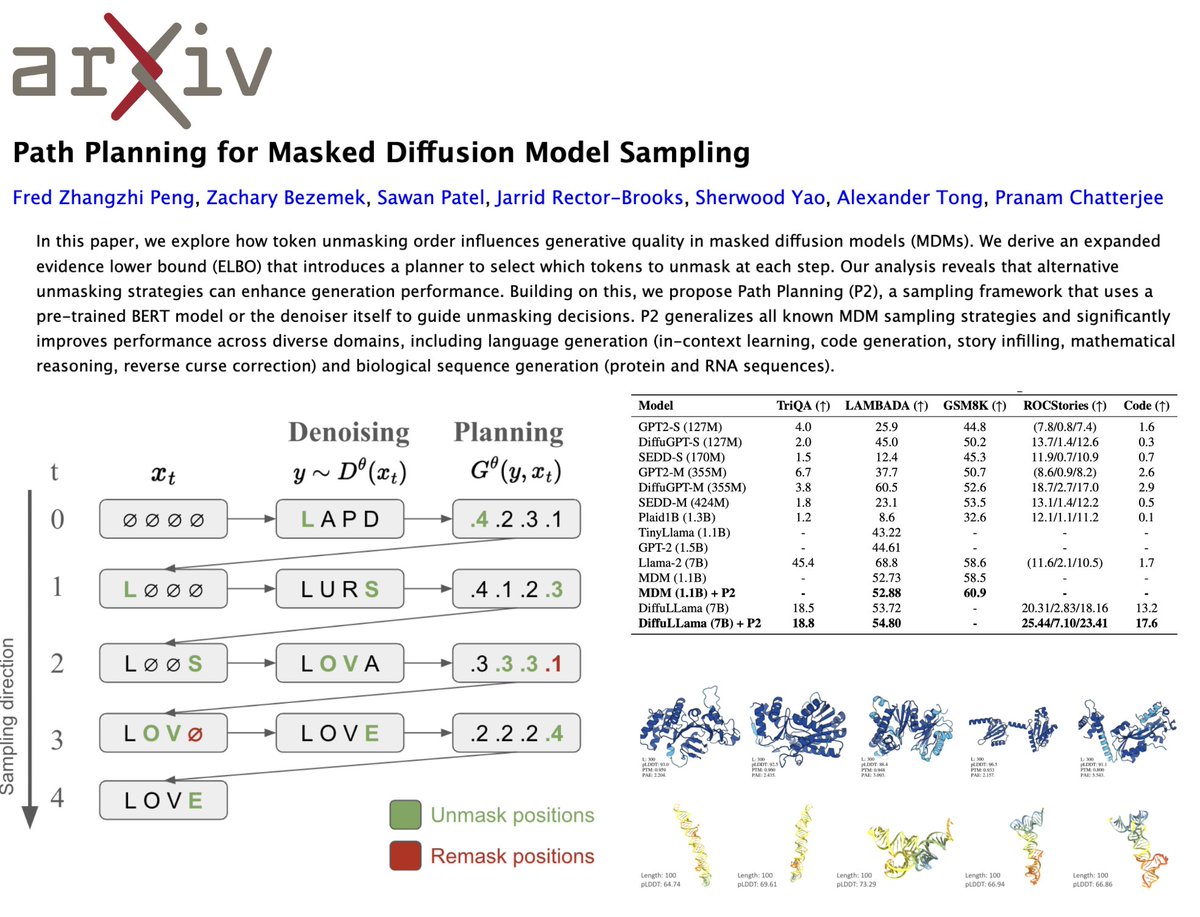

It's tough times for science. 🥺 But we have to keep innovating to fight another day, and today I'm so proud to share Fred Zhangzhi Peng's new, groundbreaking sampling algorithm for generative language models, Path Planning (P2). 🌟 📜: arxiv.org/abs/2502.03540 💻: In the appendix!!