abhijitanand

@abhijit_ai

IR Researcher @ L3S Research Center

ID: 1511614695672885248

06-04-2022 08:00:24

23 Tweets

15 Followers

37 Following

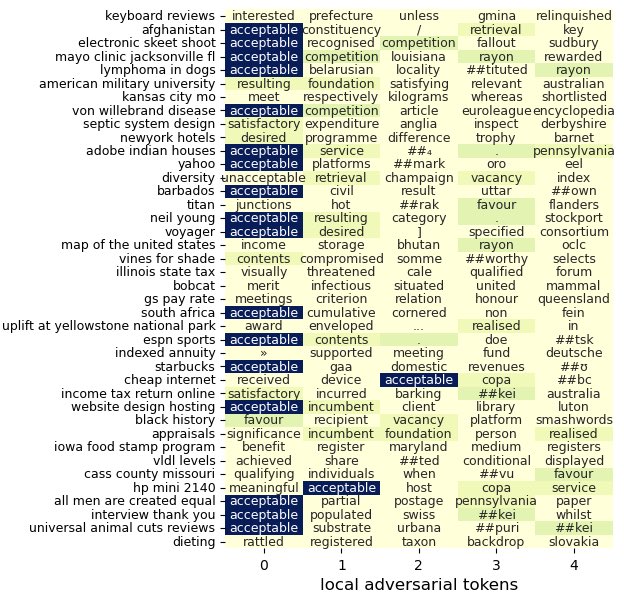

Three papers from my group at ICTIR and SIGIR. 1/ The first, with Yumeng Wang and Lijun Lyu, investigates the brittleness of neural rankers. Fun fact: recurring adversarial words like “acceptable” demote relevant documents. #ictir2022 L3S Research Center @L3S_Research_Center@wisskomm TU Delft

2/ Wanna train neural re-rankers but only have a small amount of training data? With abhijitanand, Jurek Leonhardt, and Koustav Rudra, we propose supervised contrastive losses for data augmentation methods when training cross-encoders. Paper: arxiv.org/abs/2207.03153

And we are underway. #xaiss starts with the keynote from Mihaela van der Schaar on New frontiers in ML interpretability.

A proud advisor moment for me 😇 ... You fully deserved it, Yumeng Wang. Cheers to our future adventures.

Happy to share our survey on explainable IR; comments are appreciated. Avishek Anand, Max Idahl, Jonas Wallat, Yumeng Wang, Joshua Ghost

Excited to be at #ECIR2023 to present our paper "Probing BERT for Ranking Abilities" with Fabian Beringer, abhijitanand and Avishek Anand! Paper: link.springer.com/chapter/10.100…