Adam Golinski

@adam_golinski

ML research @Apple, prev @OxCSML @InfAtEd, part of @MLinPL & @polonium_org 🇵🇱, sometimes funny

ID: 2922540907

http://adamgol.me/ 08-12-2014 09:04:27

796 Tweets

2.2K Followers

3.3K Following

We are happy to welcome our next speaker to MLSS 2025! 🎤 Regina Barzilay is a School of Engineering Distinguished Professor of AI & Health in the Department of Computer Science and the AI Faculty Lead at MIT Jameel Clinic. She develops machine learning methods for drug

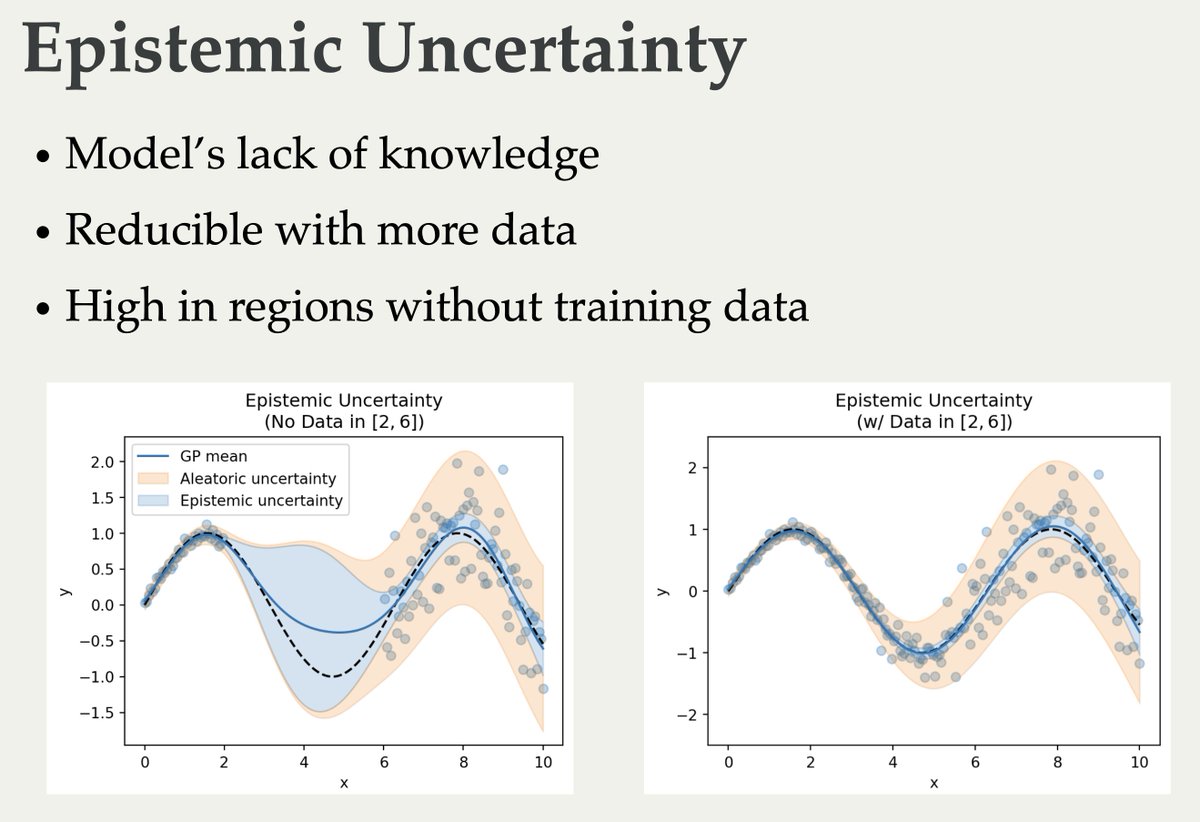

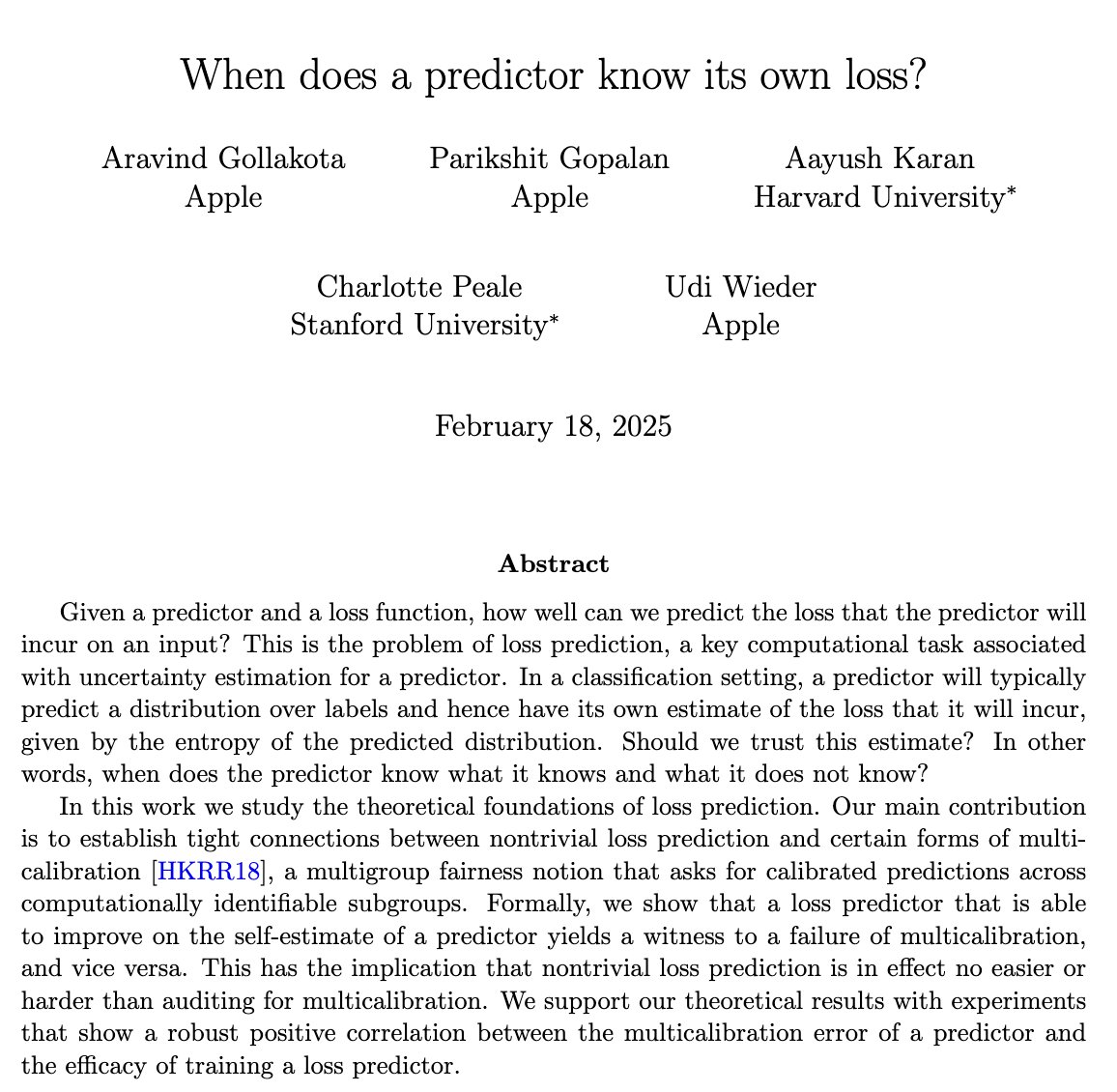

There’s a lot of confusion around uncertainty in machine learning. We argue the "aleatoric vs epistemic" view has contributed to this and present a rigorous alternative. #ICML2025 with Jannik Kossen @eleanortrollope Mark van der Wilk @adamefoster Tom Rainforth 1/5

I'll present my view on the future of uncertainties in LLMs and vision models at ICML Conference, in panel discussions, posters, and workshops. Reach out if you wanna chat :) Here's everything from me and other folks at Apple: machinelearning.apple.com/updates/apple-…

![Shuangfei Zhai (@zhaisf) on Twitter photo We attempted to make Normalizing Flows work really well, and we are happy to report our findings in paper arxiv.org/pdf/2412.06329, and code github.com/apple/ml-tarfl…. [1/n]](https://pbs.twimg.com/media/GedfCo_aEAIJUtL.jpg)