Adam Fisch

@adamjfisch

Research Scientist @ Google DeepMind | Formerly: PhD @ MIT EECS.

ID: 892997634813710336

http://people.csail.mit.edu/fisch/ 03-08-2017 06:36:42

292 Tweets

1.1K Followers

245 Following

This work was led by the amazing Amrith Setlur during his internship at Google Research. With Chirag Nagpal, Adam Fisch, Xinyang (Young) Geng, Jacob Eisenstein, Rishabh Agarwal, Alekh Agarwal, and Jonathan Berant.

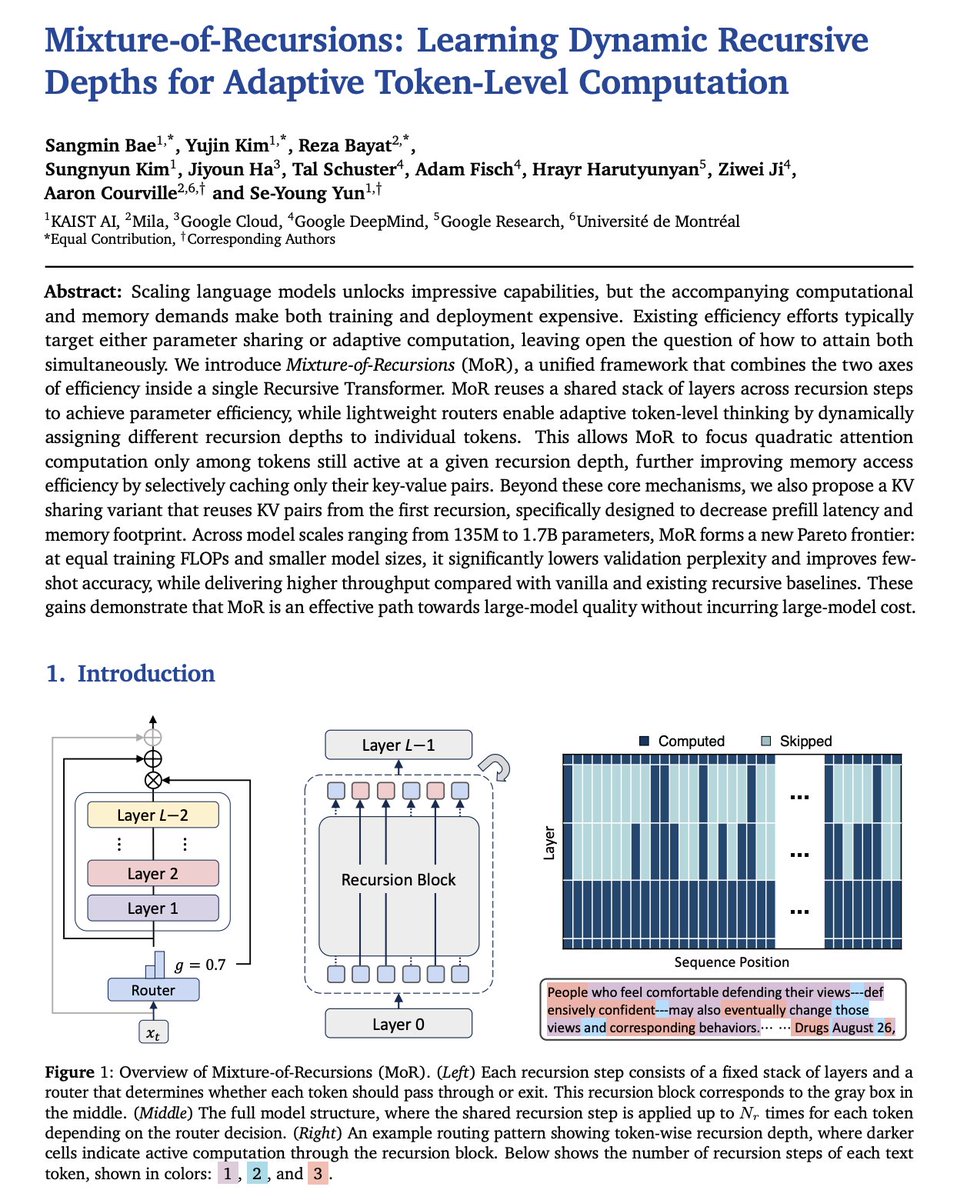

Check out our new paper on Recursive Transformers. Great having Sangmin here at Google DeepMind to lead it! Particularly excited about the potential for continuous depth-wise batching for much better early-exiting batch throughput.

Hi ho! New work: arxiv.org/pdf/2503.14481 With amazing collabs Jacob Eisenstein, Reza Aghajani, Adam Fisch, dheeru dua, Fantine Huot ✈️ ICLR 25, Mirella Lapata, and Vicky Zayats. Some things are easier to learn in a social setting. We show agents can learn to faithfully express their beliefs (along... 1/3

Huge thanks ❤️ to my awesome co-first authors Sangmin Bae and Reza Bayat @ ICML, and to all our collaborators and supervisors who made this possible: Sungnyun Kim, Jen Ha @ ICML 2025, Tal Schuster, Adam Fisch, Hrayr Harutyunyan, Ziwei Ji, Aaron Courville, and Se-Young Yun.

🏋️‍♂️ This unified MoR framework has very good performance and faster speeds. Check it out and ask any questions! Huge thanks to my awesome co-authors: Yujin Kim, Reza Bayat, Sungnyun Kim, Jen Ha @ ICML 2025, Tal Schuster, Adam Fisch, Hrayr Harutyunyan, Ziwei Ji, Aaron Courville, and Se-Young Yun! 🥰