Aditya Grover

@adityagrover_

Co-founder @InceptionAILabs. AI Prof @UCLA. Prev: PhD @StanfordAILab, bachelors @IITDelhi.

ID: 39525395

http://aditya-grover.github.io

Joined: 12-05-2009 15:43:56

871 Tweets

10.1K Followers

491 Following

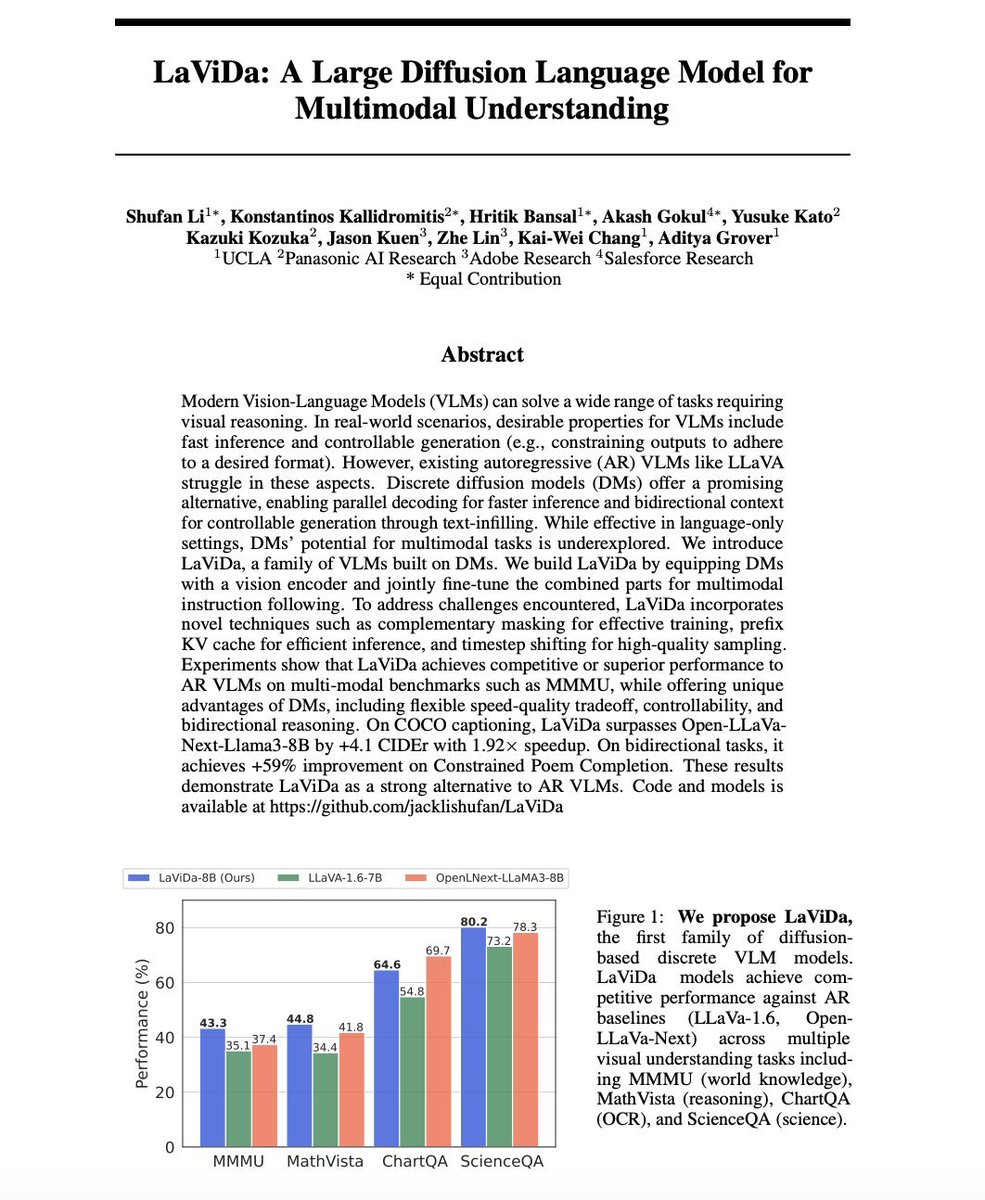

Diffusion language models go multimodal! It is particularly impressive to see the speed and quality results on visual reasoning benchmarks. Great work led by my students Shufan (Jack) Li and Hritik Bansal, together with amazing collaborators.

We're presenting OmniFlow at CVPR 2025. Check out our work at Poster #241 (ExHall D) on June 14, 8-10am. Additionally, my advisor Aditya Grover will give a talk on our recent work on multimodal diffusion language models at the WorldModelBench workshop on June 12.

🥳 Excited to share that VideoPhy-2 has been awarded 🏆 Best Paper at the World Models Workshop (physical-world-modeling.github.io) at #ICML2025! Looking forward to presenting it as a contributed talk at the workshop! 😃 w/ Clark Peng, Yonatan Bitton @ CVPR, Roman, Aditya Grover, Kai-Wei Chang