Abdulkadir Gokce

@akgokce0

IC SC @EPFL_en @ICepfl | EE&Math @unibogazici_en

ID: 1300695920405893122

01-09-2020 07:26:44

12 Tweets

49 Followers

65 Following

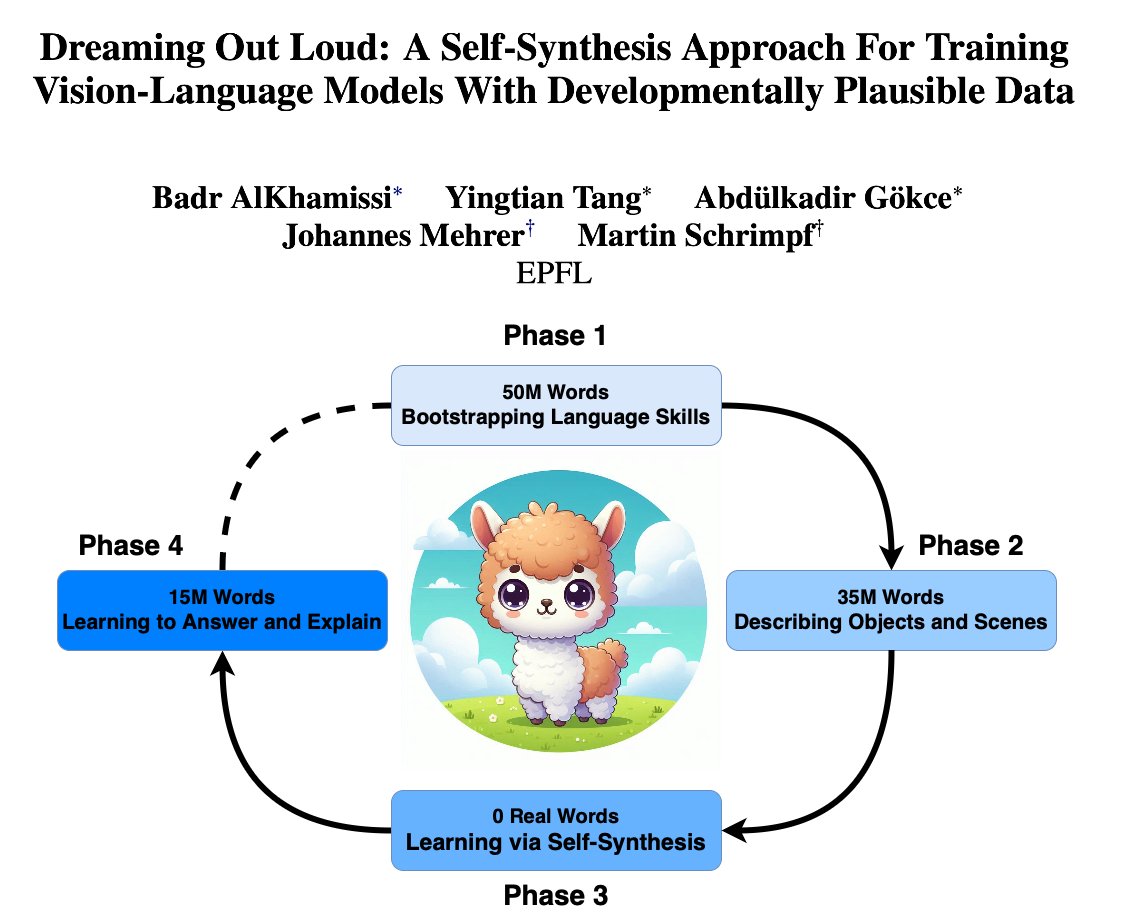

🚨 New Paper!! How can we train LLMs using 100M words? In our babyLM paper, we introduce a new self-synthesis training recipe to tackle this question! 🍼💻 This was a fun project co-led by me, Yingtian Tang, Abdulkadir Gokce, w/ Hannes Mehrer & Martin Schrimpf 🧵⬇️

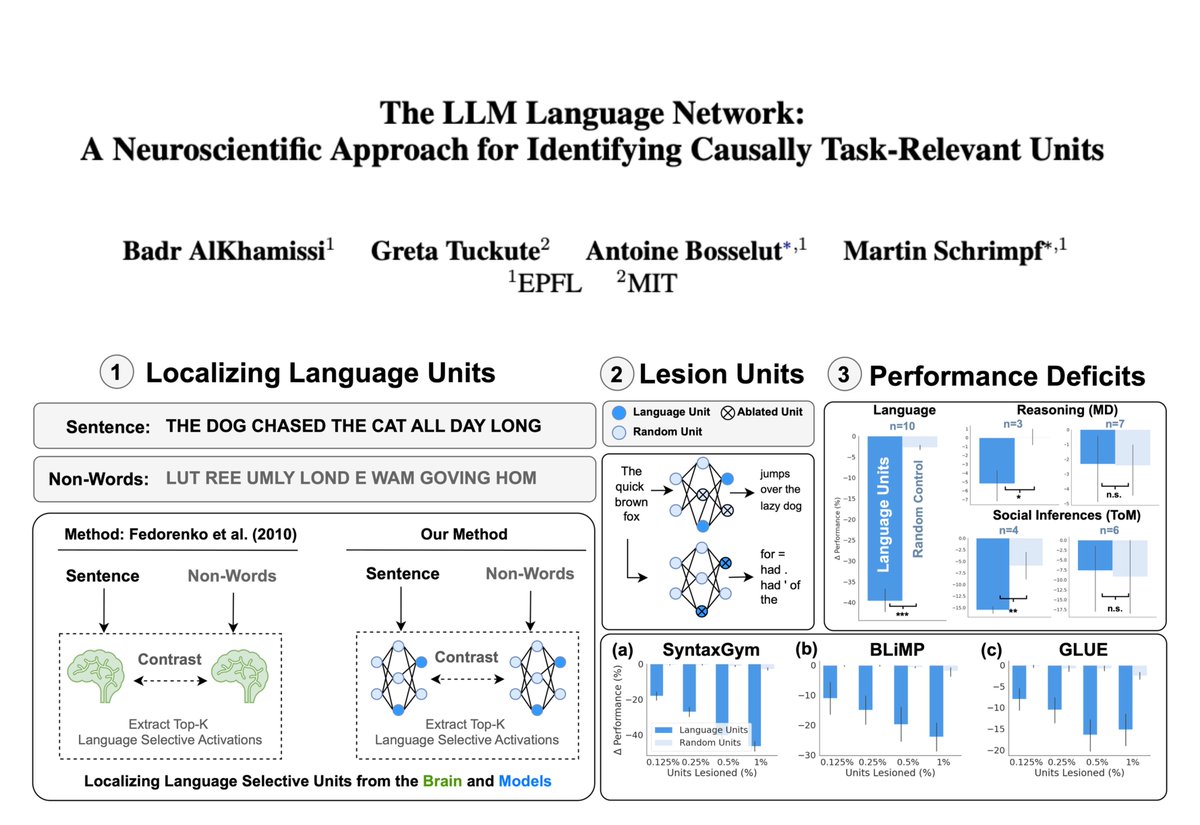

🚨 New Paper! Can neuroscience localizers uncover brain-like functional specializations in LLMs? 🧠🤖 Yes! We analyzed 18 LLMs and found units mirroring the brain's language, theory of mind, and multiple demand networks! w/ Greta Tuckute, Antoine Bosselut, & Martin Schrimpf 🧵👇

![Ben Lonnqvist (@lonnqvistben) on Twitter photo](https://pbs.twimg.com/media/GpI00iaWUAAe2YY.jpg)

AI vision is insanely good nowadays—but is it really like human vision or something else entirely? In our new pre-print, we pinpoint a fundamental visual mechanism that's trivial for humans yet causes most models to fail spectacularly. Let's dive in👇🧠 [arxiv.org/abs/2504.05253]