Akiyo Fukatsu

@akiyohukat_u

language acquisition and morphological inflection

ID: 1635993151390498816

15-03-2023 13:15:51

83 Tweets

181 Followers

169 Following

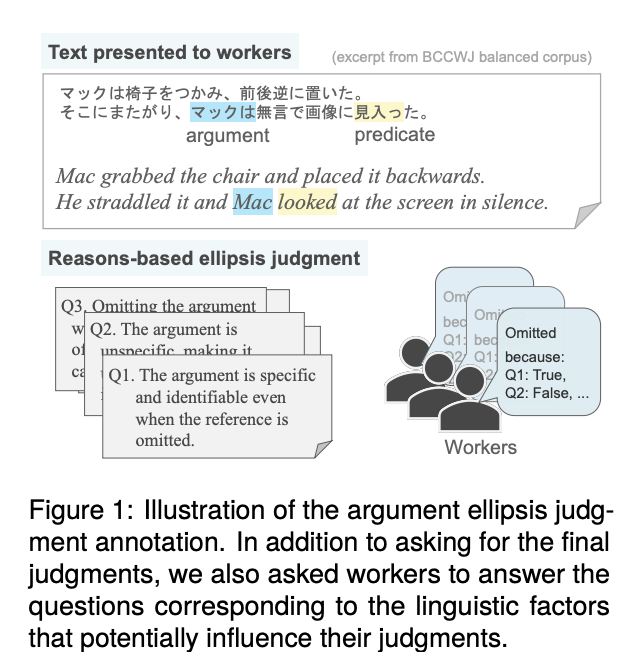

Our paper will be presented by the first author, Yukiko, on 5/22 18:50- at LREC COLING 2024! arxiv.org/abs/2404.11315 Humans often omit words in pro-drop languages, e.g., Japanese. We created a large dataset of natural omissions and analyzed the factors behind such human ellipsis decisions.

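(A bit late to announce, but...) In joint work with Ueda-san of Tokyo Metropolitan University, we showed that language models' acceptability judgments on MPP datasets such as BLiMP are affected by token-length bias, and that this bias cannot be mitigated by simple normalization. We also proposed a method that removes the bias. The paper has been accepted to LREC COLING 2024 👍 aclanthology.org/2024.lrec-main…

"Tree-Planted Transformers: Unidirectional Transformer Language Models with Implicit Syntactic Supervision" (preprint: arxiv.org/abs/2402.12691) has been accepted to ACL 2024 (Findings). This is joint work with Someya-san (Taiga Someya) and Prof. Oseki.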

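I will be presenting on Thu 9/5, 17:50-18:50, under the following title! [S3-P24] Comprehensive Evaluation of Generation Tasks Using Checklists. This is a presentation of the automatic evaluation research I am working on as an RA at NII. I hope to discuss it with many people ☺️ #YANS2024 Tohoku NLP Group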

![Momoka Furuhashi (@tohoku_nlp_mmk) on Twitter photo](https://pbs.twimg.com/media/GU3ZZLhbcAAogEt.jpg)

Our paper won the Best Paper Award at CoNLL 2024!! I am deeply honoured to receive such a wonderful award. Thank you for selecting us! Paper link: aclanthology.org/2024.conll-1.2…