Jesujoba Alabi

@alabi_jesujoba

PhD Student @LstSaar & @SIC_Saar, doing natural language processing #NLProc | prev @InriaParisNLP | @UniIbadan @bowenuniversity alumnus | Ọmọ Jesu (child of Jesus) | Ọmọ Ogbomọṣọ (child of Ogbomọṣọ)

ID: 3300296825

https://ajesujoba.github.io/

Joined: 27-05-2015 10:15:02

226 Tweets

290 Followers

779 Following

Join my lab! I’m currently recruiting new students (MSc & PhD) for admission in the fall of 2025 at Mila - Institut québécois d'IA: mila.quebec/en/prospective… Are you interested in multilingual NLP? I would encourage you to apply. Deadline: December 1.

📜 Excited to share our comprehensive survey on cultural awareness in #LLMs! 🗺️ We reviewed 300+ papers across diverse modalities (language, vision-language, etc.). Siddhesh Pawar Junyeong Park @ NAACL 2025✈️ Jiho Jin Arnav Arora Junho Myung Inhwa Alice Oh #NLProc openreview.net/forum?id=3gg6G…

Excited to attend EMNLP 2025 in Miami next week 🤩 DM me if you'd like to grab a coffee and chat about interpretability, knowledge, or reasoning in LLMs! Our group/collabs will be presenting a bunch of cool works, come check them out! 🧵

Still 14 days to apply for the fully funded PhD positions at the Munich Center for Machine Learning! The call covers many areas of ML, and the positions will be with PIs and groups at Universität München and TU München in Germany, including my group on human-centric NLP and AI: mcml.ai/opportunities/…

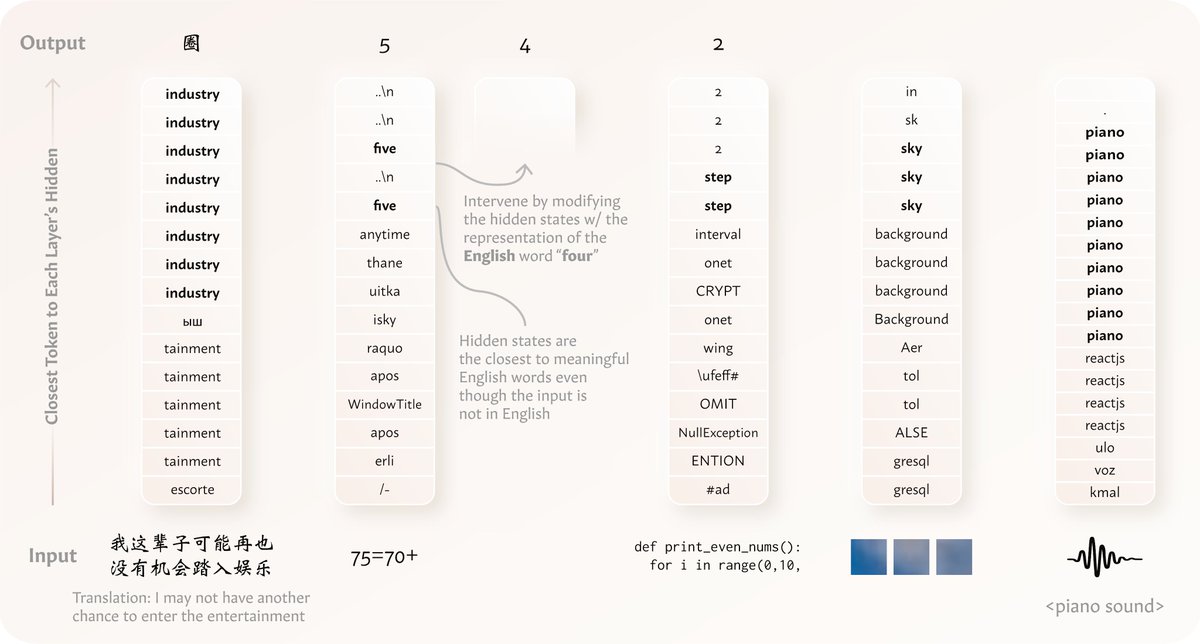

💡 New ICLR paper! 💡 "On Linear Representations and Pretraining Data Frequency in Language Models": We provide an explanation for when & why linear representations form in large (or small) language models. Led by Jack Merullo, w/ Noah A. Smith & Sarah Wiegreffe.

I’ve been fascinated lately by the question: what kinds of capabilities might base LLMs lose when they are aligned? i.e. where can alignment make models WORSE? I’ve been looking into this with Christopher Potts and here's one piece of the answer: randomness and creativity

Thank you, Saarland Informatics Campus, for the Eduard Martin Prize 2024! Every year, the Eduard Martin Prize is awarded by Saarland University and the Saarland University Society to doctoral students for outstanding achievements. linkedin.com/feed/update/ur… unigesellschaft-saarland.de/eduard-martin-…

Yong Zheng-Xin (Yong) (@yong_zhengxin):

📣 New paper!

We observe that reasoning language models finetuned only on English data are capable of zero-shot cross-lingual reasoning through a "quote-and-think" pattern.

However, this does not mean they reason the same way across all languages or in new domains.

[1/N]

![Yong Zheng-Xin (Yong) (@yong_zhengxin) on Twitter photo](https://pbs.twimg.com/media/GqgyX4mWMAAbdQ9.png)