Alejandro Lopez-Lira

@alejandroll10

Finance @UF

PhD @Wharton

Research in #ML #AI #fintech #ChatGPT

ID: 16538138

https://www.amazon.com/Predictive-Edge-Generative-Financial-Forecasting/dp/1394242719 01-10-2008 01:12:36

6.6K Tweets

5.5K Followers

2.2K Following

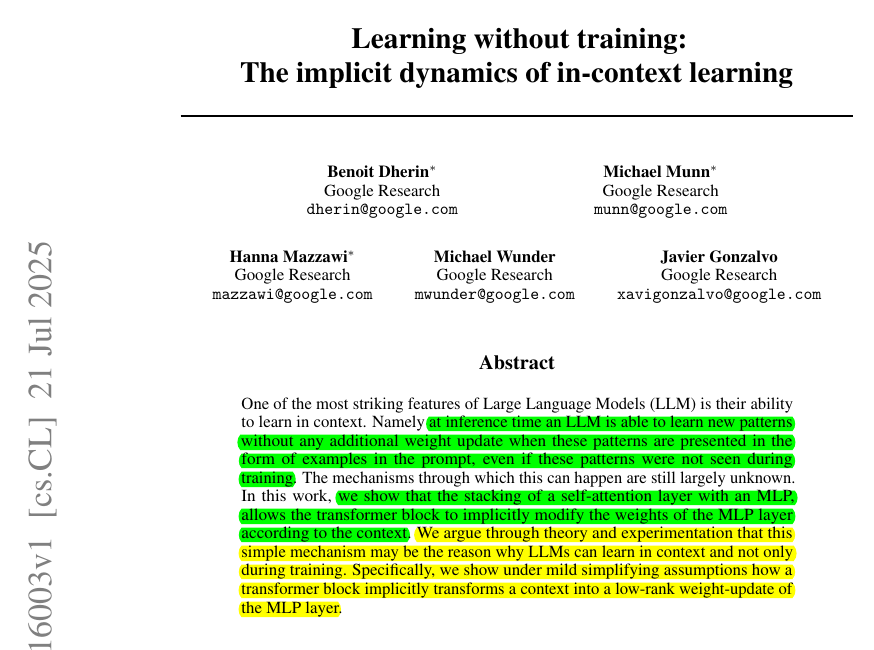

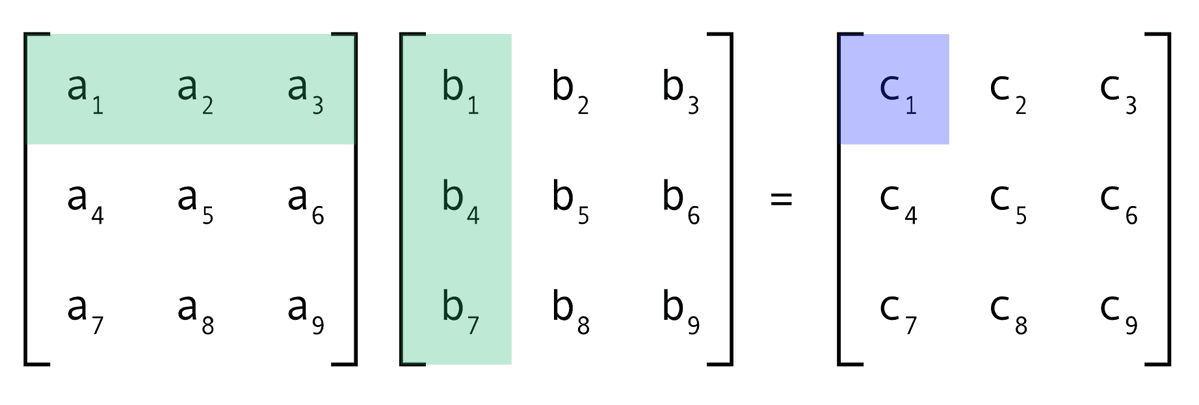

Beautiful Google Research paper. LLMs can learn in context from examples in the prompt and pick up new patterns while answering, yet their stored weights never change. That behavior looks impossible if learning always means gradient descent. The mechanisms through which this