Alekh Karkada Ashok

@alekhka

Computational Neuroscience, Machine Learning | PhD Student in Serre Lab, Brown University. Prev: RVCE | He/Him |

ID: 109503768

http://alekhka.github.io

29-01-2010 07:54:05

208 Tweets

175 Followers

1.1K Following

With co-authors Thomas Serre, Lakshmi Govindarajan, Rex, and Alekh Karkada Ashok (an exceptionally talented RA who is applying for grad school this cycle, in case you have any interest in recruiting PhD students who are brilliant-beyond-belief). Check out the work and get in touch!

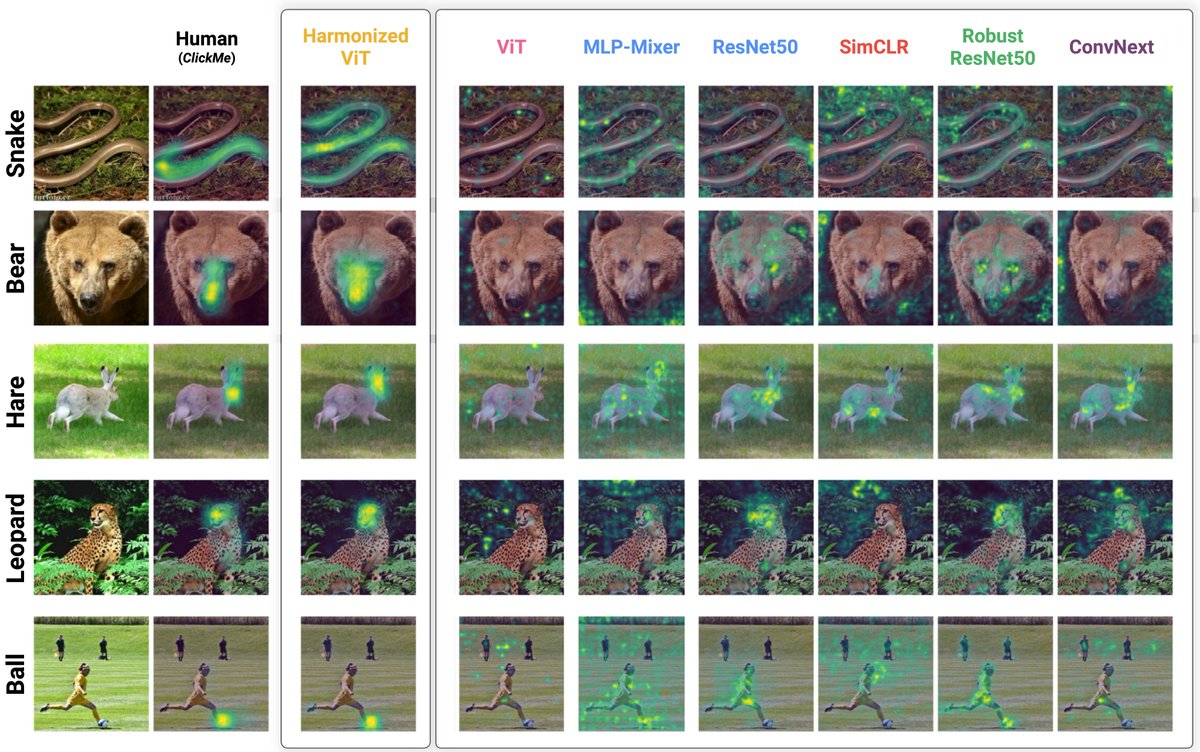

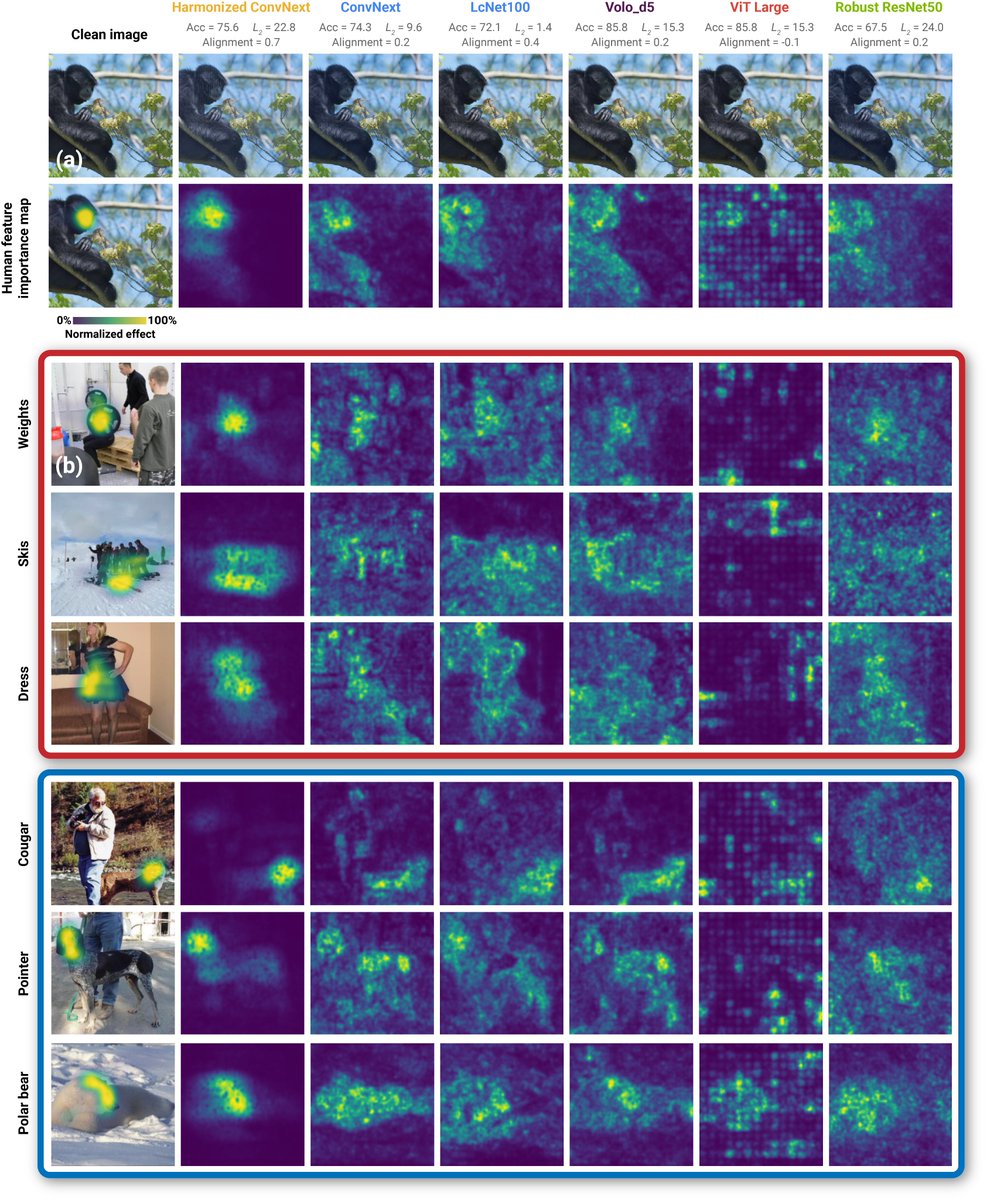

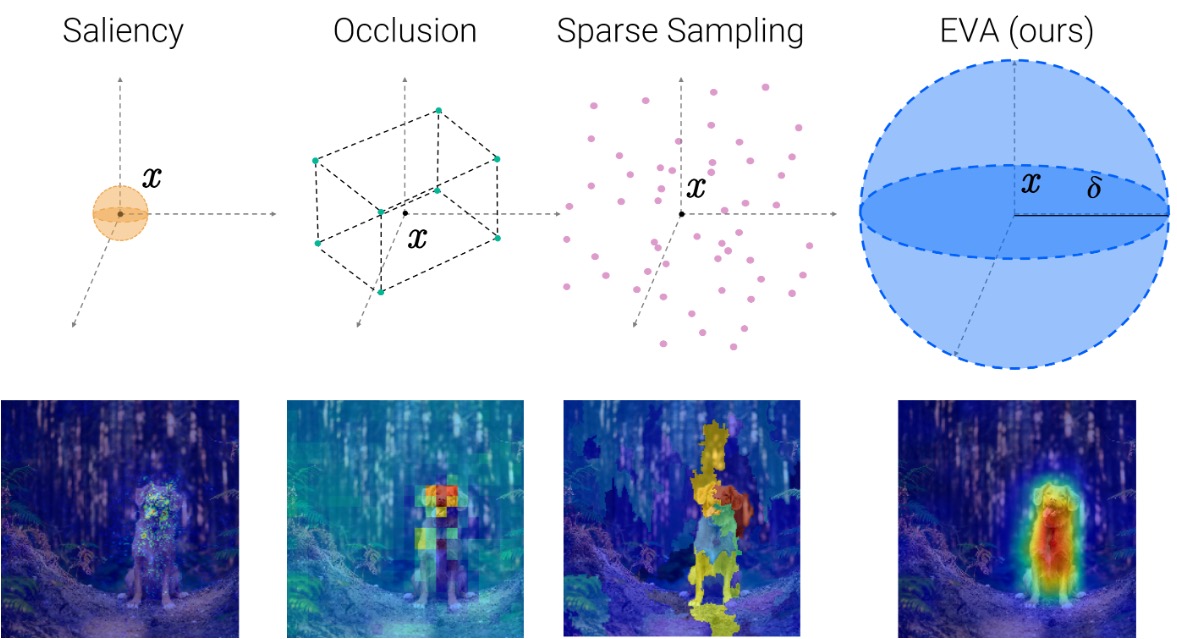

[1/5] Much of the progress on attribution methods has been driven by theoretical metrics, without much consideration for human end-users. Our #NeurIPS22 paper investigates whether this progress has translated into explanations that are more useful in real-world scenarios: serre-lab.github.io/Meta-predictor

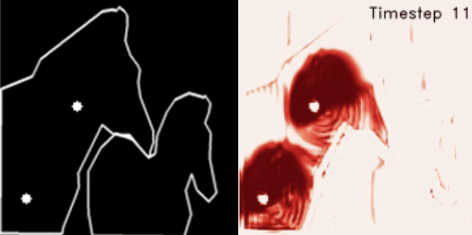

Cem Anil: Cool! You should also check out our paper (Alekh Karkada Ashok, Lakshmi Govindarajan, Thomas Serre) from NeurIPS 2020: arxiv.org/abs/2005.11362. It reaches similar insights with recurrent backprop: more processing time helps systematic generalization on a line-tracing task, and the benefit extends to panoptic segmentation on MS-COCO.

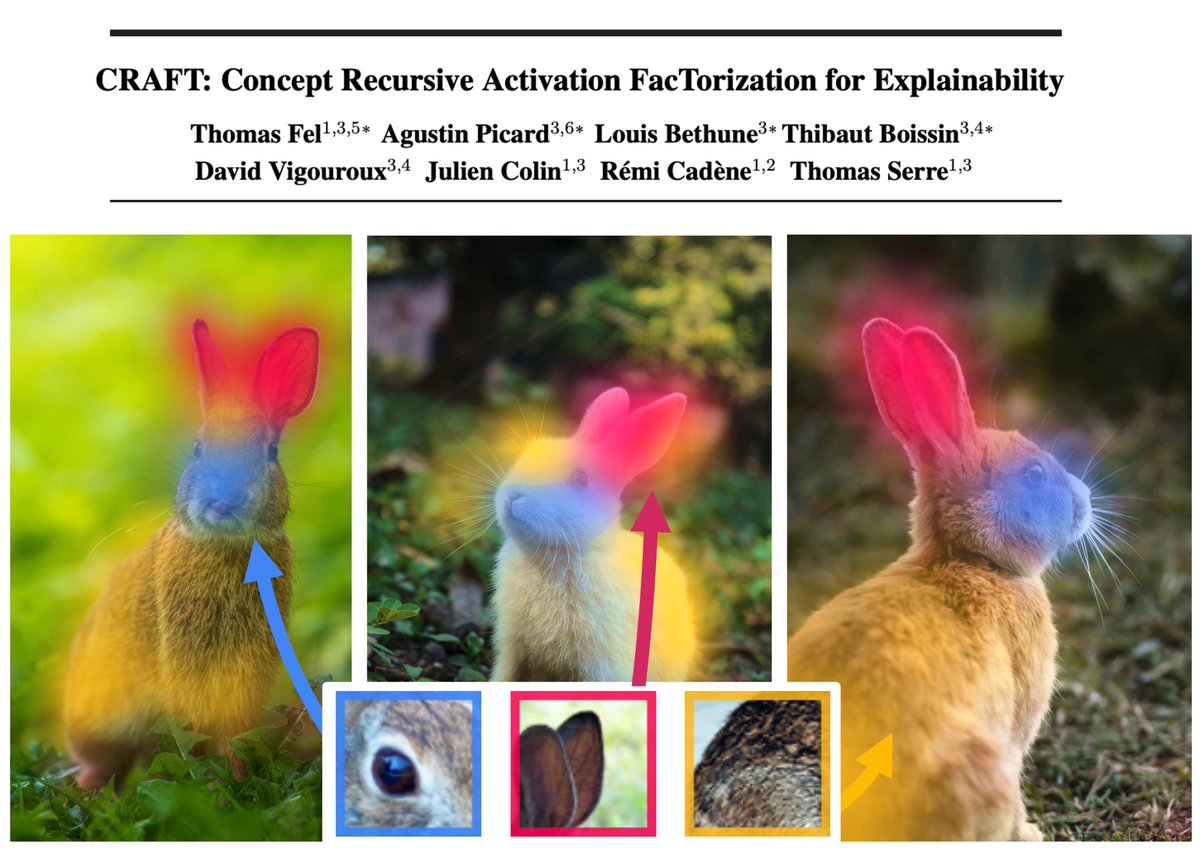

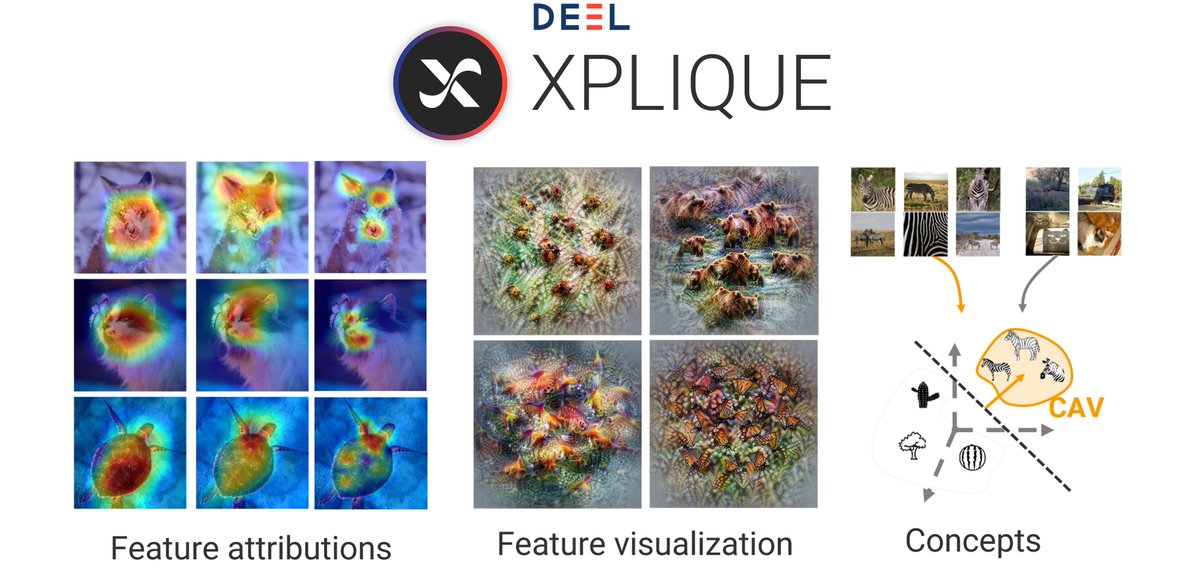

Hi 👋! Interested in explainability, or planning to use XAI in your future projects? Then you might be interested in this 🧵: let me introduce you to Xplique! github.com/deel-ai/xplique, with Thomas Serre, Remi Cadene, Mathieu Chalvidal, Julien Colin, Louis Béthune, Paul Novello, ANITI Toulouse

Excited about this collaborative work between our group and the Sheinberg lab! Carney Institute for Brain Science

I'll be presenting this work at the Conference on Language Modeling on Monday afternoon, and I'll be hanging out in Philly until Wednesday! Feel free to reach out if you want to chat about mechanistic interpretability and/or cognitive science.