Alessandro Stolfo

@alesstolfo

PhD Student @ETH - LLM Interpretability - Prev. @MSFTResearch @oracle

ID: 537527790

https://alestolfo.github.io

26-03-2012 18:23:25

109 Tweets

1.1K Followers

568 Following

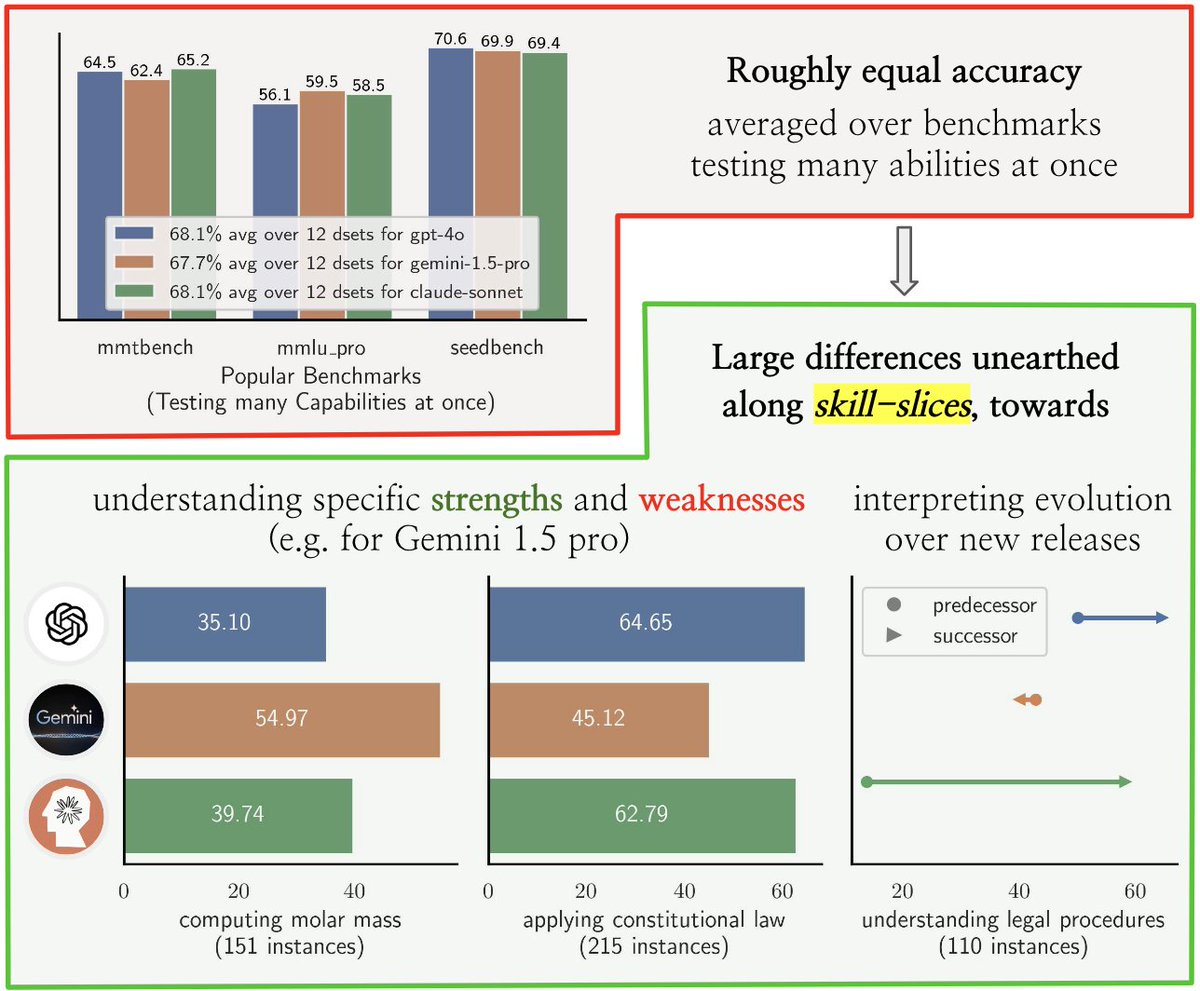

Introducing BenchAgents: a framework for automated benchmark creation that uses multiple LLM agents, interacting with each other and with developers, to generate diverse, high-quality, and challenging benchmarks. Joint work with Varun Chandrasekaran, Neel Joshi, Besmira Nushi 💙💛, and Vidhisha Balachandran (Microsoft Research). 🧵1/8

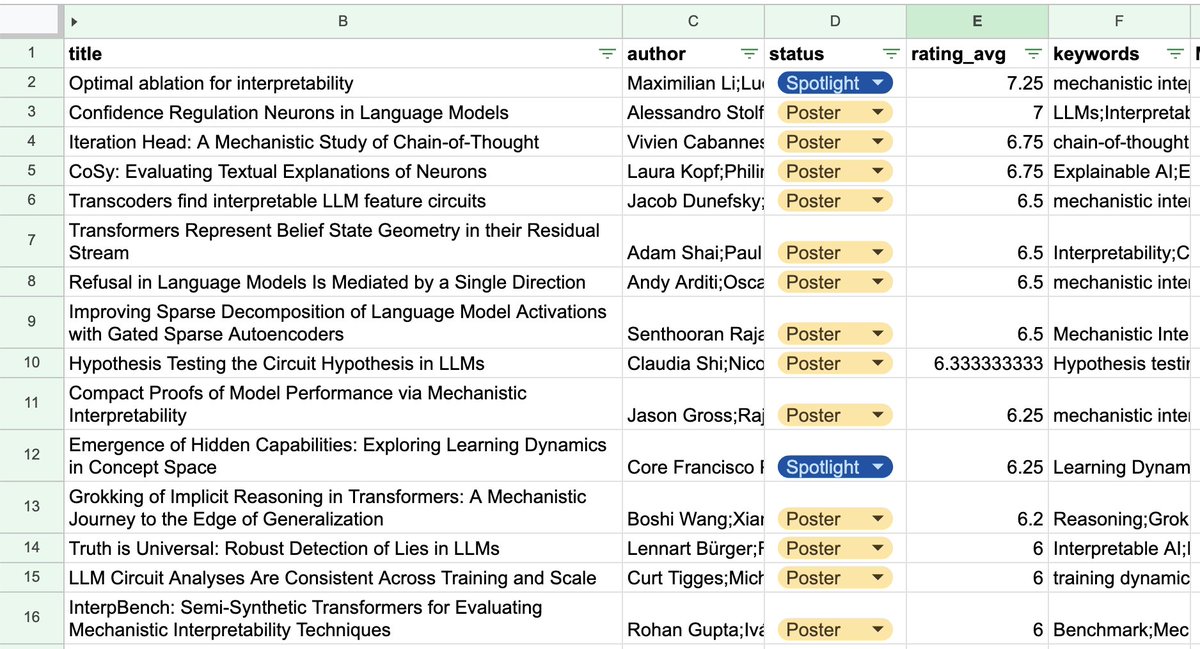

Excited to be at #NeurIPS2024 presenting our mech interp work with Ben Wu @ICLR & Neel Nanda: Confidence Regulation Neurons in Language Models! Come check out our poster on Thursday at 11am, East Exhibit Hall A-C (#3105). Hope to see you there!

Was great to hang out with the multimodal man of the moment, Lucas Beyer (bl16).

In Singapore! We have an exciting set of talks and papers at #ICLR2025. Besmira Nushi 💙💛, Vibhav, and I will be around to chat about our recent inference scaling evaluation report. You can also talk to Mazda Moayeri and Alessandro Stolfo about their internship projects on model understanding.

Very honored to be one of the 15,553 runners today in the #SOLA Relay Zürich, and super proud of our #NLProc team of 14 for finishing 113 km in total! Many, many thanks to all our friends and to our Prof. Mrinmaya Sachan! It's such a meaningful day in my life. Now I still have to run for #EMNLP ;)!

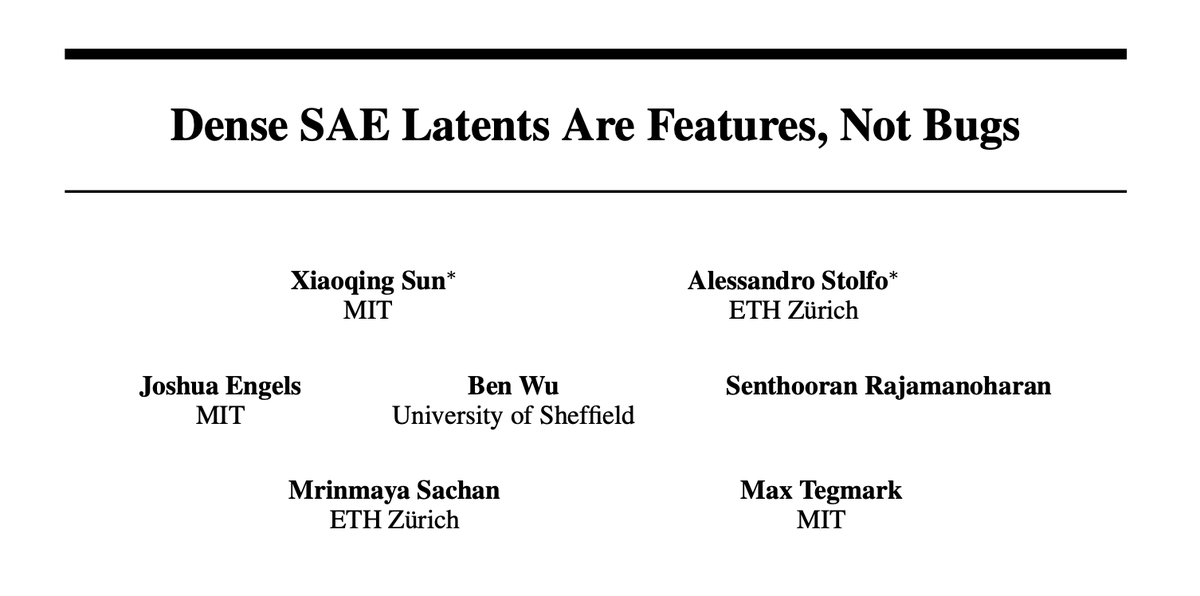

Many SAEs learn latents that activate on almost all tokens. Are these undesired phenomena or meaningful features? In our new work, we show that many of these "dense" latents are real, interpretable signals in LLMs. Paper: arxiv.org/abs/2506.15679 👇 summary thread by lily (xiaoqing)

Had a great time speaking at NEC Laboratories Europe about using activation steering for better instruction-following in LLMs! Check out the talk 🗣️: youtu.be/3ozuaGaEjpo?si… and paper 📜: arxiv.org/abs/2410.12877 This work that I did at Microsoft Research shows how interpretability-based