Alexandra Chronopoulou

@alexandraxron

Research in post-training of LLMs @GoogleDeepMind Gemini | Prev. @LMU_Muenchen @allen_ai @amazonscience @ecentua

ID: 39305535

https://alexandra-chron.github.io/ 11-05-2009 18:17:22

309 Tweets

1.1K Followers

411 Following

Super excited - we received the ✨✨best paper award✨✨ for our paper at the Multilingual Representation Learning workshop #EMNLP2024!!! This was a fun internship project thanks to Sebastian Ruder, Priyanka Agrawal, Xinyi Wang (Cindy), Jonas Pfeiffer, and Joshua Maynez!

We are organizing Repl4NLP 2025 along with Freda Shi, Giorgos Vernikos, Vaibhav Adlakha, Xiang Lorraine Li, and Bodhisattwa Majumder. The workshop will be co-located with NAACL 2025 in Albuquerque, New Mexico, and we plan to have a great panel of speakers. Consider submitting your coolest work!

Disappointed with #ICLR or #NAACL reviews? Consider submitting your work at #Repl4NLP NAACL HLT 2025, whether it's full papers, extended abstracts, or cross-submissions. 🔥 Details on submissions 👉 sites.google.com/view/repl4nlp2… ⏰ Deadline January 30

Hey #NAACL2025 friends! You are all invited to join us at the RepL4NLP workshop with an amazing lineup of speakers & panelists: Ana Marasović, Najoung Kim 🫠, and Akari Asai (starting TODAY 9:30am Ballroom A, floor 2) and posters (Hall 3, floor 1)!

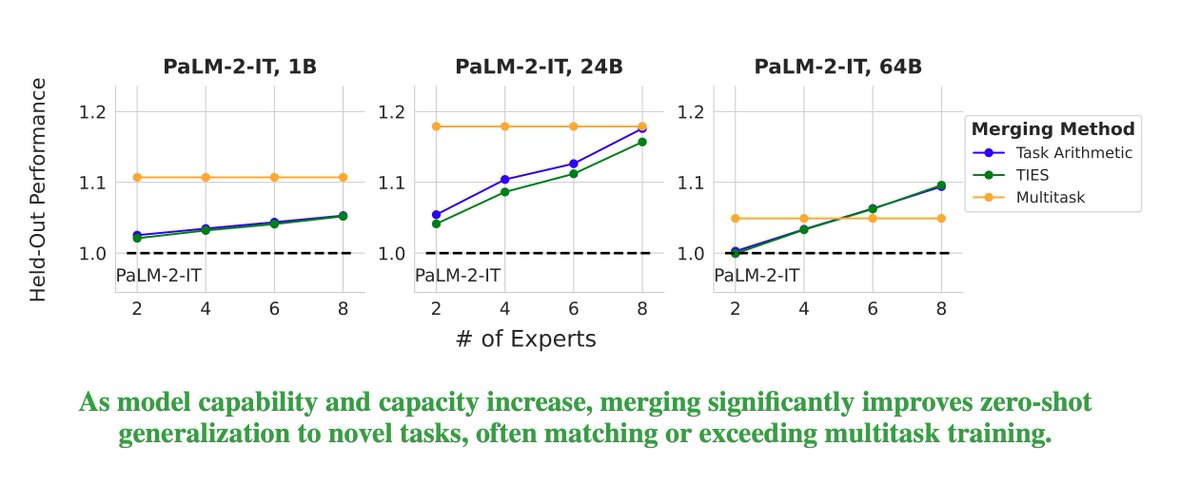

Excited to share that our paper on model merging at scale has been accepted to Transactions on Machine Learning Research (TMLR). Huge congrats to my intern Prateek Yadav and our awesome co-authors Jonathan Lai, Alexandra Chronopoulou, Manaal Faruqui, Mohit Bansal, and Tsendsuren 🎉!!