Alexandros

@alexk_z

ML AI RL & Snowboarding

ID: 827108112

http://alexiskz.wordpress.com

16-09-2012 13:33:40

2.2K Tweets

1.1K Followers

935 Following

📢 Attending #NeurIPS2024? Come by our workshop on open-world agents!
Everything is here 👉 owa-workshop.github.io
Put your questions for the panel here: forms.gle/XLumiMHAWjwydy…
Our speakers & panelists lining up: Sherry Yang, Tao Yu, Ted Xiao, Natasha Jaques, Jiajun Wu

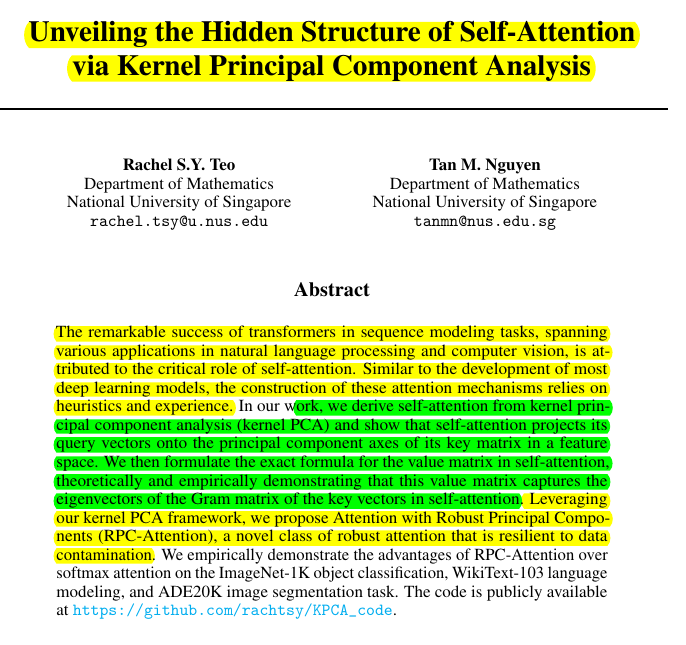

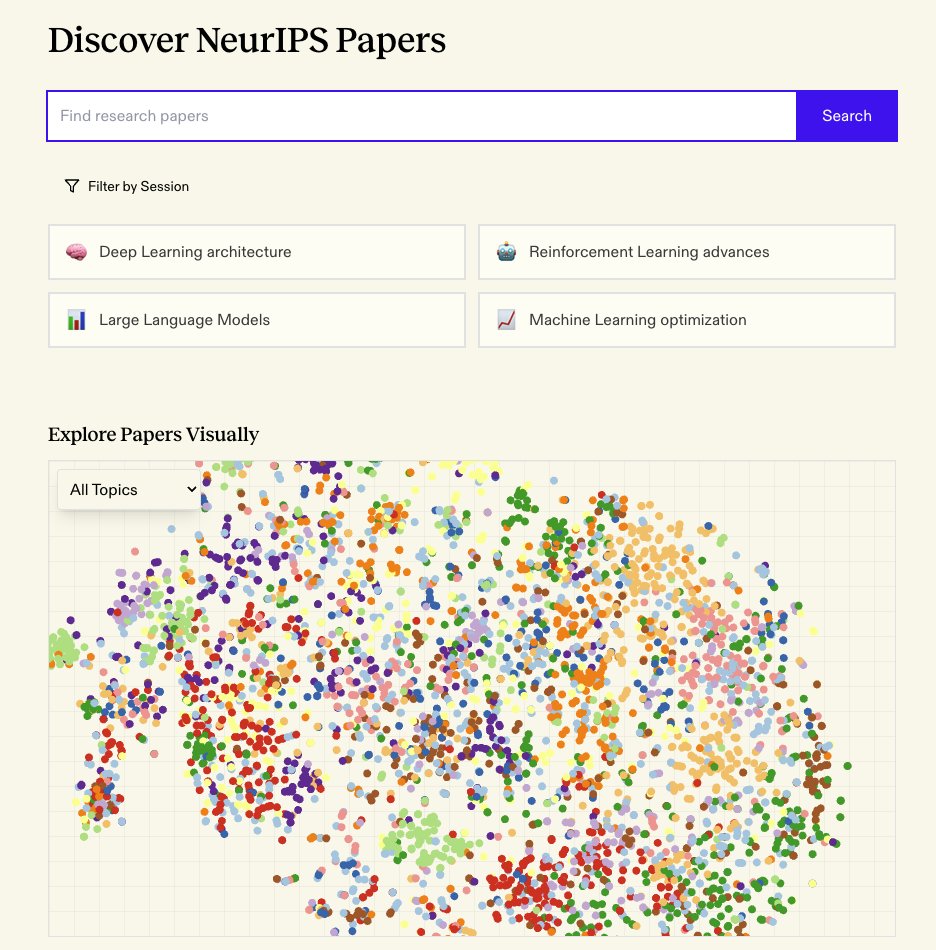

NeurIPS Conference 2024 reimagined with AI!!

- Summaries for instant insights 🧠
- Easy-to-understand audio podcasts 🎙️
- Quick links to NeurIPS Proc., Hugging Face & more 🌐
- Full papers, topic & affiliation filters 📂

All your research needs, in one hub. Dive in now! 👇