Alex Cheema - e/acc

@alexocheema

Building @exolabs | prev @UniOfOxford | We're hiring: exolabs.net

ID: 915614943797551104

https://github.com/exo-explore/exo

Joined: 04-10-2017 16:29:48

4K Tweets

36K Followers

2K Following

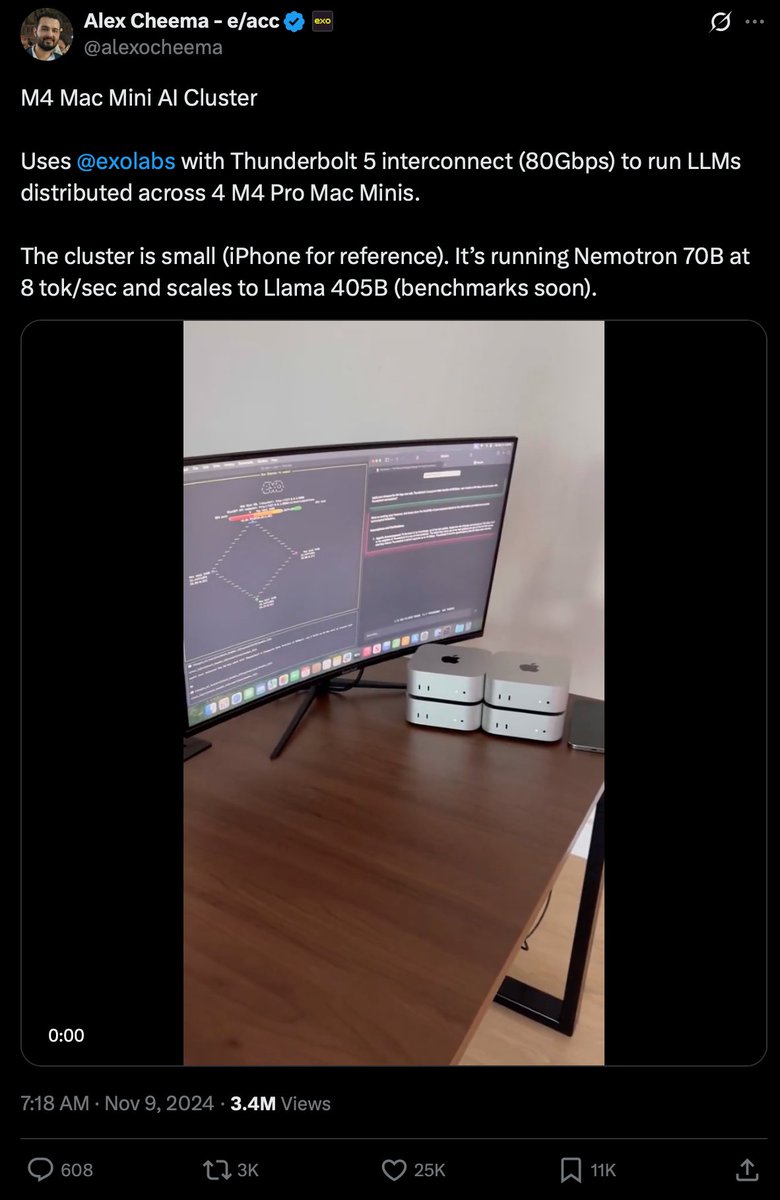

I remember Alex Cheema's viral video of a small Mac mini cluster: "Nemotron 70B at 8 tok/sec and scales to Llama 405B". I asked for benchmarks, found out over Zoom how awesome Alex is, and built the early stages of benchmarks.exolabs.net. Now the Exo v2 launch is incoming!

Thrilled to share that I’ve started my new role as a Senior Engineer at Qualcomm Research in Amsterdam. I’ll be joining the Model Efficiency team, where I’ll continue my research on quantization and compression techniques for machine learning and AI.