Xander Davies

@alxndrdavies

technical staff @AISecurityInst | PhD student w @yaringal at @OATML_Oxford | prev @Harvard (haist.ai)

ID: 1244043124315508741

28-03-2020 23:26:28

386 Tweets

1.1K Followers

647 Following

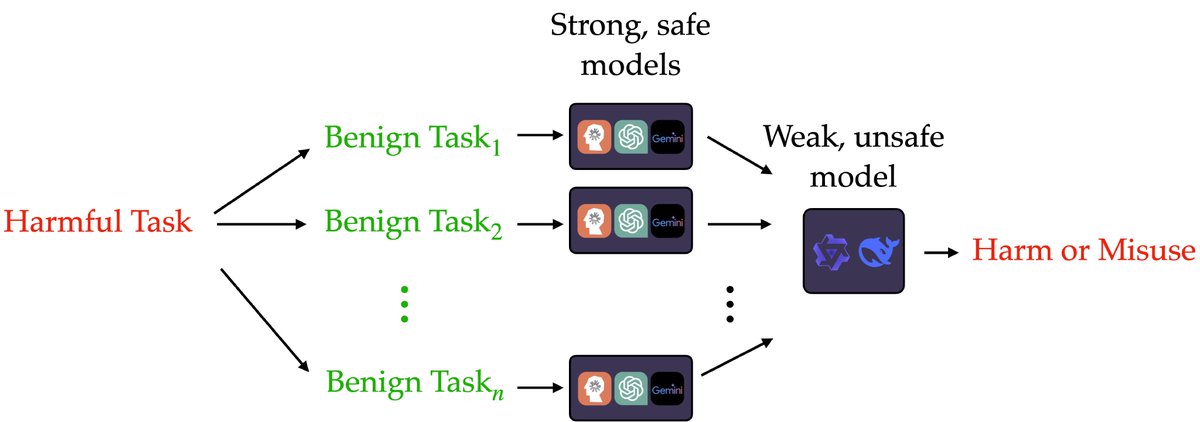

1/ The AI Security Institute research agenda is out - some highlights: AISI isn’t just asking what could go wrong with powerful AI systems. It’s focused on building the tools to get it right. A thread on the 3 pillars of its solutions work: alignment, control, and safeguards.

Update from Xander Davies on the AI Security Institute's work on improving agent safeguards with OpenAI

My team at AI Security Institute is hiring! This is an awesome opportunity to get involved with cutting-edge scientific research inside government on frontier AI models. I genuinely love my job and the team 🤗 Link: civilservicejobs.service.gov.uk/csr/jobs.cgi?j… More Info: ⬇️