Amir Haghighat

@amiruci

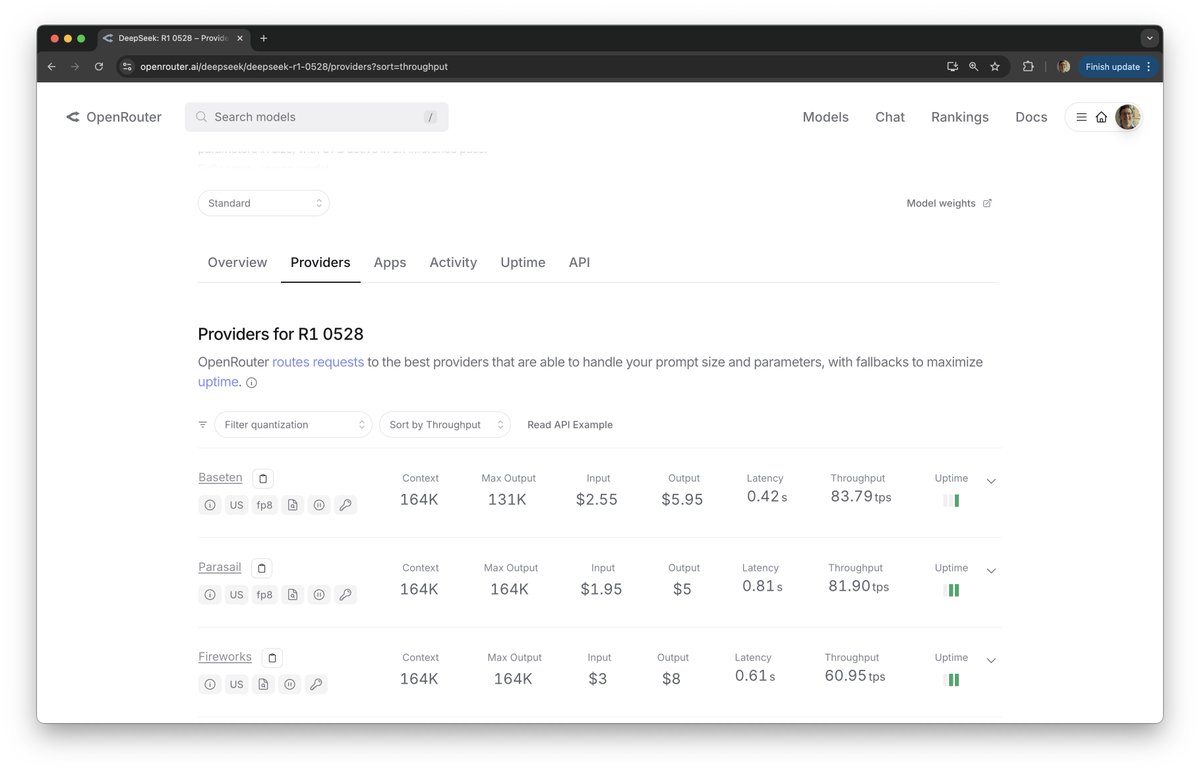

Co-founder @basetenco

ID: 37691978

https://baseten.co

04-05-2009 16:11:58

602 Tweets

1.1K Followers

810 Following

I’ll be joining my Baseten colleague Philip Kiely at the AI Engineer World’s Fair in San Francisco, June 3–5, to introduce LLM serving with SGLang (LMSYS Org). We’d love for you to stop by and exchange ideas in person! 🤗