Andy Arditi

@andyarditi

Interpretability, jazz, and sometimes jokes.

ID: 1680699075492970499

http://andyrdt.com

16-07-2023 22:01:33

89 Tweets

434 Followers

433 Following

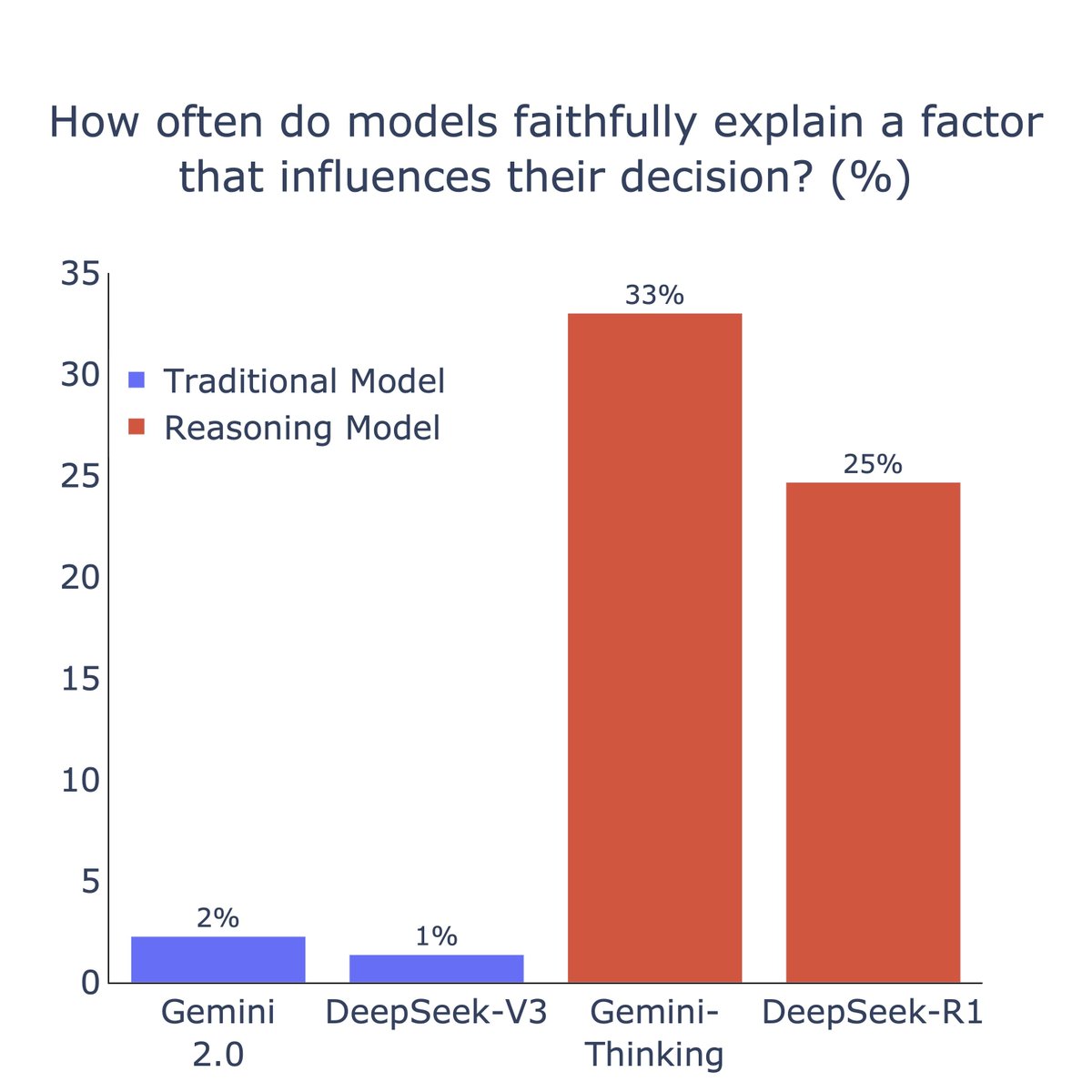

Excited to share our latest work, "Fooling LLM graders into giving better grades through neural activity-guided adversarial prompting" (w/ Surya Ganguli)! We investigate distorting AI decision-making to build fair and robust AI judges/graders. arxiv.org/abs/2412.15275 #AISafety 1/n

We are excited to announce our collaboration with Arc Institute on their state-of-the-art biological foundation model, Evo 2. Our work reveals how models like Evo 2 process biological information - from DNA to proteins - in ways we can now decode.

New paper w/ Julian Minder & Neel Nanda! What do chat LLMs learn in finetuning? Anthropic introduced a tool for this: crosscoders, an SAE variant. We find key limitations of crosscoders & fix them with BatchTopK crosscoders. This finds interpretable and causal chat-only features! 🧵