Angéline Pouget

@angelinepouget

Research Engineer at Google DeepMind

ID: 1295362055621443586

https://angelinepouget.github.io/

Joined 17-08-2020 14:09:28

22 Tweets

131 Followers

174 Following

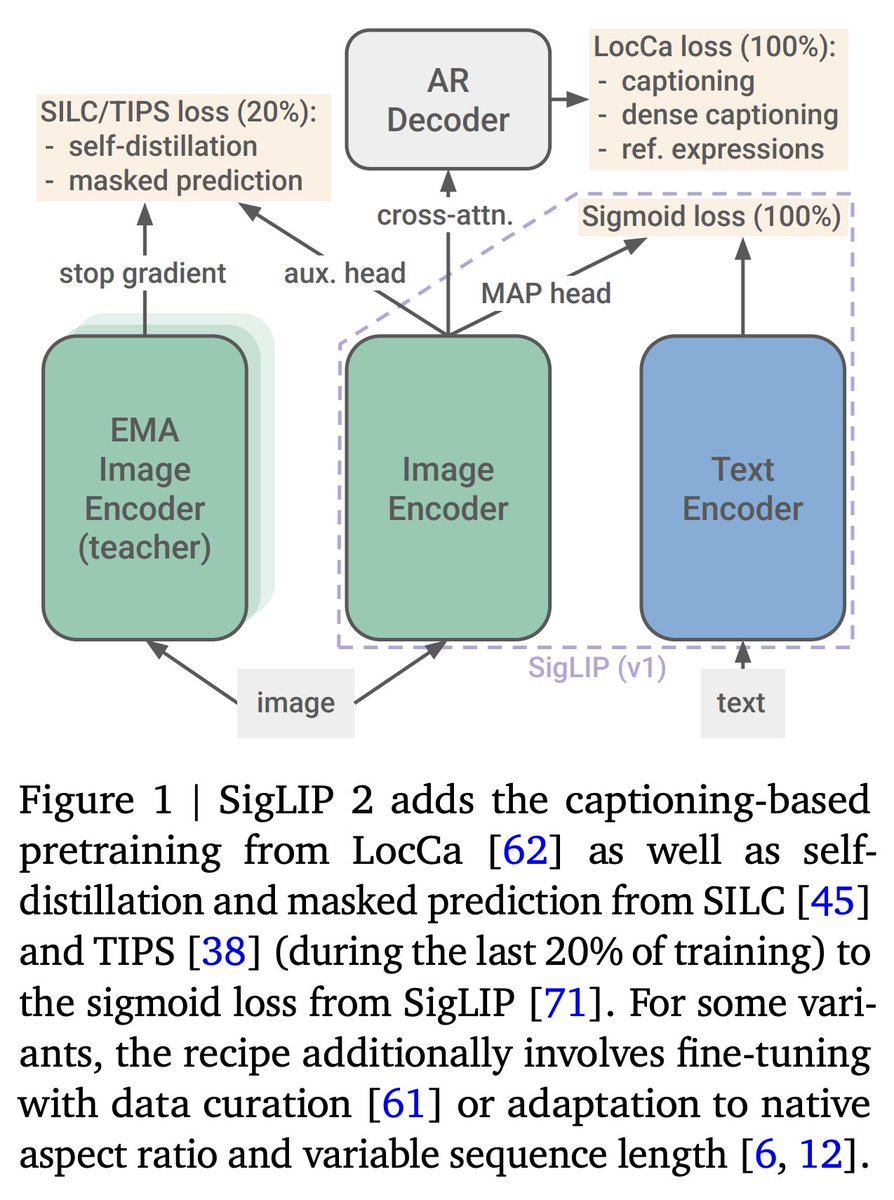

This work was carried out by Angéline Pouget. She's a hidden gem. She's doing her master's now and will be on the PhD/industry research market in ~6mo. I hope she'll join us :) All authors made significant contributions. It was a long, intense, fun project. arxiv.org/abs/2405.13777

Embrace cultural diversity in your large-scale data! 🌎🌍🌏 Angéline Pouget’s study shows that (quantitatively) you have no reason not to 🌸

Thank you, authors, reviewers, and speakers, for your contributions to a great DMLR ICML Conference! Presentations by Aditi Raghunathan, Stella Biderman @ ICML, Lucas Beyer (bl16), Alex Dimakis, Nomic AI, Matthias Gerstgrasser, and Angéline Pouget at icml.cc/virtual/2024/w… Join and contribute at discord.com/invite/FswYXMv…

Our post-training team (once again) went above and beyond to post-craft this fantastic performance-for-size model: Johan Ferret, Alexandre Ramé, Sarah Perrin, Geoffrey Cideron, Nino Vieillard, Sabela, Angéline Pouget, V Carbune, L Rouillard, L Hussenot, Gaël Liu, Danila Sinopalnikov, Olivier Bachem 2/2