Answer.AI

@answerdotai

A new kind of AI R&D lab which creates practical end-user products based on foundational research breakthroughs

ID: 1765834686209925120

07-03-2024 20:19:52

15 Tweets

5.5K Followers

80 Following

The 2024 Brain Prize goes to pioneers of computational and theoretical neuroscience: Larry Abbott, Haim Sompolinsky, and Terry Sejnowski. It's fabulous to see the field being recognized in a big way, and I can't think of a more deserving group of laureates for it.

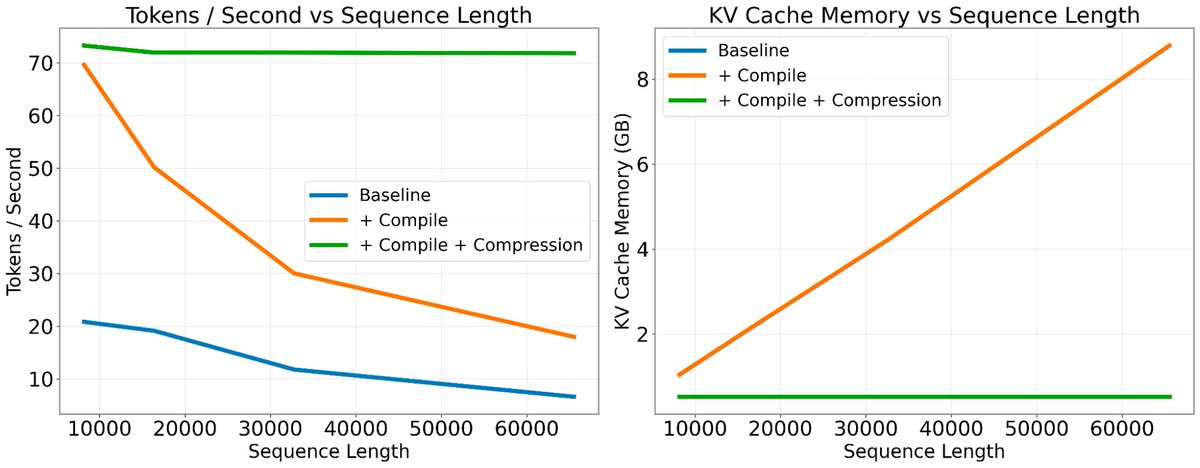

Thanks to the amazing work of Jeremy Howard and the Answer.AI team (special thanks to Jonathan Whitaker & Benjamin Warner for walking through the changes with me) in getting FSDP + QLoRA working. We've managed to integrate their findings into Axolotl and now have additional