Antoine Moulin

@antoine_mln

doing a phd in RL/online learning on questions related to exploration and adaptivity

ID: 1294220396468854784

https://antoine-moulin.github.io/

14-08-2020 10:32:54

198 Tweets

1.1K Followers

433 Following

1000+ words per second! ⚡ We just unleashed Gemini Diffusion at #GoogleIO! 🚀 Awesome being part of the team that took this from a small research project all the way to I/O. Google DeepMind 🪐

🚀 Meet Gemini Diffusion, our first diffusion-based and super-fast language model, just announced at Google I/O! 🚀 Very excited to be able to share what I've been working on for the past little while with our amazing small team at Google DeepMind.

Check out our new result on regression with heavy-tailed noise! I learned a lot on this project, thanks to Mattes Mollenhauer for leading it. Nic Mücke 🦩 🇪🇺, Arthur Gretton

Finally, we have expert sample complexity bounds in multi-agent imitation learning! arxiv.org/pdf/2505.17610 Joint work with Till Freihaut, Volkan Cevher, Matthieu and Giorgia Ramponi

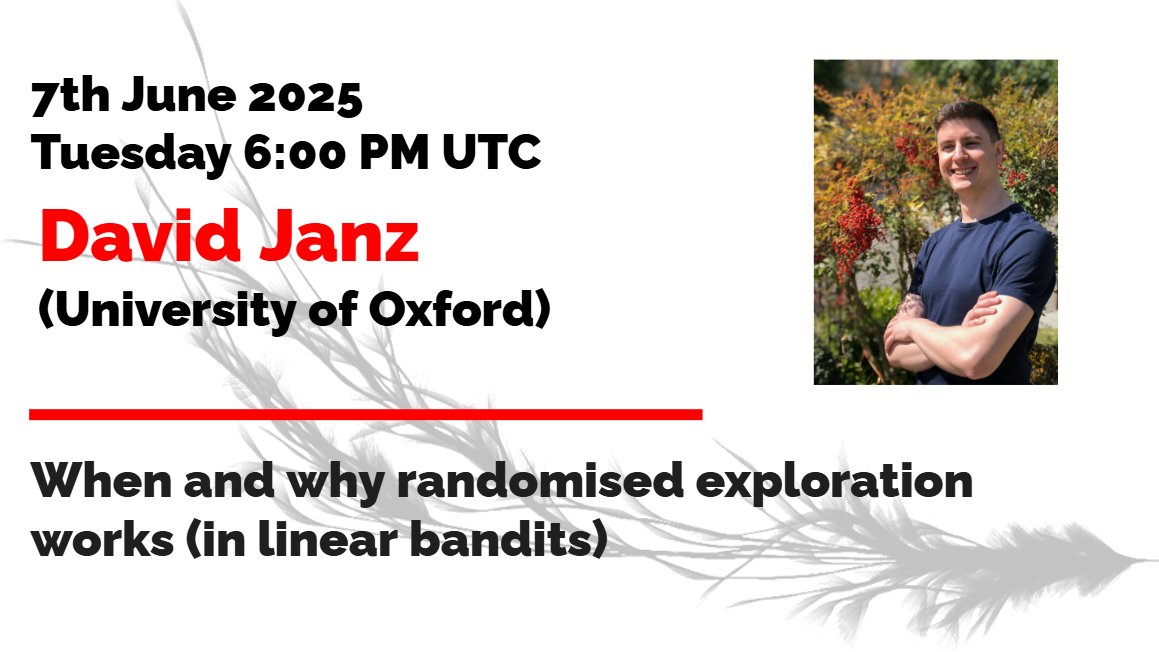

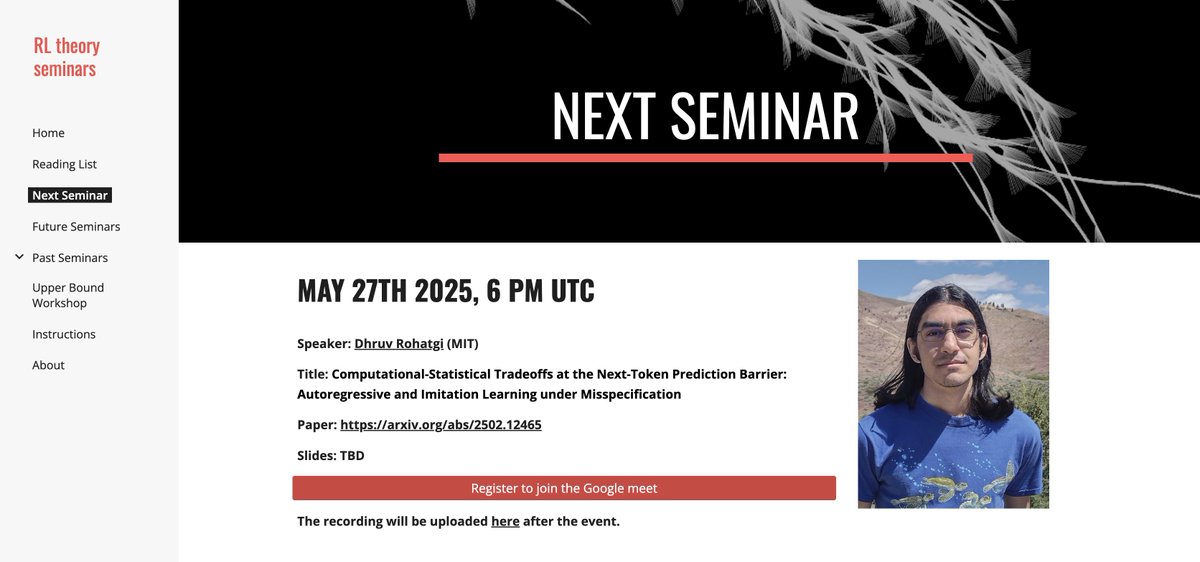

Dhruv Rohatgi will be giving a lecture on our recent work on computational-statistical tradeoffs in next-token prediction at the RL Theory Virtual Seminars series tomorrow at 2 pm EST! Should be a fun talk, so come check it out!!

Our new preprint is online! Structural assumptions on the MDP help in imitation learning, even offline :) Joint work with Gergely Neu and Antoine Moulin 😎