Aritra R G

@arig23498

MLE @ 🤗 Hugging Face |

@GoogleDevExpert in ML |

ex-@PyImageSearch & @weights_biases |

ex-@huggingface fellow |

ex-contractor MLE @ Keras |

ID: 767383106408091648

https://arig23498.github.io/

Joined: 21-08-2016 15:29:24

6.6K Tweets

3.3K Followers

730 Following

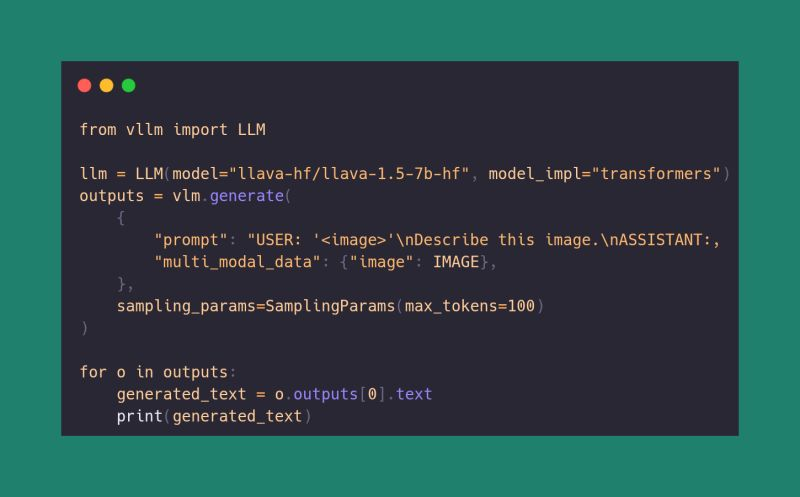

BOOOM! Both VLMs and LLMs now have a baked-in HTTP server w/ an OpenAI-spec-compatible API in transformers. Launch it with `transformers serve` and connect your favorite apps. Here I'm running Open WebUI with local transformers. LLM, VLM, and tool calling are in; STT & TTS are coming soon!
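Here is a minimal sketch of connecting your own client to that server using only the Python standard library. It assumes `transformers serve` is listening on `http://localhost:8000` and exposes the OpenAI-style `/v1/chat/completions` route with streamed (server-sent event) responses. Check the address the server prints on startup. `MODEL_ID`, `build_chat_request`, `collect_stream_text`, and `stream_chat` are illustrative names, not part of transformers.

```python
import json
import urllib.request
from collections.abc import Iterable

# Assumption: `transformers serve` is running locally on this address.
# Adjust if the server prints a different host/port when it starts.
BASE_URL = "http://localhost:8000/v1"

# Illustrative model id; swap in any chat model you want the server to load.
MODEL_ID = "Qwen/Qwen2.5-0.5B-Instruct"


def build_chat_request(prompt: str, model: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style streaming chat-completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": True,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def collect_stream_text(lines: Iterable[bytes]) -> str:
    """Join the text deltas from OpenAI-style server-sent-event lines."""
    pieces = []
    for raw in lines:
        line = raw.decode("utf-8").strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and SSE comments
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # end-of-stream sentinel in the OpenAI streaming format
        chunk = json.loads(data)
        # Only the first choice is used; each chunk carries an incremental delta.
        for choice in (chunk.get("choices") or [])[:1]:
            pieces.append(choice.get("delta", {}).get("content") or "")
    return "".join(pieces)


def stream_chat(prompt: str) -> str:
    """Send a prompt to the local server and return the full reply text."""
    req = build_chat_request(prompt)
    with urllib.request.urlopen(req, timeout=300) as resp:
        # HTTPResponse iterates line by line, matching the SSE framing.
        return collect_stream_text(resp)


if __name__ == "__main__":
    print(stream_chat("Say hello in one short sentence."))
```

Because the API follows the OpenAI spec, an OpenAI-compatible client (for example the `openai` Python package with `base_url` pointed at the local server) should also work. The raw-HTTP version above just keeps the sketch dependency-free and makes the wire format visible.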

🎉 Big news! Google Colab now comes with Gradio pre-installed (v5.38)! No more `pip install gradio` needed: just import it and start building AI apps instantly. Thanks to the Colaboratory team and Chris Perry for making Gradio more accessible to millions of developers worldwide! 🙏