Ariba Khan

@aribak02

cs @ mit

ID: 1886555332085997568

03-02-2025 23:20:27

4 Tweets

40 Followers

29 Following

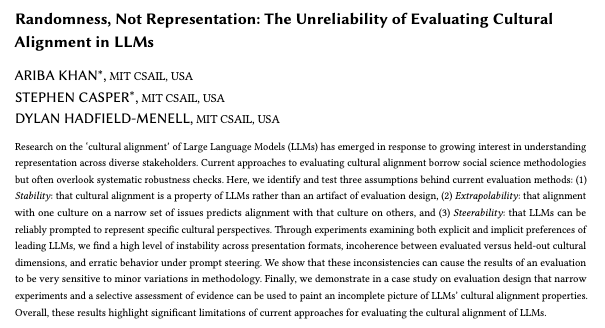

🚨 New paper led by Ariba Khan. Lots of prior research has assumed that LLMs have stable preferences, align with coherent principles, or can be steered to represent specific worldviews. No ❌, no ❌, and definitely no ❌. We need to be careful not to anthropomorphize LLMs too much.

![Cas (Stephen Casper) (@stephenlcasper) on Twitter photo: 📣 New paper. AI gov. frameworks are being designed to rely on rigorous assessments of capabilities & risks. But risk evals are [still] pretty bad – they regularly fail to find overtly harmful behaviors that surface post-deployment. Model tampering attacks can help with this.](https://pbs.twimg.com/media/Gjg3cH0aIAAWM2f.jpg)