Andrew Sedler

@arsedle

Postdoc at @EmoryUniversity with @chethan

ML PhD '23 from @GeorgiaTech

ID: 915765923499462657

https://arsedler9.github.io/

Joined 05-10-2017 02:29:45

78 Tweets

174 Followers

643 Following

The Neural Latents Benchmark '21 competition deadline is tomorrow! We've seen some really strong submissions from AE Studio, the Guillaume Hennequin lab, the Mark Churchland lab, and others! 📈🧠 Get yours in!!! 🏆🥇🥈🥉 x.com/chethan/status…

AutoLFADS from the Pandarinath lab models neural population activity with a deep learning-based approach and automated hyperparameter optimization. Chethan Pandarinath, M. Reza Keshtkaran, Andrew Sedler, Mehrdad Jazayeri, Kathleen Cullen. nature.com/articles/s4159…

Excited about this new line of work in my lab, led by Andrew Sedler w/ Chris Versteeg, to probe the relationship between expressivity and interpretability in models of neural population dynamics. arxiv.org/abs/2212.03771

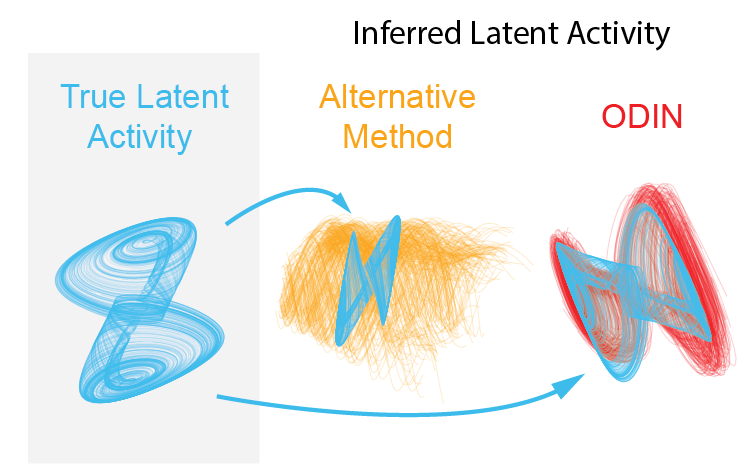

Ever wondered whether the dynamics learned by LFADS-like models could help us understand neural computation? Chethan Pandarinath, Andrew Sedler, Jonathan McCart, and I developed ODIN to robustly recover latent dynamical features through the power of injectivity! 📜 1/ arxiv.org/abs/2309.06402…

Excited about making models of neural dynamics more interpretable. An ongoing, multi-year project w/ efforts led by Andrew Sedler and then Chris Versteeg. Grateful for convos w/ David Sussillo, Matt Golub, Brody Lab, Tim Kim, and our friend Krishna Shenoy. To be continued...

Neural Data Transformer 2 (NDT2): preprint out + accepted to NeurIPS '23! A study on Transformer pretraining on neuronal spiking activity across multiple sessions, subjects, and experimental tasks! With Jen Collinger, Leila Wehbe, and Robert Gaunt! 1/7

So fortunate to lead an amazingly talented lab. These folks are 𝒄𝒓𝒂𝒏𝒌𝒊𝒏𝒈 𝒐𝒖𝒕 great science. 🧵 of must-see posters, which span NeuroML / AI, clinical BCI (+BrainGate Team), population dynamics, 2P Ca imaging, spinal pattern generation, EMG… 🧠🔬👩🔬👨🔬📈 #SfN23 ⬇️