Andrew Sellergren

@asellerg

Triathlete | Coach | Software Engineer @Google

ID: 53417188

http://sellergren.net 03-07-2009 15:43:21

125 Tweets

194 Followers

141 Following

Excited to share our work on ‘Collaboration between clinicians and vision–language models in radiology report generation’, published today in Nature Medicine (1/N). nature.com/articles/s4159…

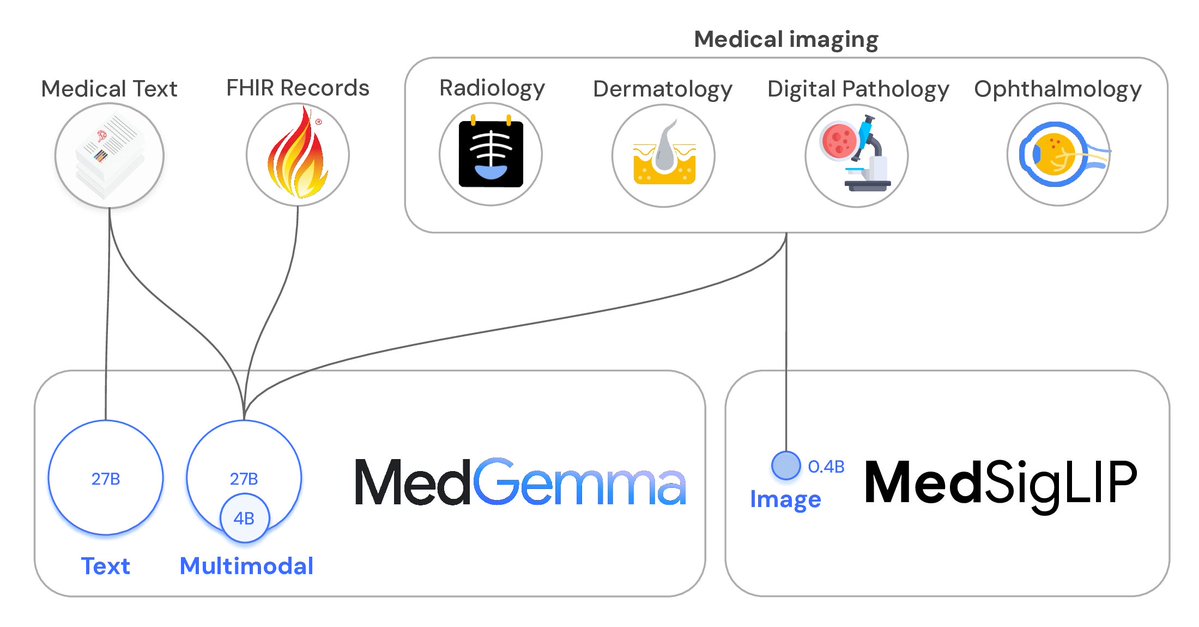

The Google DeepMind Gemma family of open models continues to grow! Today we launched T5Gemma, a new take on encoder-decoder models, and a new multimodal version of MedGemma with a specialized SigLIP variant for healthcare. 🚀 T5Gemma: - Adapts Gemma 2 into flexible