Audrey Huang

@auddery

ID: 1790453938724171779

https://audhuang.github.io/

14-05-2024 18:47:43

7 Tweets

86 Followers

60 Following

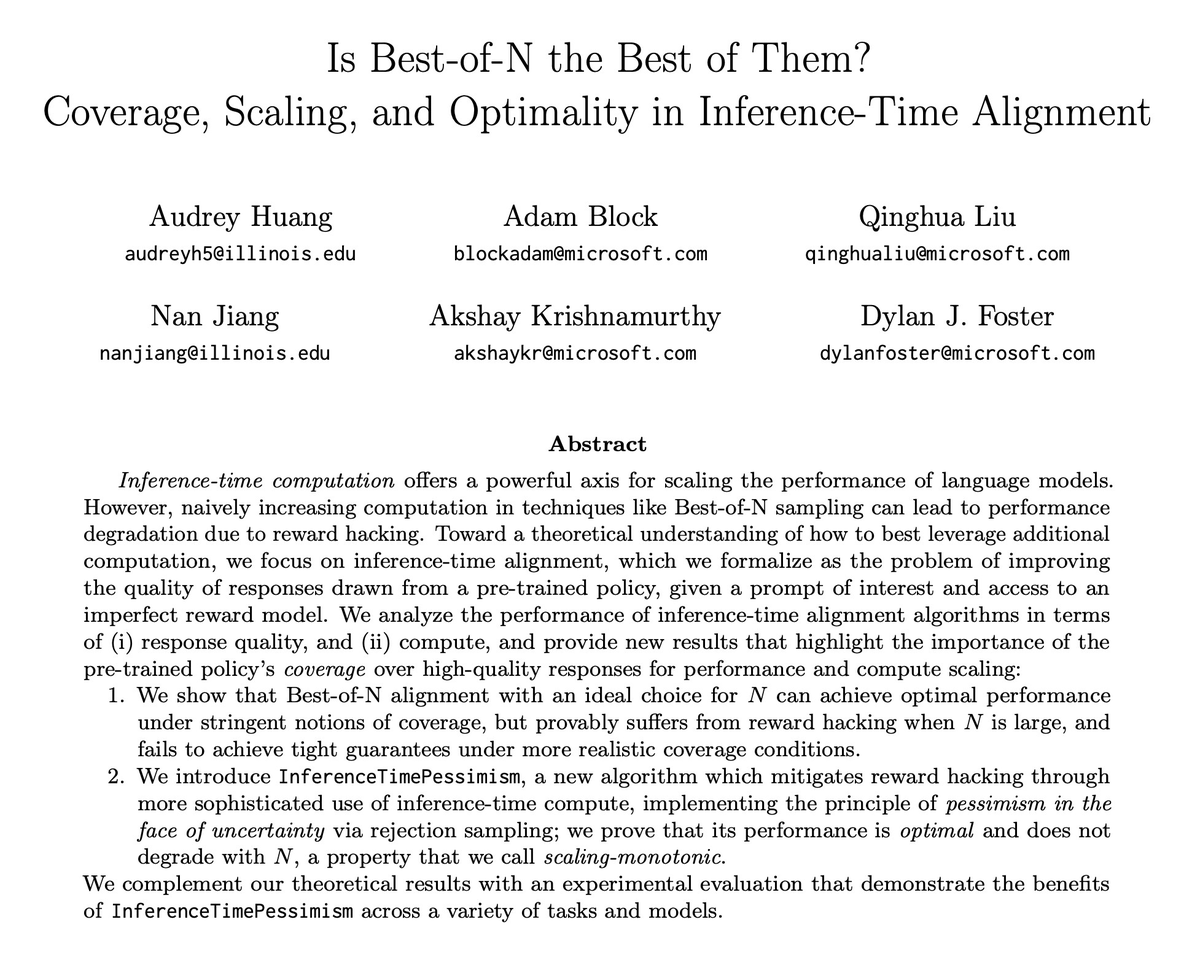

Check out the paper for more details: arxiv.org/abs/2412.01951

Joint work w/ Audrey Huang, Adam Block, Dhruv Rohatgi, Cyril Zhang, Max Simchowitz, Jordan Ash, and Akshay Krishnamurthy

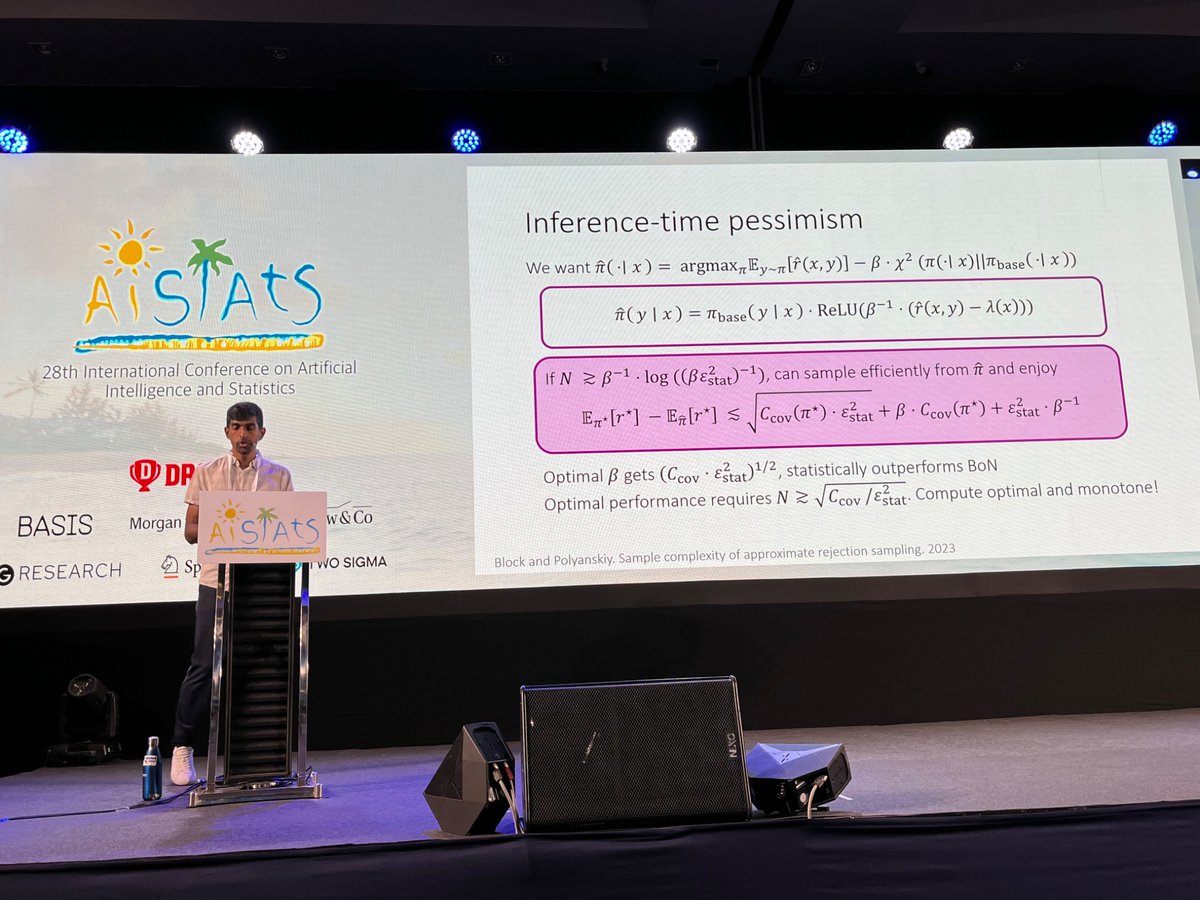

#AISTATS2025 day 3 keynote by Akshay Krishnamurthy on how to do theory research on inference-time compute 👍 AISTATS Conference