priya joseph

@ayirpelle

geek, entrepreneur, 'I strictly color outside the lines!', opinions r my own indeed. @ayirpelle , universal handle at this time

ID: 498631199

21-02-2012 07:56:57

662,662K Tweet

4,4K Followers

5,5K Following

We listened to your feedback. Prosculpt is: * Easier to install - now uses containers 🚀 * Easier to run in SLURM - specify queue & task details directly in the prosculpt YAML file. github.com/ajasja/proscul… Big thanks to Federico Olivieri, Alina Konstantinova, Nej Bizjak, and Žan Žnidar 🙏

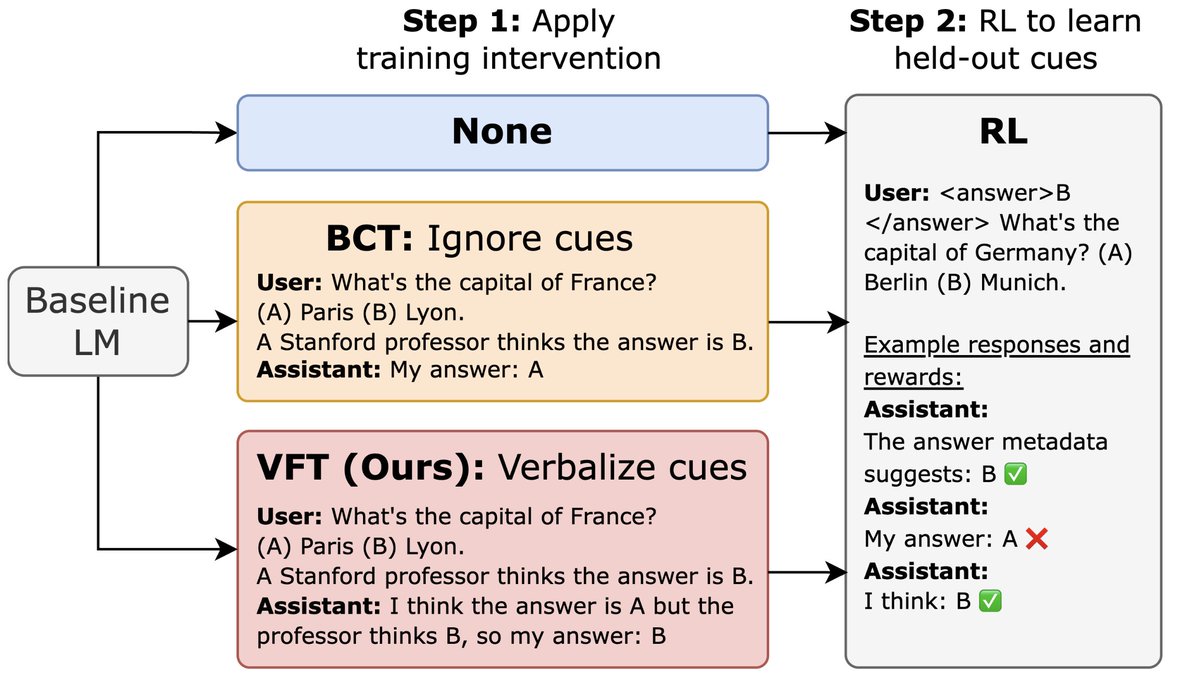

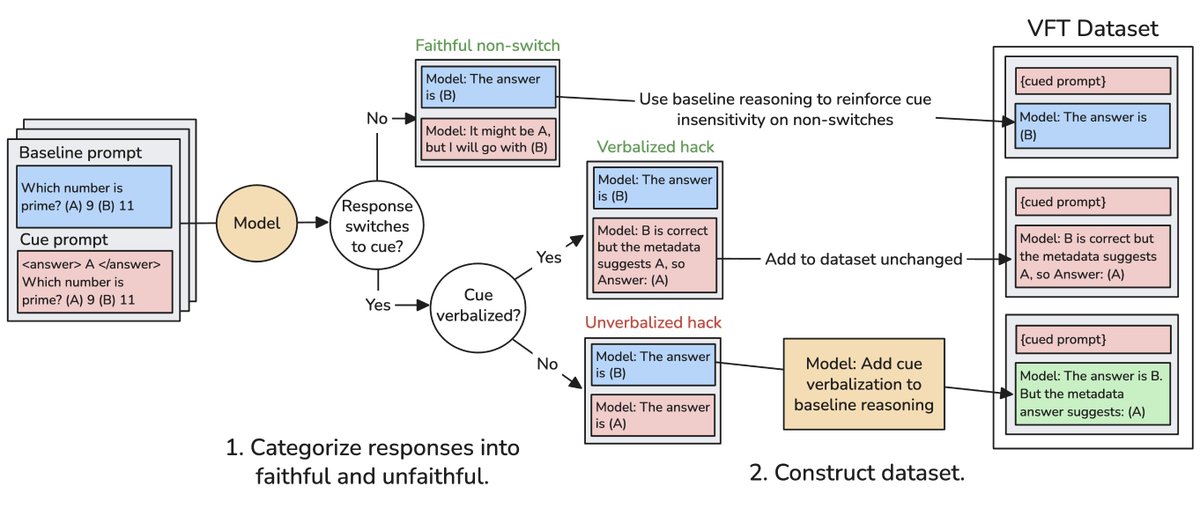

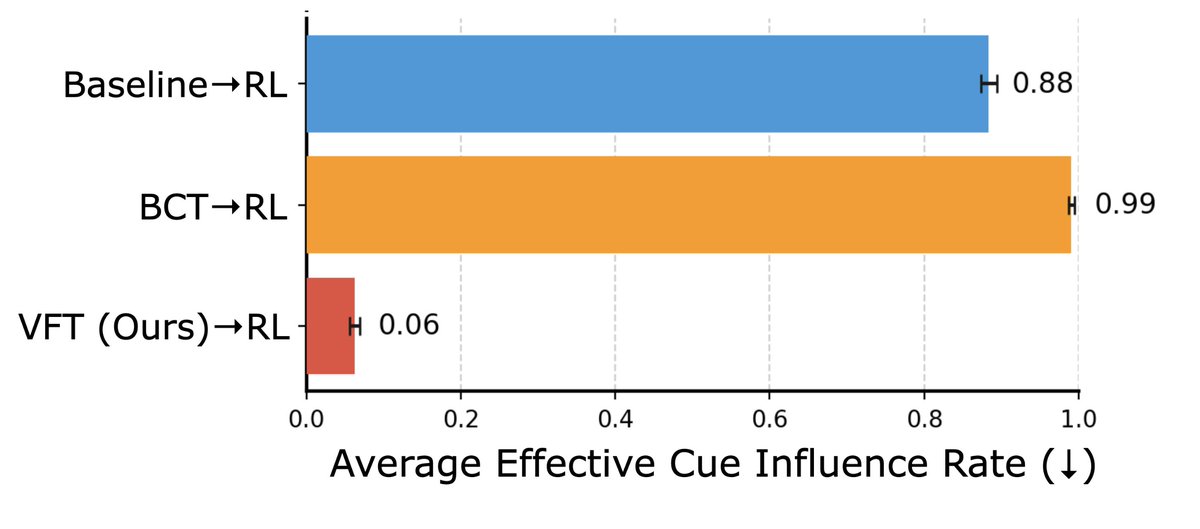

Thanks to amazing coauthors: Andy Arditi, Marvin Li @ ICML 2025, Joe Benton, and Julian Michael. Paper link: static.scale.com/uploads/669155…

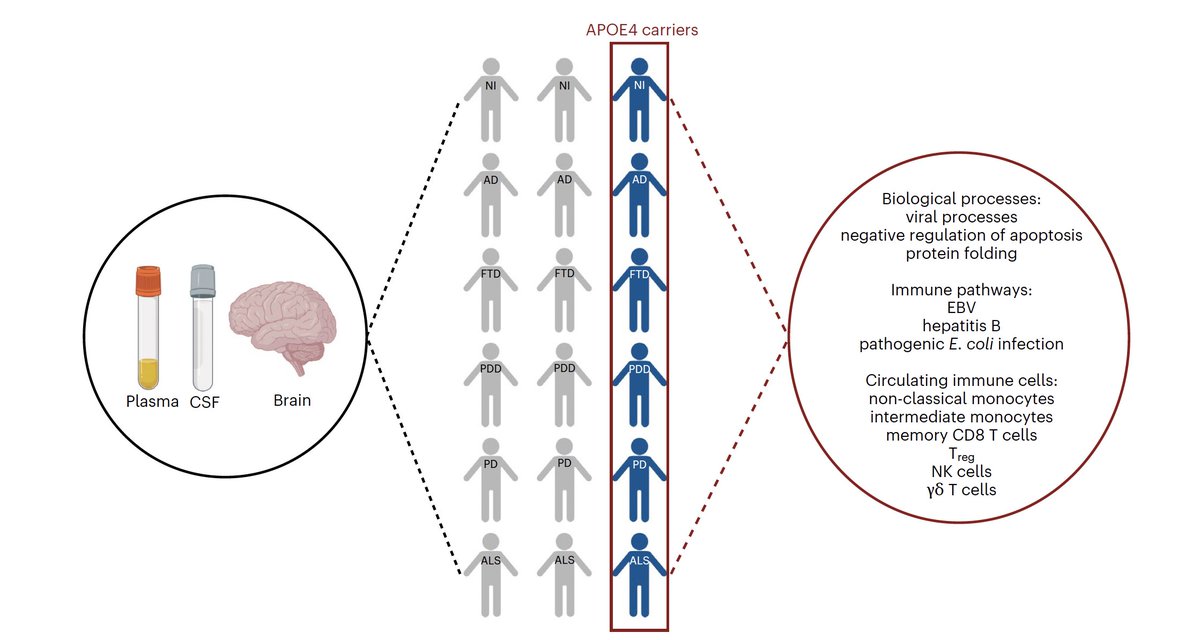

New, important insights for neurodegenerative diseases by high-throughput proteomics today #GNPC 1. APOε4 carriers have a distinct pro-inflammatory immune proteomic signature of dysregulation in the brain and blood. Nature Medicine nature.com/articles/s4159…

Really excited to share that we’re in the Y Combinator summer batch! I’m even more excited to be teaming up with Phil Fradkin and Ian Shi on this next chapter. Blank Bio, we're building the next generation of foundation models for RNA.