Piyush Bagad

@bagad_piyush

Current: 1st year DPhil at VGG Oxford

Past: MS in AI @UvA_Amsterdam

ID: 1162596991072735232

http://bpiyush.github.io

17-08-2019 05:28:27

376 Tweets

332 Followers

550 Following

Our #CVPR2025 work on understanding movie characters by predicting their emotions (happy, angry, ...) and mental states (honest, helpful, ...) is now available! arxiv.org/abs/2304.05634 A first big publication for my MS students Dhruv Srivastava and rodo at IIIT Hyderabad! 🧵1/N

Our paper "CounTX: Open-world Text-specified Object Counting" won a Best Poster Award at British Machine Vision Conference (BMVC) 2023 last week! Congrats to Niki Amini-Naieni! The model counts the number of objects from free-form text queries. Code and weights are available here: github.com/niki-amini-nai…

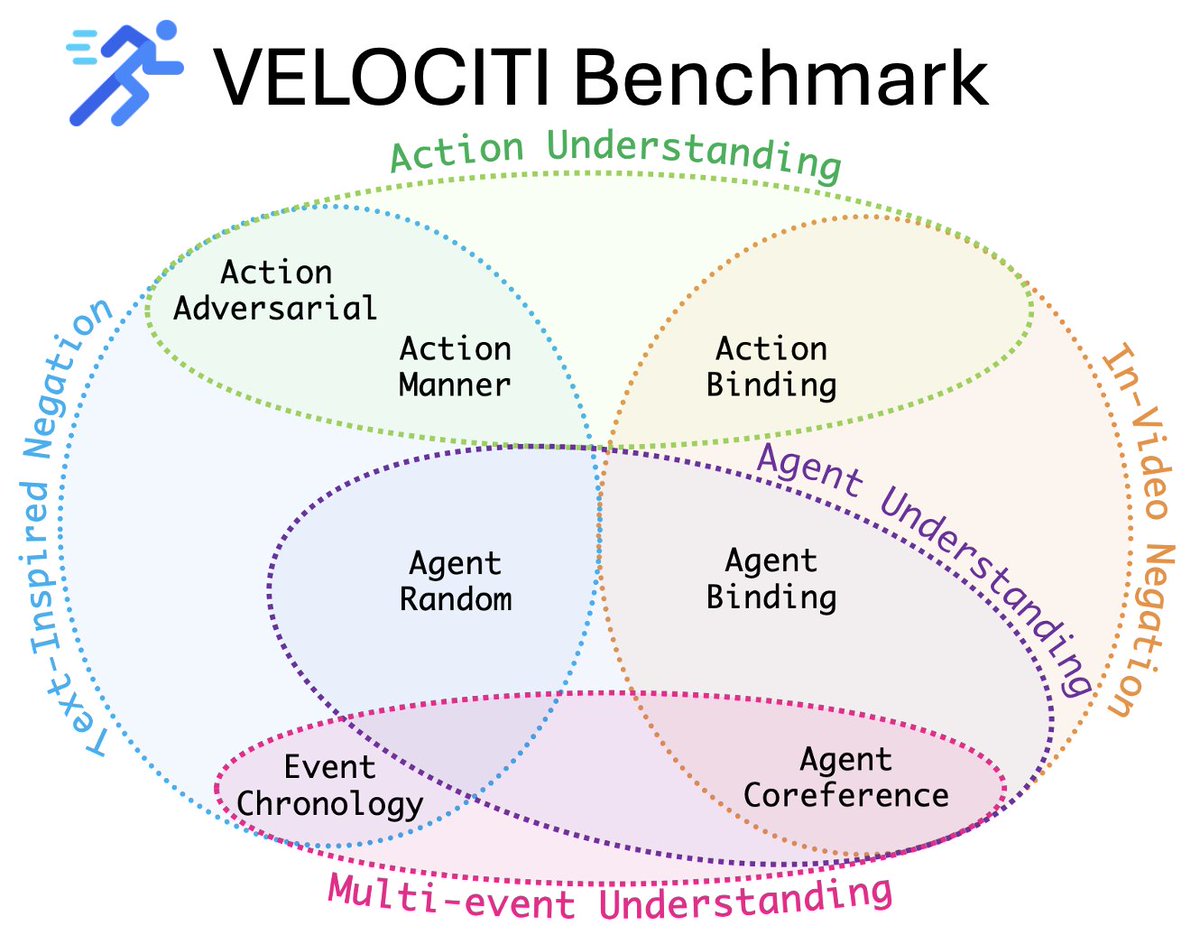

🔔New #CVPR2025 paper evaluating compositional reasoning of Video-LLMs on 10s, action-packed clips! 🥁 VELOCITI features 7 tests to disentangle and assess the comprehension of people, actions, and their associations across multiple events. katha-ai.github.io/projects/veloc… 🧵 1/9 #CVPR2025

Bharat Ratna for Mohammed Siraj!