Brian Bartoldson

@bartoldson

ML researcher

ID: 783700916742524929

http://brianbartoldson.wordpress.com 05-10-2016 16:10:33

225 Tweets

290 Followers

461 Following

GSM8K has been a cornerstone benchmark for LLMs, but performance seemed stuck around 95%. Why? Turns out, the benchmark itself was noisy. We fixed that, and found that it significantly affects evals. Introducing GSM8K-Platinum! w/ Eddie Vendrow, Josh Vendrow, Sara Beery

At Lawrence Livermore National Laboratory, we are using AI to:

⚛️ Solve nuclear fusion
🧪 Discover critical materials
🧠 Red-team vulnerabilities

All to push science forward and protect national security 🌎

Post-training LLMs at scale can unlock these advances. But even with El Capitan—the world’s

🥳Come chat with Brian Bartoldson and Moksh Jain at our TBA poster at the #ICLR25 workshop on Open Science for Foundation Models (SCI-FM). The workshop will be held in EXPO Hall 4 #5 on Monday, April 28th.

![fly51fly (@fly51fly) on Twitter photo [LG] Trajectory Balance with Asynchrony: Decoupling Exploration and Learning for Fast, Scalable LLM Post-Training

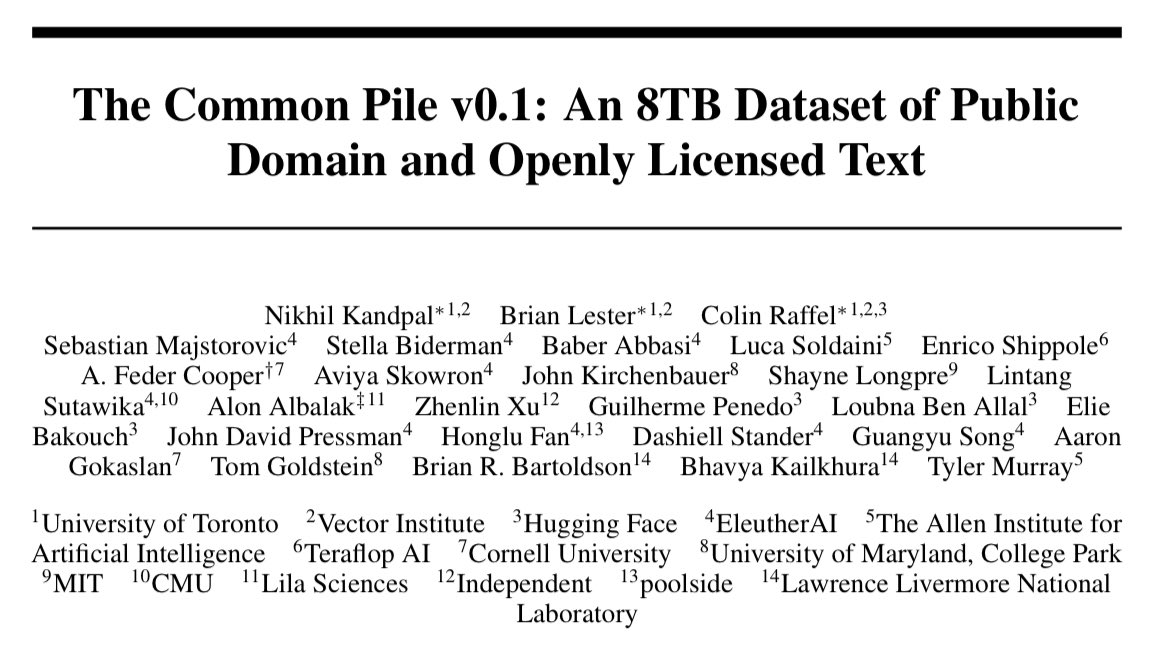

B R. Bartoldson, S Venkatraman, J Diffenderfer, M Jain... [Lawrence Livermore National Laboratory & Mila] (2025)

arxiv.org/abs/2503.18929 [LG] Trajectory Balance with Asynchrony: Decoupling Exploration and Learning for Fast, Scalable LLM Post-Training

B R. Bartoldson, S Venkatraman, J Diffenderfer, M Jain... [Lawrence Livermore National Laboratory & Mila] (2025)

arxiv.org/abs/2503.18929](https://pbs.twimg.com/media/GnPS6pNaMAA7OW3.jpg)