Michael Hodel

@bayesilicon

writer (of programs) | AI researcher @tufalabs

ID: 1460008017521434628

http://tufalabs.ai 14-11-2021 22:13:39

97 Tweets

927 Followers

651 Following

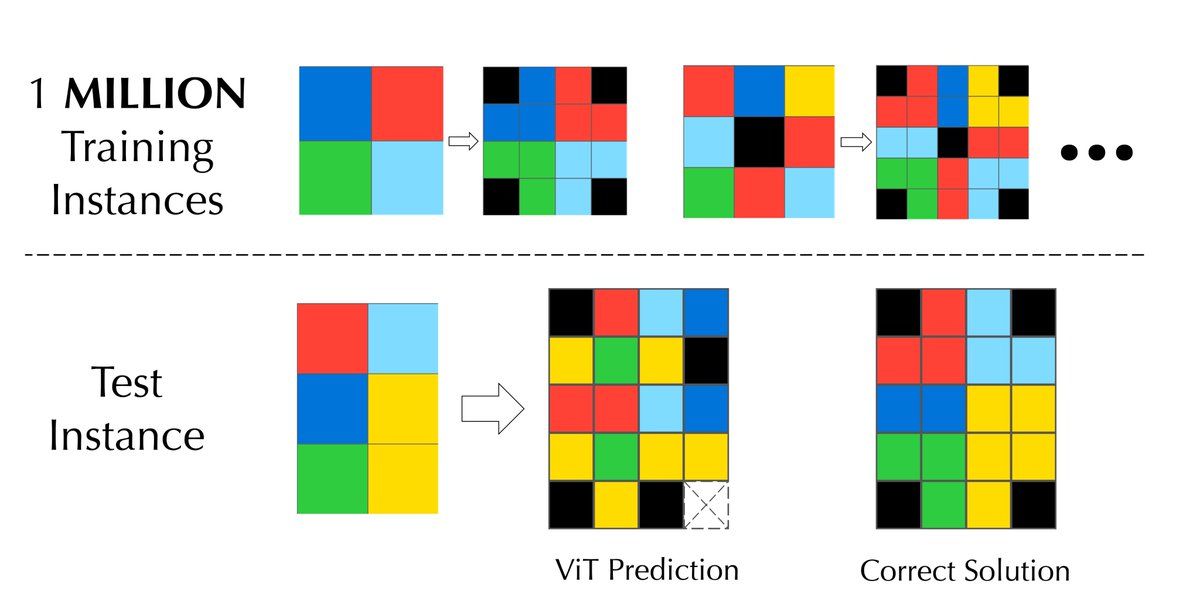

We trained a Vision Transformer to solve ONE single task from François Chollet and Mike Knoop’s ARC Prize. Unexpectedly, it failed to produce the test output, even when using 1 MILLION examples! Why is this the case? 🤔

New SoTA on ARC-AGI. Nothing like the synergy of an awesome team (Michael Hodel, Mohamed Osman). From 53 to 54.5 today. Onward and upward! 🚀 ARC Prize, Mike Knoop, François Chollet, Bryan Landers, Greg Kamradt, Machine Learning Street Talk First. #kaggle - kaggle.com/competitions/a…

New ARC-AGI paper (ARC Prize) w/ fantastic collaborators Wen-Ding Li @ ICLR'25, Keya Hu, Zenna Tavares, evanthebouncy, Basis. For few-shot learning: is it better to construct a symbolic hypothesis/program, or to have a neural net do it all, à la in-context learning? cs.cornell.edu/~ellisk/docume…

I finally got to meet François Chollet in person recently to interview him about ARC Prize, intelligence vs. memorization, human cognitive development, learning abstractions, the limits of pattern recognition, and consciousness development. These are the best bits. Full show released tomorrow.

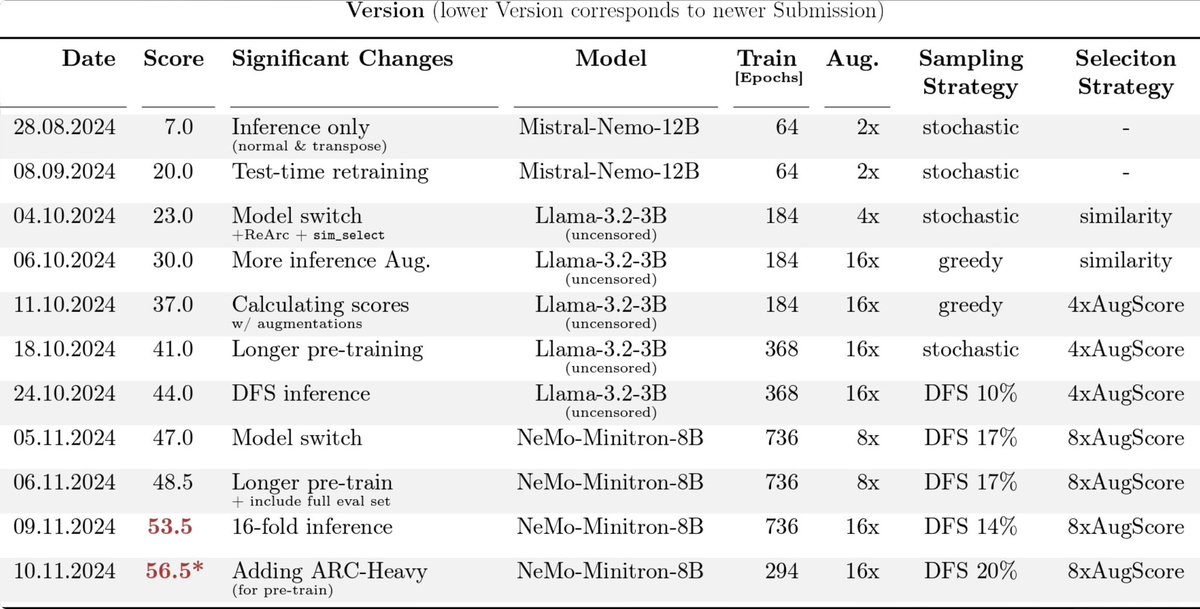

ARC Prize 2024 🥈 place paper by the ARChitects, who scored 53.5 (56.5): github.com/da-fr/arc-priz… - Transformers/LLMs are for ARC what ConvNets were for ImageNet: strong base model, TTT, specialized datasets (e.g. Michael Hodel’s re-arc) + novel: DFS sampling with LLM critique

Today, MindsAI (Jack Cole, Mohamed Osman, Michael Hodel) becomes part of Tufalabs. First assignment: complete the ARC Prize challenge.

Excited to advance our lead and SoTA score on ARC-AGI-2 (ARC Prize) by 3 points to 15.28. Dries Smit, Mohamed Osman, Michael Hodel, Greg Kamradt, Tufalabs. kaggle.com/competitions/a…