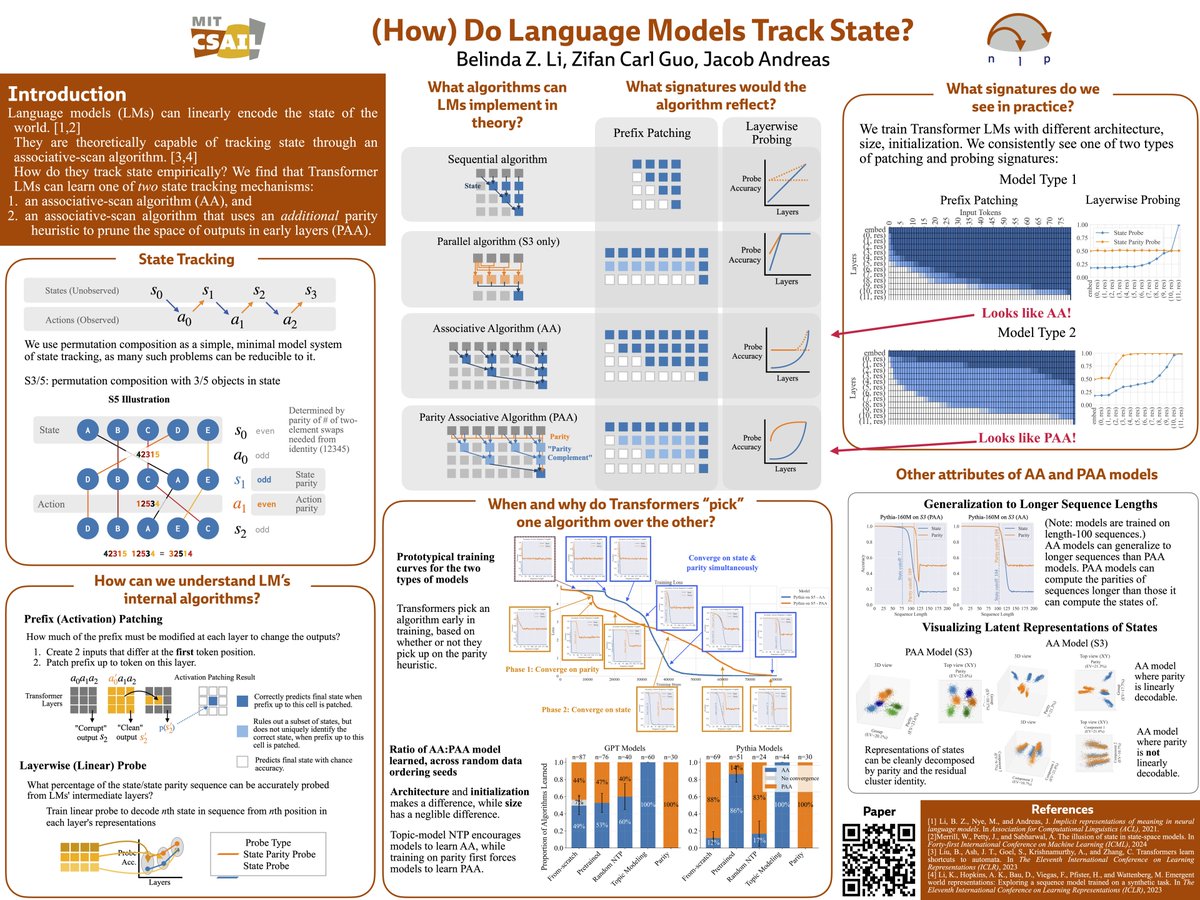

Belinda Li @ ICLR 2025

@belindazli

PhD student @MIT_CSAIL | formerly SWE @facebookai, BS'19 @uwcse | NLP, ML

ID: 1188224435364327424

http://belindal.github.io

26-10-2019 22:42:43

434 Tweets

2.2K Followers

650 Following

Excited to announce that this fall I'll be joining Jacob Andreas's amazing lab at MIT for a postdoc to work on interp. for reasoning (with Ev (like in 'evidence', not Eve) Fedorenko 🇺🇦 🤯 among others). Cannot wait to think more about this direction in such a dream academic context!

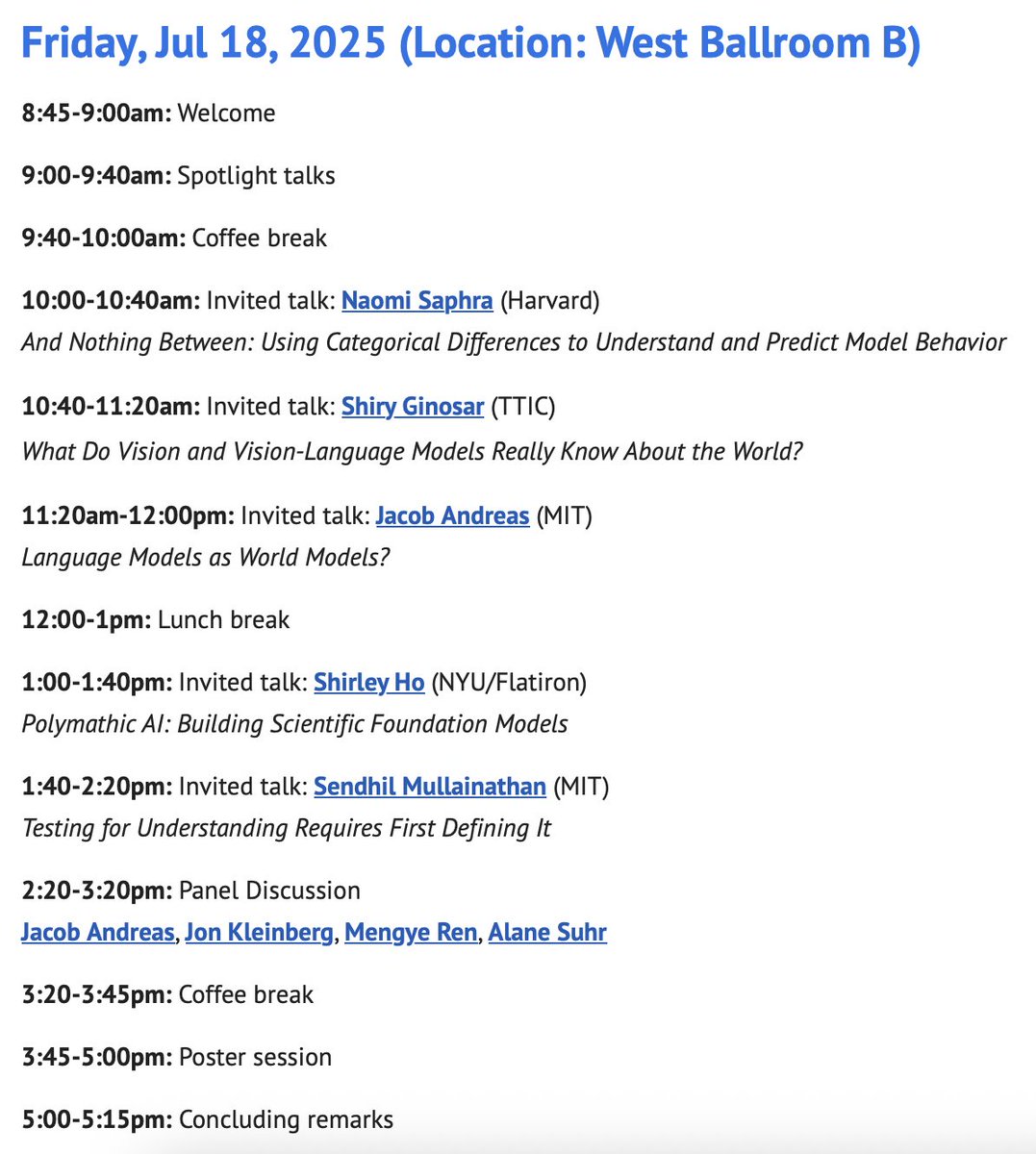

For this week’s NLP Seminar, we are thrilled to host Jacob Andreas to talk about “Just Asking Questions”. When: 5/15 Thurs 11am PT. Non-Stanford affiliates registration form: forms.gle/svy5q5uu7anHw7…

Thrilled to announce I’ll be joining Purdue Computer Science as an Assistant Professor in Fall 2026! My lab will work on AI thought partners, machines that think with people rather than instead of people – I'll be recruiting PhD students this upcoming cycle so reach out & apply if interested!

Life Update: I will join School of Information - UT Austin as an Assistant Professor in Fall 2026 and will continue my work on LLM, HCI, and Computational Social Science. I'm building a new lab on Human-Centered AI Systems and will be hiring PhD students in the coming cycle!