Ben Athiwaratkun @ ICLR

@ben_athi

Leading Turbo Team @ Together AI. prev: @awscloud @MSFTResearch, @Cornell PhD.

ID: 2613894511

http://benathi.github.io 09-07-2014 16:57:43

360 Tweets

820 Followers

694 Following

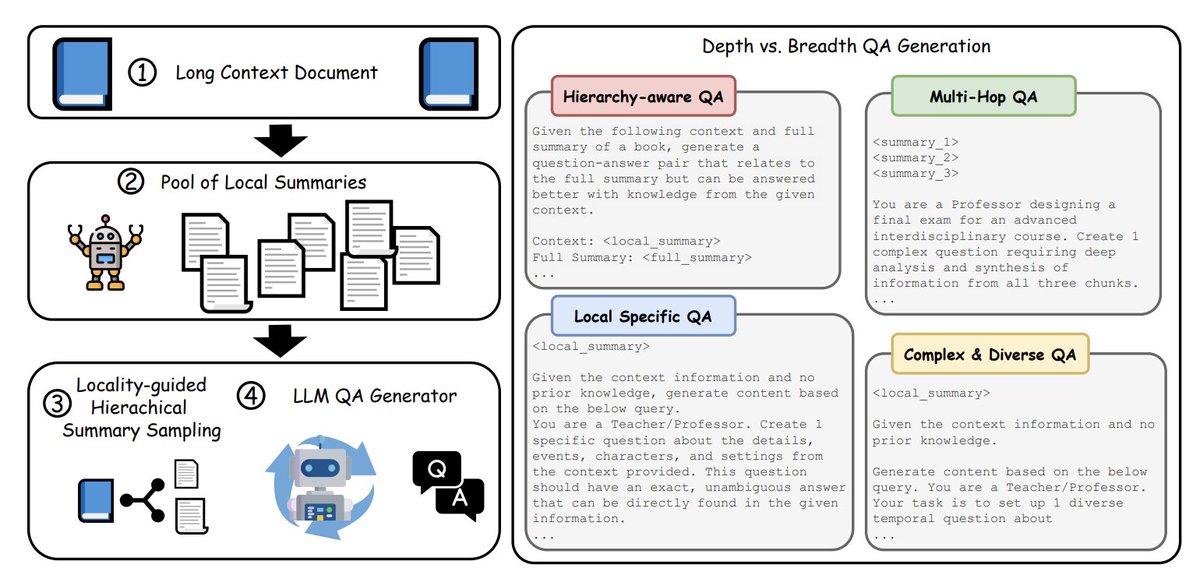

Excited to share work from my Together AI internship—a deep dive into inference‑time scaling methods 🧠 We rigorously evaluated verifier‑free inference-time scaling methods across both reasoning and non‑reasoning LLMs. Some key findings: 🔑 Even with huge rollout budgets,

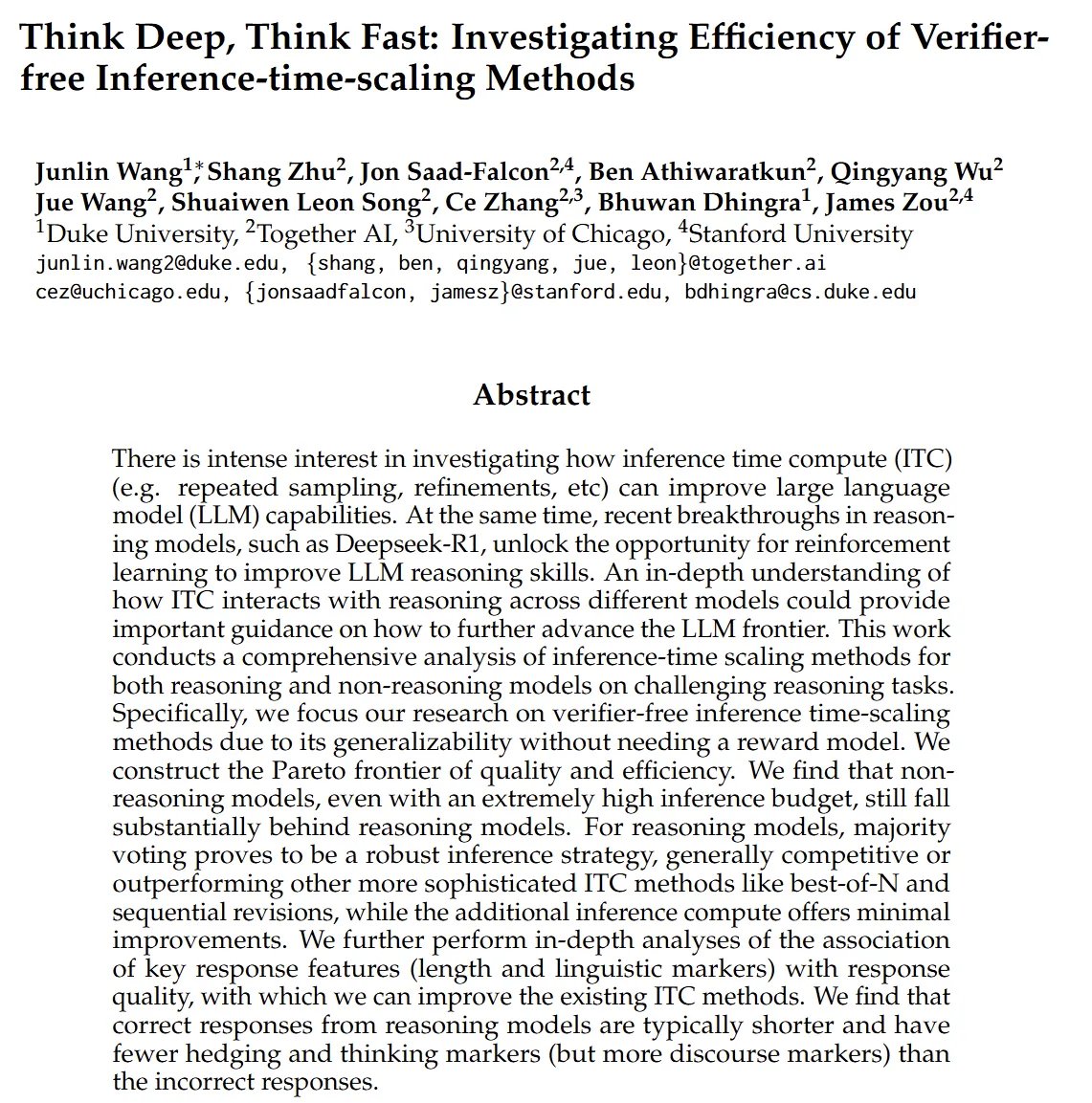

🚀 New research: YAQA — Yet Another Quantization Algorithm (yes, pronounced like yaca/jackfruit 🥭) Led by Albert Tseng, YAQA minimizes the KL divergence to the original model during quantization, cutting it by >30% vs. prior methods and outperforming even QAT on Gemma 3. 👇

Together AI is building 2 gigawatts of AI factories (~100,000 GPUs) in the EU over the next 4 years, with the first phase live in H2 2025. AI compute is at <1% saturation relative to our 2035 forecast, and we are starting early to build a large-scale sustainable AI cloud.

![Together AI (@togethercompute) on Twitter photo](https://pbs.twimg.com/media/GuYQjSHWMAAld1S.jpg)

🔓⚡ FLUX.1 Kontext [dev] just landed on Together AI

First open-weight model w/ proprietary-level image editing:

🎨 Perfect character consistency

🏆 Beats Gemini Flash + competitors

🛠️ Full model weights for customization

Enterprise-quality editing, open weights💎