Berkeley AI Research

@berkeley_ai

We're graduate students, postdocs, faculty and scientists at the cutting edge of artificial intelligence research.

ID: 891077171673931776

http://bair.berkeley.edu/

28-07-2017 23:25:27

978 Tweets

202,202K Followers

304 Following

Excited to have two papers accepted to the ACL 2025 main conference! 🎉 1. ChatBench, with Jake Hofman and Ashton Anderson: we conduct a large-scale user study that converts static benchmark questions into human-AI conversations, showing how benchmarks fail to predict human-AI outcomes.

It was a pleasure to share my PhD work on measuring pain in patients under anesthesia using wearable devices with the UC Joint Computational Precision Health Program! My lab continues this work by studying diseases using novel wearable devices and algorithms. See our website at subramanianlab.com!

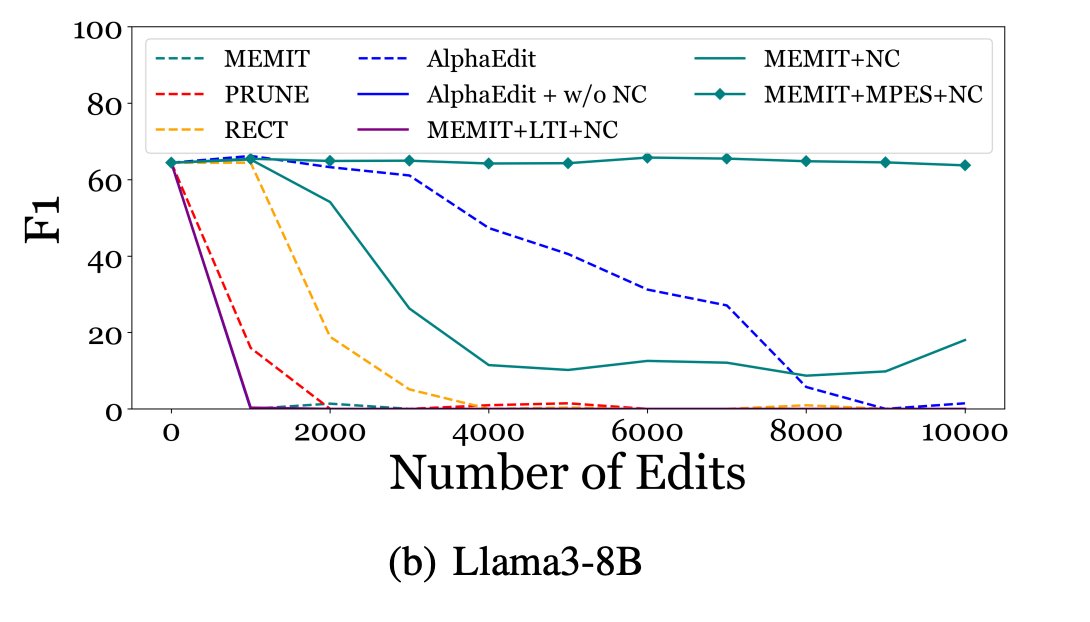

Just did a major revision to our paper on Lifelong Knowledge Editing! 🔍 Key takeaway (+ our new title): "Lifelong Knowledge Editing requires Better Regularization". Fixing this leads to consistent downstream performance! With Tom Hartvigsen, Ahmed Alaa, Gopala Anumanchipalli, and Berkeley AI Research.

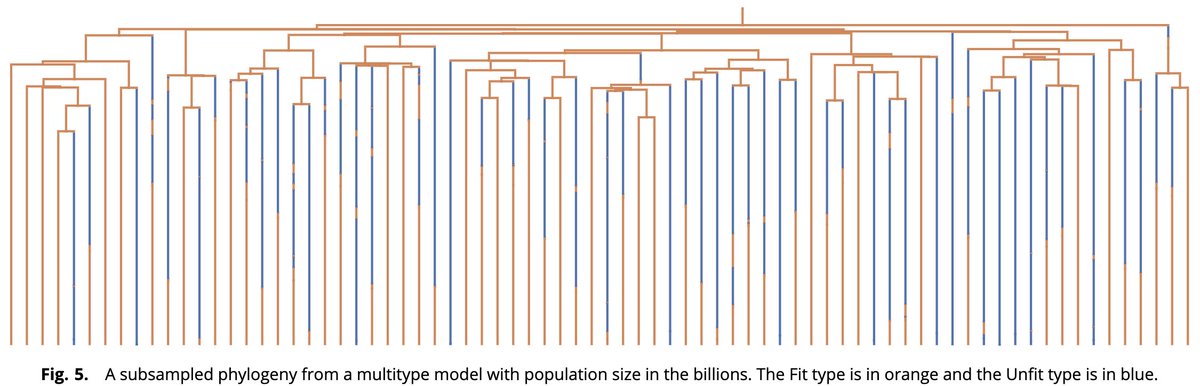

How can one efficiently simulate phylodynamics for populations with billions of individuals, as is typical in many applications, e.g., viral evolution and cancer genomics? In this work with Michael Celentano, W. DeWitt, & S. Prillo, we provide a solution. doi.org/10.1073/pnas.2… 1/n