Bethge Lab

@bethgelab

Perceiving Neural Networks

ID: 882978663825846273

http://bethgelab.org 06-07-2017 15:04:53

308 Tweets

3.3K Followers

257 Following

Excited to announce Centaur -- the first foundation model of human cognition. Centaur can predict and simulate human behavior in any experiment expressible in natural language. You can readily download the model from Hugging Face and test it yourself: huggingface.co/marcelbinz/Lla…

For our "Automated Assessment of Teaching Quality" project, we are looking for two PhD students: one in educational/cognitive sciences or a related field (uni-tuebingen.de/fakultaeten/wi…) and one in machine learning (uni-tuebingen.de/en/faculties/f…). Please apply and reach out to me for details!

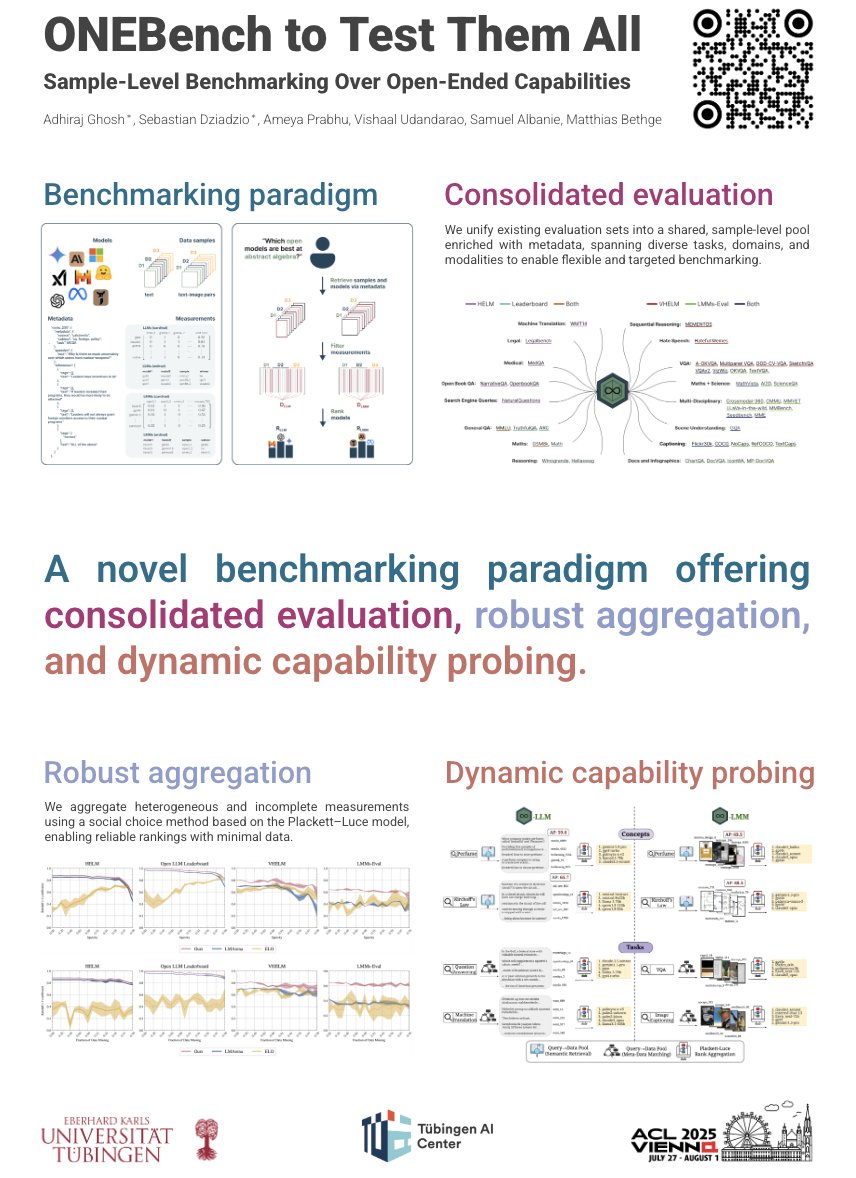

Excited to be in Vienna for #ACL2025🇦🇹! You'll find Sebastian Dziadzio and me by our ONEBench poster, so do drop by! 🗓️ Wed, July 30, 11-12:30 CET 📍 Hall 4/5. I’m also excited to talk about lifelong and personalised benchmarking, data curation and vision-language in general! Let’s connect!

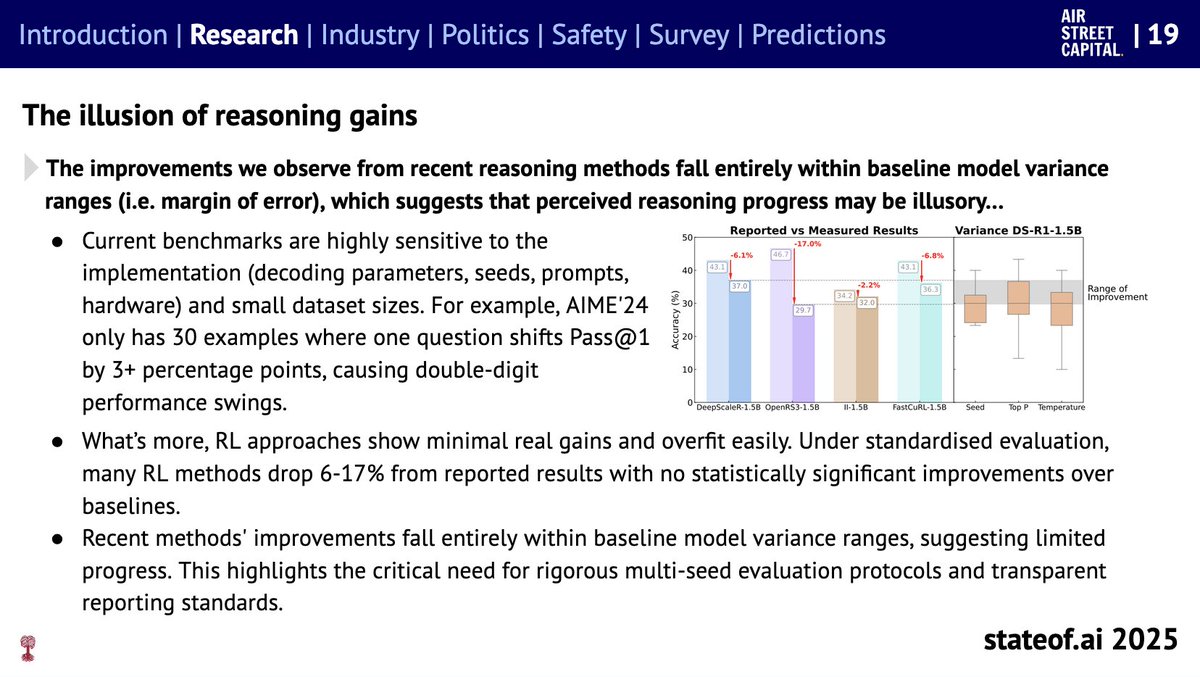

Great to see that our work is featured in the State of AI 2025 🎉 Hardik Bhatnagar, Vishaal Udandarao, Samuel Albanie 🇬🇧, Ameya P. @ COLM 2025, Matthias Bethge