Sanyam Bhutani

@bhutanisanyam1

👨‍💻 Working on llama models @AIatMeta | Previously: @h2oai, @weights_biases 🎙 Podcast @ctdsshow 👨‍🎓 Fellow @fastdotai 🎲 Grandmaster @Kaggle

ID: 784597005825871876

https://www.youtube.com/c/chaitimedatascience

Joined: 08-10-2016 03:31:18

8,8K Tweets

39,39K Followers

989 Following

Also note: if you're not getting good Llama 4 results, there are a few bugs:
1. QK Norm eps should be 1e-5 (collabed with HF on the fix!): github.com/huggingface/tr…
2. RoPE scaling for Scout changed: github.com/ggml-org/llama…
3. vLLM: the shared QK norm fix gives +2% accuracy: github.com/vllm-project/v…
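Why does a QK Norm epsilon of 1e-5 versus 1e-6 matter? A minimal sketch, assuming (not taken from the linked fix) that the QK norm is a weightless RMS-style normalization applied to each query/key head vector; `qk_norm` is a hypothetical helper. Because eps is added to the mean square before the square root, it dominates when the vector's entries are small:

```python
import math

def qk_norm(vec, eps=1e-5):
    """Hypothetical weightless RMS-style norm for one query or key head vector.

    eps is added to the mean of squares before the square root, so its value
    matters most when the entries of vec are small.
    """
    mean_sq = sum(x * x for x in vec) / len(vec)
    scale = 1.0 / math.sqrt(mean_sq + eps)
    return [x * scale for x in vec]

# A small-magnitude query vector (illustrative values only).
small_q = [0.001, -0.002, 0.0015, 0.0005]
print(qk_norm(small_q, eps=1e-5))
print(qk_norm(small_q, eps=1e-6))
```

For this vector the eps=1e-6 output is about twice as large as the eps=1e-5 output, so a model trained with one value and served with the other sees differently scaled attention logits.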

We’re excited to showcase all the amazing ways you’ve been building with Llama + Unsloth at LlamaCon 2025! 🦥🦙 Get ready for surprises and exciting announcements from Meta and us on Apr 29 in SF. 👀 Also big thanks to AI at Meta for the support and awesome merch!

Super excited to launch Synthetic-Data-Kit! 🙏 Fine-tuning LLMs is easy: there are many packages to get started, and Unsloth AI is my absolute favorite. However, there is still a BIG HURDLE when working on fine-tuning: data preparation. Today I’m super grateful to be launching a

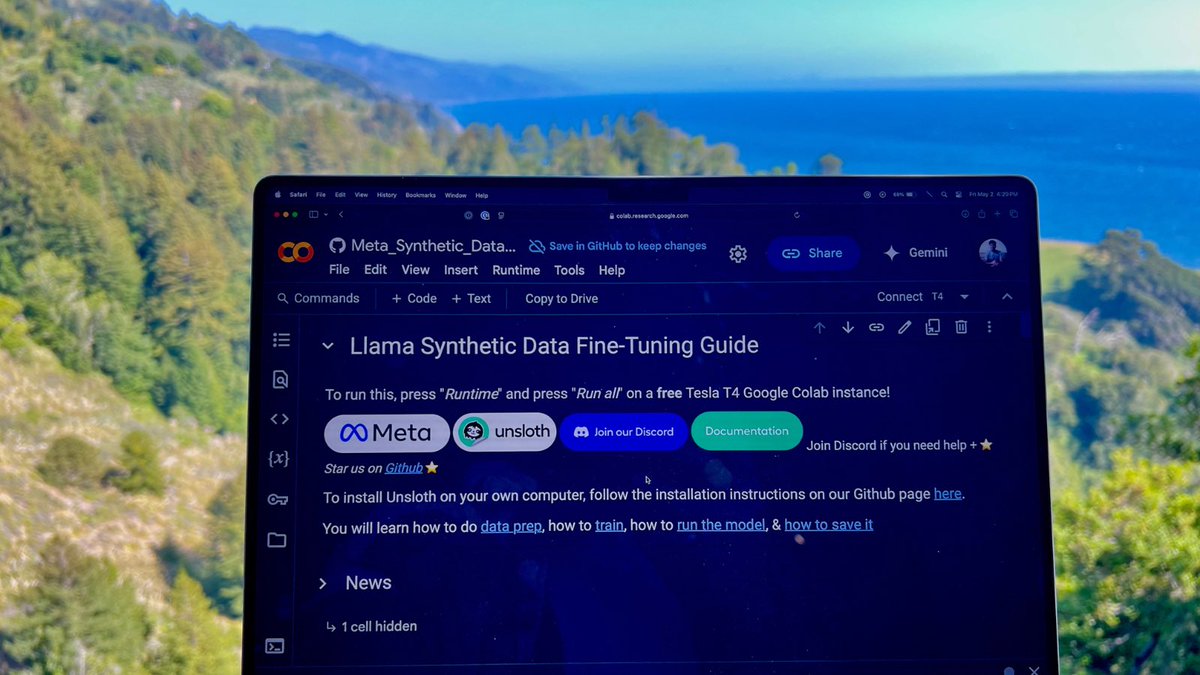

We partnered with AI at Meta on a free notebook that turns your documents into high-quality synthetic datasets using Llama! Features:
• Parses PDFs, websites, videos
• Uses Llama to generate QA pairs + auto-filter data
• Fine-tunes Llama on the dataset
🔗colab.research.google.com/github/unsloth…
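The "auto-filter, then fine-tune" step can be sketched in plain Python. This is not the notebook's code: the record fields (`question`, `answer`, `rating`), the 7.0 threshold, and the chat-style `messages` layout are assumptions for illustration, and `curate` / `to_chat_jsonl` are hypothetical helpers.

```python
import json

# Hypothetical generated pairs; "rating" stands in for a quality score
# assigned by an LLM judge (field names and scale are assumptions).
qa_pairs = [
    {"question": "What is the capital of France?", "answer": "Paris.", "rating": 9.0},
    {"question": "Is this pair useful?", "answer": "Not really.", "rating": 3.0},
]

def curate(pairs, threshold=7.0):
    """Keep only pairs whose judge rating meets the threshold."""
    return [p for p in pairs if p.get("rating", 0) >= threshold]

def to_chat_jsonl(pairs, system_prompt="You are a helpful assistant."):
    """Render each QA pair as one JSON line in a chat-style messages format."""
    lines = []
    for p in pairs:
        record = {"messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": p["question"]},
            {"role": "assistant", "content": p["answer"]},
        ]}
        lines.append(json.dumps(record))
    return "\n".join(lines)

kept = curate(qa_pairs)
print(to_chat_jsonl(kept))
```

Filtering before formatting keeps low-quality pairs out of the training file entirely; the threshold is the main knob trading dataset size against quality.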

Llama Synthetic Data Fine-Tuning Guide! 🙏 My favourite thing about this tutorial: everything is powered by a 3B model. It covers a step that's overlooked everywhere: data preparation and generation for fine-tuning. Thanks to the Unsloth AI team for this gem: colab.research.google.com/github/unsloth…
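Data preparation for a small model typically starts by splitting a parsed document into pieces that fit comfortably in its prompt. A minimal sketch, assuming character-based windows with overlap; `chunk_text`, `build_prompts`, the window sizes, and the prompt wording are hypothetical and not taken from the tutorial:

```python
# Hypothetical prompt template for asking a model to write QA pairs from one chunk.
PROMPT_TEMPLATE = (
    "Generate {n} question-answer pairs grounded only in the text below.\n"
    "Return JSON: [{{\"question\": ..., \"answer\": ...}}]\n\nText:\n{chunk}"
)

def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into overlapping character windows."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks

def build_prompts(text, n_pairs=3):
    """One generation prompt per chunk."""
    return [PROMPT_TEMPLATE.format(n=n_pairs, chunk=c) for c in chunk_text(text)]

print(chunk_text("abcdefghij", chunk_size=4, overlap=1))
```

Overlap keeps a sentence that straddles a boundary visible in at least one chunk; token-based windows would be more precise but require the model's tokenizer.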

You can now fine-tune TTS models with Unsloth! Train, run and save models like Sesame-CSM and OpenAI's Whisper locally with our free notebooks. Unsloth makes TTS training 1.5x faster with 50% less VRAM. GitHub: github.com/unslothai/unsl… Docs & Notebooks: docs.unsloth.ai/basics/text-to…