Bill Psomas

@bill_psomas

Postdoctoral researcher @ VisualRecognitionGroup, @CVUTPraha. PhD @ntua. Former IARAI, @Inria, @athenaRICinfo intern. Photographer. Crossfit freak.

ID: 1394460390319341569

http://users.ntua.gr/psomasbill/ 18-05-2021 01:10:46

260 Tweets

342 Followers

795 Following

At NAVER LABS Europe in Grenoble, France, we are searching for talented PhD interns for work on Spatial AI, geometric and robotic foundation models for navigation and manipulation. If you have experience in Embodied AI and are interested, DM me.

🚀Exciting news🚀 I’ve been awarded the Marie Skłodowska-Curie Postdoctoral Fellowship (#MSCA-PF) 2024 with 98/100!🎉 🥟My project, RAVIOLI, hosted at ČVUT v Praze, integrates retrieval-augmented predictions into vision-language models for open-vocabulary segmentation.

Excited to share that the recordings and slides of our SSLBIG tutorial are now online! If you notice any missing reference or have feedback, feel free to reach out. European Conference on Computer Vision #ECCV2026 Stay tuned for future editions! webpage: shashankvkt.github.io/eccv2024-SSLBI… Youtube: youtube.com/@SSLBiG_tutori…

![Marcin Przewięźlikowski (@pszwnzl) on Twitter photo Our paper "Beyond [cls]: Exploring the True Potential of Masked Image Modeling Representations" has been accepted to <a href="/ICCVConference/">#ICCV2025</a>!

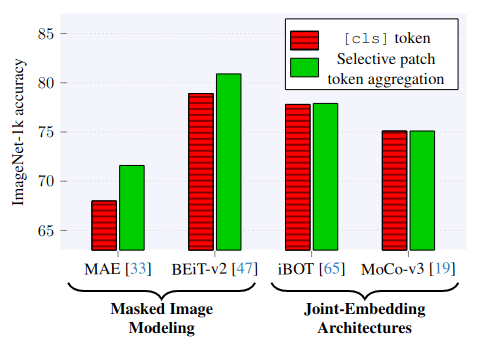

🧵 TL;DR: Masked image models (like MAE) underperform not just because of weak features, but because they aggregate them poorly.

[1/7] Our paper "Beyond [cls]: Exploring the True Potential of Masked Image Modeling Representations" has been accepted to <a href="/ICCVConference/">#ICCV2025</a>!

🧵 TL;DR: Masked image models (like MAE) underperform not just because of weak features, but because they aggregate them poorly.

[1/7]](https://pbs.twimg.com/media/GuYCGHWWcAAIgkL.jpg)