BIU NLP

@biunlp

The Natural Language Processing group at Bar-Ilan University.

ID: 347798643

https://biu-nlp.github.io/ 03-08-2011 11:21:06

192 Tweets

740 Followers

103 Following

🎉 Our paper, GenerationPrograms, which proposes a modular framework for attributable text generation, has been accepted to Conference on Language Modeling! GenerationPrograms produces a program that executes to text, providing an auditable trace of how the text was generated and major gains on

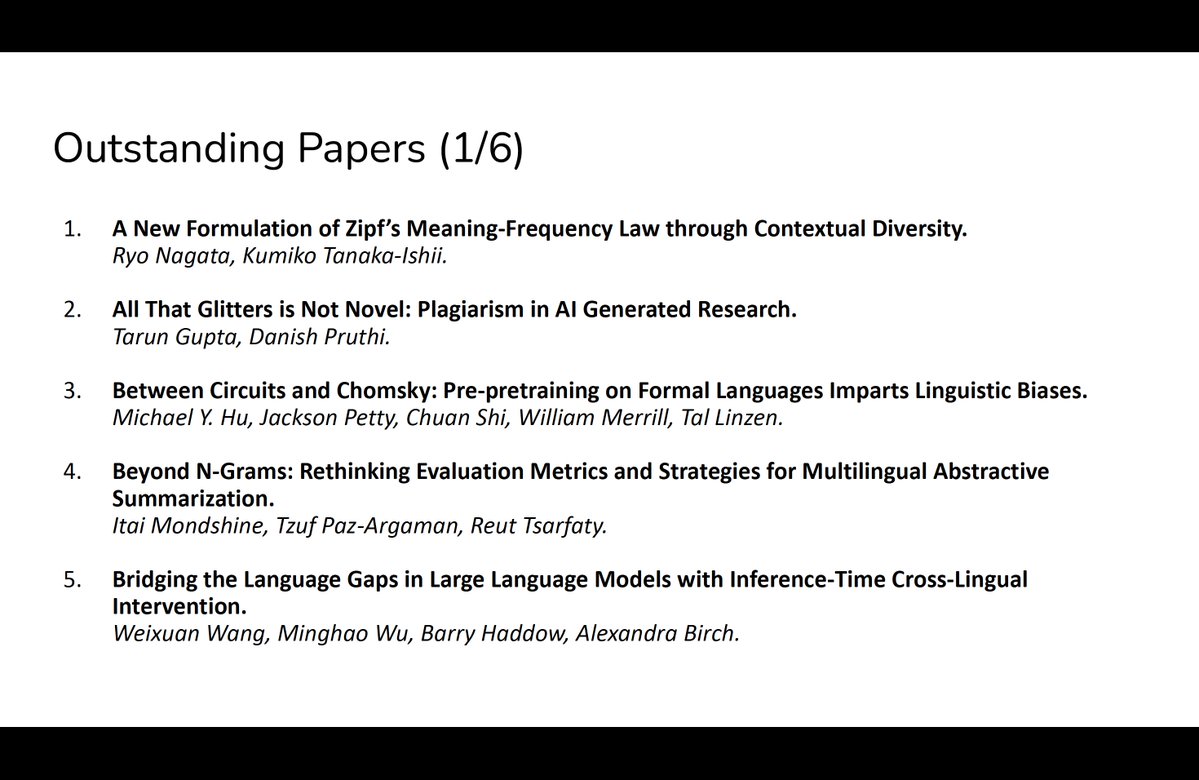

Congrats Itai Mondshine, Tzuf - צוף, and Reut Tsarfaty!

BIU NLP Itai Mondshine Reut Tsarfaty Our paper "Beyond N-Grams: Rethinking Evaluation Metrics and Strategies for Multilingual Abstractive Summarization": arxiv.org/pdf/2507.08342

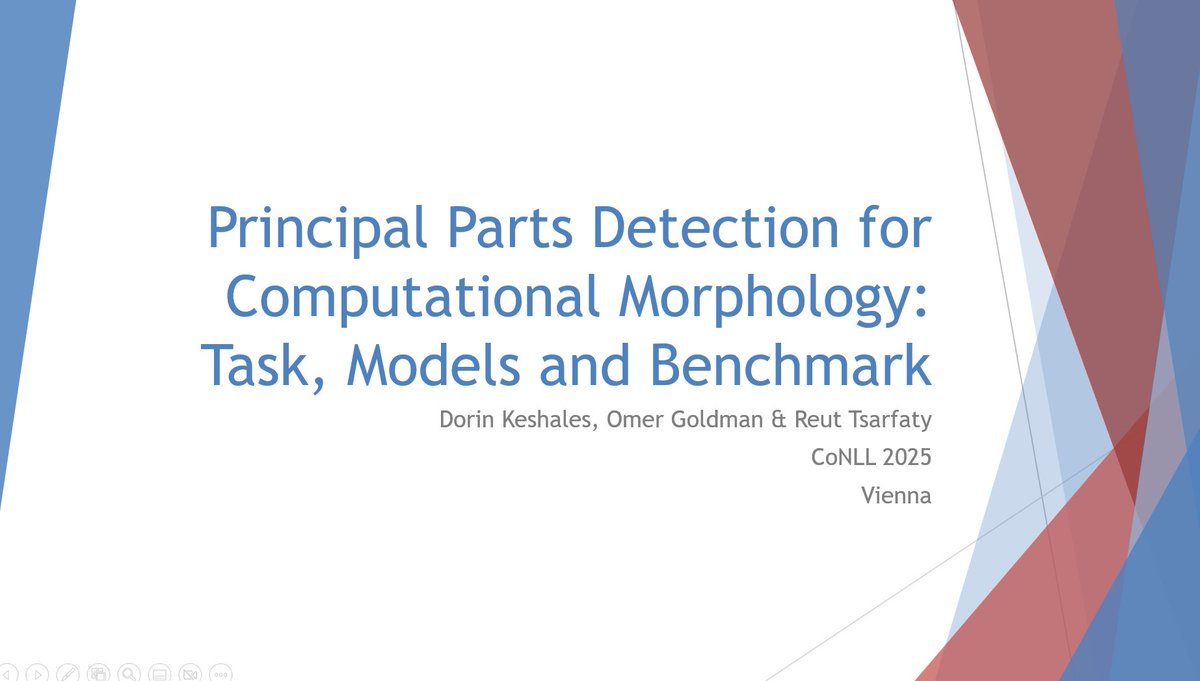

Are you still around Vienna? Come hear about a new morphological task at CoNLL at ~11:20 (hall M.1) Reut Tsarfaty

Led by Aviya Maimon, our new paper redefines how we evaluate LLMs. Instead of one flat leaderboard score, we uncover the latent skills—reasoning, comprehension, ethics, precision & more—that really shape LLM ability. Think: psychometrics meets AI. link: arxiv.org/pdf/2507.20208

1/8 Happy to share our new paper, “IQ Test for LLMs”, co-authored with Aviya Maimon, Amir David Nissan Cohen, Gal Vishne @neurogal.bsky.social and Reut Tsarfaty. We propose to rethink how language models are evaluated by focusing on the latent capabilities that explain benchmark results. Arxiv: arxiv.org/pdf/2507.20208

We introduce CoCI, which improves fine-grained visual discrimination in LVLMs using contrast images. It shows up to a 98.9% improvement on NaturalBench across three different supervision regimes. Reut Tsarfaty 📄 aclanthology.org/anthology-file…