black_samorez

@black_samorez

ML PhD @ISTAustria and @EllisForEurope

ID: 1304537032367239169

11-09-2020 21:48:57

24 Tweets

111 Followers

103 Following

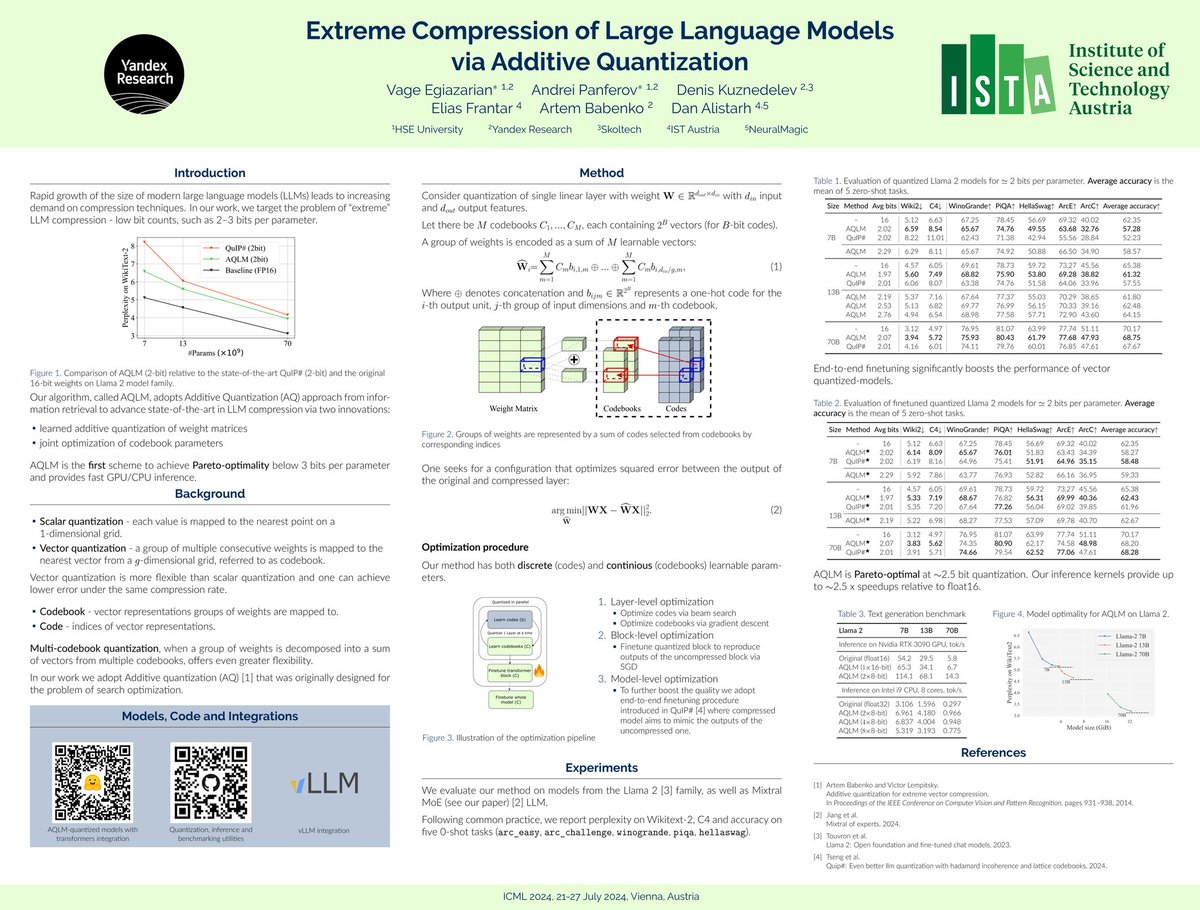

Announcing AQLM v1.1! Featuring: 1. New model collection with SOTA accuracy huggingface.co/collections/IS… 2. Gemma-2B support, running within 1.5GB; 3. LoRA integration for training Mixtral-8x7B on Colab; 4. Faster generation (3x) via CUDA graphs. Check it out: github.com/Vahe1994/AQLM

Introducing Panza V2, our personalized LLM writing assistant, running entirely on-device! Now faster and easier to use: * Local serving via Gmail extension * Cloud training via Lightning AI ⚡️ Studio * More models, including AI at Meta Llama-3.2 * Inference w/ Ollama! Details:

Excited to host black_samorez, a PhD student at IST, who will present 'Pushing the limits of LLM quantization via the linearity theorem' on Jan 10 @ 1800 CET at Cohere For AI. Really cool results, and I look forward to the talk. Join the community: tinyurl.com/C4AICommunityA…

We will have black_samorez presenting his work on low-bit pre-training at Cohere For AI next week (stable training at 1 bit weights + activations) -- continuing our theme of low bit training. Looking forward :) To join in, fill the form at: tinyurl.com/C4AICommunityA…

We'll be presenting this on April 27th in Singapore. For now, you can check out this recording of the Cohere For AI efficiency seminar on this topic: youtube.com/watch?v=e3ClKT…

ChatGPT agent mode apparently has enough RAM to load and run a full-fledged 8B LLM in browser. Kudos to Vladimir Malinovskii for implementing AQLM 2-bit quantization in WebAssembly at galqiwi.github.io/aqlm-rs

🎉 Excited to announce the Workshop on Foundations of LLM Security at #EurIPS2025! 🇩🇰 Dec 6–7, Copenhagen! 📢 Call for contributed talks is now open! See details at llmsec-eurips.github.io Kathrin Grosse Ilia Shumailov🦔 Verena Rieser sahar selim taher @thegrue Mario Fritz EurIPS Conference

[1/4] We are introducing Quartet, a fully FP4-native training method for Large Language Models, achieving optimal accuracy-efficiency trade-offs on NVIDIA Blackwell GPUs! Quartet can be used to train billion-scale models in FP4 faster than FP8 or FP16, at matching accuracy.

![Dan Alistarh (@dalistarh) on Twitter photo](https://pbs.twimg.com/media/Gr4-TfhX0AEa_WW.jpg)